SUNNYVALE, Calif., June 22, 2022 – AI computing company Cerebras Systems today announced that a single Cerebras CS-2 system can train models with up to 20 billion parameters, something not possible on any other single device, according to the company.

By enabling a single CS-2 to train these models, Cerebras said it has reduced the system engineering time necessary to run large natural language processing (NLP) models from months to minutes. It also eliminates a painful aspect of NLP, the partitioning of the model across hundreds or thousands of small graphics processing units (GPUs), the company said.

“In NLP, bigger models are shown to be more accurate. But traditionally, only a very select few companies had the resources and expertise necessary to do the painstaking work of breaking up these large models and spreading them across hundreds or thousands of graphics processing units,” said Andrew Feldman, CEO and co-founder of Cerebras Systems. “As a result, only very few companies could train large NLP models – it was too expensive, time-consuming and inaccessible for the rest of the industry. Today we are proud to democratize access to GPT-3XL 1.3B, GPT-J 6B, GPT-3 13B and GPT-NeoX 20B (language models), enabling the entire AI ecosystem to set up large models in minutes and train them on a single CS-2.”

“GSK generates extremely large datasets through its genomic and genetic research, and these datasets require new equipment to conduct machine learning,” said Kim Branson, SVP of Artificial Intelligence and Machine Learning at GSK. “The Cerebras CS-2 is a critical component that allows GSK to train language models using biological datasets at a scale and size previously unattainable. These foundational models form the basis of many of our AI systems and play a vital role in the discovery of transformational medicines.”

These capabilities are achieved by combining the computational resources of the 850,000-core Cerebras Wafer Scale Engine-2 (WSE-2) with the Weight Streaming software architecture extensions available in release R1.4 of the Cerebras Software Platform, CSoft, Cerebras said.

When a model fits on a single processor, AI training is easy. But when a model has more parameters than can fit in memory, or a layer that requires more compute than a single processor can handle, complexity explodes. The model must be broken up and spread across hundreds or thousands of GPUs. This process is painful, often taking months to complete. To make matters worse, the process is unique to each pairing of neural network and compute cluster, so the work is not portable to different compute clusters or to other neural networks. It is entirely bespoke.
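To give a feel for why models of this size stop fitting on one conventional device, the rough estimate below computes the memory needed just to hold weights, gradients and optimizer state for the four models named in the announcement. The byte count per parameter (16, a common rule of thumb for mixed-precision training with an Adam-style optimizer) and the 80 GB device capacity are assumptions made for illustration, not figures from Cerebras. Activations are ignored, so the results are lower bounds.

```python
import math

# Assumed, not from the announcement: fp16 weights (2) + fp16 gradients (2)
# + fp32 master weights, momentum and variance (4 + 4 + 4) = 16 bytes per parameter.
BYTES_PER_PARAM = 16
# Assumed memory capacity of one conventional accelerator, in gigabytes.
DEVICE_MEMORY_GB = 80

# Parameter counts taken from the model names in the announcement.
MODELS = {
    "GPT-3XL 1.3B": 1_300_000_000,
    "GPT-J 6B": 6_000_000_000,
    "GPT-3 13B": 13_000_000_000,
    "GPT-NeoX 20B": 20_000_000_000,
}


def training_memory_gb(num_params, bytes_per_param=BYTES_PER_PARAM):
    """Memory for weights, gradients and optimizer state, in GB (activations ignored)."""
    return num_params * bytes_per_param / 1e9


def min_devices(num_params, device_memory_gb=DEVICE_MEMORY_GB):
    """Smallest number of devices whose combined memory holds the training state."""
    return math.ceil(training_memory_gb(num_params) / device_memory_gb)


if __name__ == "__main__":
    for name, params in MODELS.items():
        print(f"{name}: {training_memory_gb(params):.1f} GB, at least {min_devices(params)} device(s)")
```

Under these assumptions, every model above 1.3 billion parameters already needs its training state split across more than one device, which is where the partitioning work described above begins.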

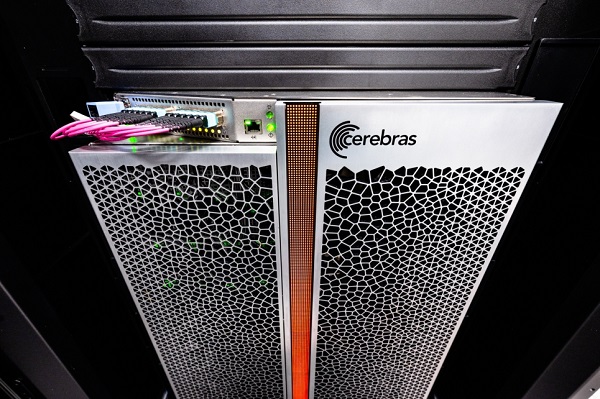

Cerebras CS-2

The company said that compared with the largest GPU, the Cerebras WSE-2 is 56 times larger, has 2.55 trillion more transistors and 100 times as many compute cores. “The size and computational resources on the WSE-2 enables every layer of even the largest neural networks to fit,” the company said in today’s announcement. “The Cerebras Weight Streaming architecture disaggregates memory and compute allowing memory (which is used to store parameters) to grow separately from compute. Thus a single CS-2 can support models with hundreds of billions even trillions of parameters.”
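One way to picture what separating parameter storage from compute means is the toy sketch below. It is not the CSoft API and does not describe how Cerebras implements Weight Streaming; every name in it is hypothetical. It keeps all layer weights in an external store and brings in one layer at a time for the forward pass, so the weights resident on the "compute" side are bounded by the largest layer, not by the total model size.

```python
# Toy illustration only: names and structure are hypothetical, not Cerebras code.

def make_weight_store(layer_sizes):
    """Build an external parameter store: one weight matrix per layer."""
    store = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        store.append([[0.01 * (i + j + 1) for j in range(n_out)] for i in range(n_in)])
    return store


def matvec(x, w):
    """Multiply row vector x (length n_in) by matrix w (n_in x n_out)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def streamed_forward(x, store):
    """Run a forward pass, fetching one layer's weights from the store at a time.

    Returns the output and the peak number of weights resident at once.
    """
    peak_resident = 0
    for w in store:  # only this layer's weights are "on device" in this step
        peak_resident = max(peak_resident, len(w) * len(w[0]))
        x = [max(0.0, v) for v in matvec(x, w)]  # ReLU activation
    return x, peak_resident


if __name__ == "__main__":
    store = make_weight_store([4, 8, 8, 2])
    total = sum(len(w) * len(w[0]) for w in store)
    out, peak = streamed_forward([1.0] * 4, store)
    print(f"total weights: {total}, peak resident: {peak}, output size: {len(out)}")
```

In this sketch, adding more layers grows the external store but leaves the peak resident weight count unchanged, which mirrors the claim that memory can grow separately from compute.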

“Cerebras’ ability to bring large language models to the masses with cost-efficient, easy access opens up an exciting new era in AI. It gives organizations that can’t spend tens of millions an easy and inexpensive on-ramp to major league NLP,” said Dan Olds, chief research officer, Intersect360 Research. “It will be interesting to see the new applications and discoveries CS-2 customers make as they train GPT-3 and GPT-J class models on massive datasets.”

Cerebras said its customers include GlaxoSmithKline, AstraZeneca, TotalEnergies, nference, Argonne National Laboratory, Lawrence Livermore National Laboratory, Pittsburgh Supercomputing Center, Leibniz Supercomputing Centre, National Center for Supercomputing Applications, Edinburgh Parallel Computing Centre (EPCC), National Energy Technology Laboratory, and Tokyo Electron Devices.