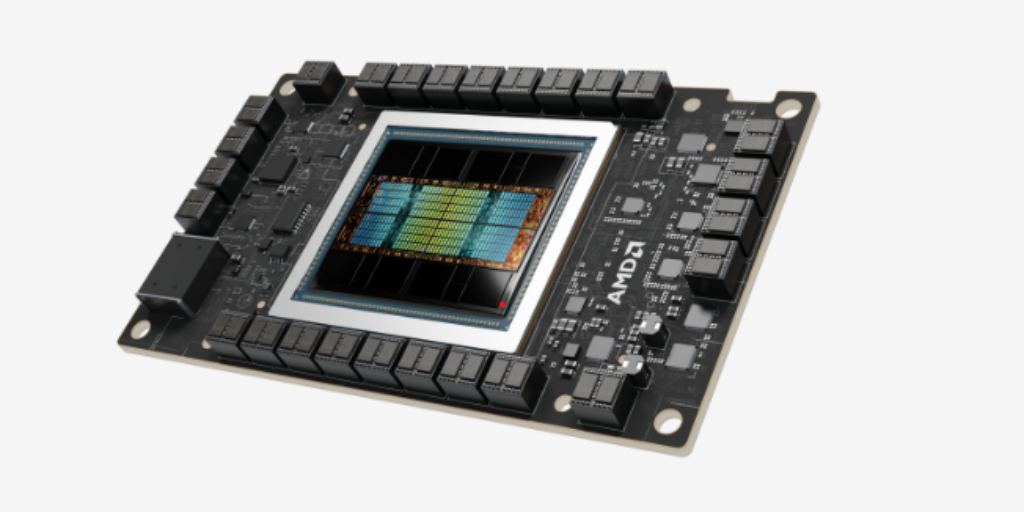

After years of NVIDIA dominating the GPU AI chip market (a market that has exploded over the last 12 months with the emergence of generative AI), AMD today announced the availability of its long-awaited and much-previewed MI300X accelerator chip (pictured here), which AMD intends to be a worthy competitor to NVIDIA’s flagship H100 GPUs.

AMD said the chip offers industry-leading memory bandwidth for generative AI and leadership performance for large language model (LLM) training and inferencing. The company also announced the AMD Instinct MI300A accelerated processing unit (APU), which combines the latest AMD CDNA 3 architecture with “Zen 4” CPU cores for HPC and AI workloads.

In its announcements, AMD said Instinct MI300X accelerators are powered by the new AMD CDNA 3 architecture and that, compared with Instinct MI250X accelerators, the new chip delivers nearly 40 percent more compute units, 1.5x more memory capacity and 1.7x more peak theoretical memory bandwidth, as well as support for new math formats such as FP8 and for sparsity.
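As a rough check on those generational ratios, the sketch below divides MI300X peak specs by MI250X ones. The MI250X figures (220 compute units, 128 GB, 3.2 TB/s) and the MI300X compute-unit count (304) are published AMD specs assumed here for illustration; they are not stated in this article.

```python
# Assumed published peak specs (not taken from this article):
# MI250X: 220 CUs, 128 GB HBM2e, 3.2 TB/s; MI300X: 304 CUs, 192 GB HBM3, 5.3 TB/s.
MI250X = {"compute_units": 220, "memory_gb": 128, "bandwidth_tbs": 3.2}
MI300X = {"compute_units": 304, "memory_gb": 192, "bandwidth_tbs": 5.3}


def ratios(new: dict, old: dict) -> dict:
    """Return the new/old ratio for every spec present in the old dict."""
    return {key: new[key] / old[key] for key in old}


r = ratios(MI300X, MI250X)
for key, value in r.items():
    print(f"{key}: {value:.2f}x")
```

The compute-unit ratio comes out near 1.38x ("nearly 40 percent more"), memory capacity at exactly 1.5x, and bandwidth at roughly 1.66x, which AMD rounds to 1.7x.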

The company said Instinct MI300X accelerators have 192 GB of HBM3 memory capacity and 5.3 TB/s of peak memory bandwidth. AMD said the AMD Instinct Platform is a generative AI platform built on an industry-standard OCP design with eight MI300X accelerators, offering 1.5 TB of HBM3 memory capacity. The company said the industry-standard design allows OEMs to design MI300X accelerators into existing AI offerings and simplifies deployment of AMD Instinct accelerator-based servers.
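The 1.5 TB platform figure follows directly from the per-accelerator capacity. A minimal sketch of that arithmetic, using only the numbers quoted above:

```python
ACCELERATORS_PER_PLATFORM = 8  # eight MI300X accelerators per AMD Instinct Platform
HBM3_PER_MI300X_GB = 192       # per-accelerator HBM3 capacity quoted by AMD

total_gb = ACCELERATORS_PER_PLATFORM * HBM3_PER_MI300X_GB
total_tb = total_gb / 1000  # decimal terabytes; dividing by 1024 instead gives exactly 1.5
print(f"{total_gb} GB, about {total_tb:.1f} TB")
```

Whether capacity is counted in decimal (1.536 TB) or binary (1.5 TiB) units, the result rounds to the 1.5 TB AMD quotes.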

Compared with the NVIDIA H100 HGX, the AMD Instinct Platform “can offer a throughput increase of up to 1.6x when running inference on LLMs like BLOOM 176B and is the only option on the market capable of running inference for a 70B parameter model, like Llama2, on a single MI300X accelerator; simplifying enterprise-class LLM deployments and enabling outstanding TCO,” according to AMD.
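The single-accelerator claim comes down to memory capacity. The sketch below estimates the weight footprint of a 70B-parameter model; it assumes 2 bytes per parameter for FP16 and 1 byte for FP8, and it deliberately ignores KV cache, activations and framework overhead, so real deployments need extra headroom.

```python
# Assumption: only model weights are counted; KV cache, activations and
# framework overhead are ignored, so actual memory use will be higher.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}
MI300X_HBM_GB = 192  # per-accelerator HBM3 capacity quoted by AMD


def weights_gb(num_params: float, dtype: str) -> float:
    """Approximate weight footprint in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


LLAMA2_70B_PARAMS = 70e9

for dtype in BYTES_PER_PARAM:
    need = weights_gb(LLAMA2_70B_PARAMS, dtype)
    fits = need <= MI300X_HBM_GB
    print(f"{dtype}: ~{need:.0f} GB of weights; fits in {MI300X_HBM_GB} GB: {fits}")
```

At FP16 the weights alone come to about 140 GB, which fits within one MI300X's 192 GB but exceeds the 80 GB of an H100 SXM accelerator. AMD's comparison therefore appears to assume 16-bit weights; at FP8 the weights would shrink to roughly 70 GB.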

Lisa Su at AMD MI300 launch event in San Jose

AMD called the Instinct MI300A the first data center APU for HPC and AI, leveraging 3D packaging and the 4th Gen AMD Infinity Architecture. MI300A APUs combine AMD CDNA 3 GPU cores, the latest AMD “Zen 4” x86-based CPU cores and 128 GB of next-generation HBM3 memory to deliver ~1.9x the performance-per-watt on FP32 HPC and AI workloads compared with the previous-generation AMD Instinct MI250X.

AMD said MI300A APUs benefit from integrating CPU and GPU cores on a single package, adding that the company aims to deliver a 30x energy efficiency improvement in server processors and accelerators for AI training and HPC from 2020 to 2025.
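To put a 30x target in perspective, the sketch below computes the constant yearly improvement that would compound to 30x. It assumes a five-year window from 2020 to 2025 (AMD's publicly stated "30x25" goal) and smooth year-over-year gains, which real product cycles will not follow exactly.

```python
def annual_factor(total_gain: float, years: int) -> float:
    """Constant yearly multiplier that compounds to total_gain over `years` years."""
    return total_gain ** (1 / years)


# Assumed window: 2020 through 2025, i.e. five years of compounding.
factor = annual_factor(30, 2025 - 2020)
print(f"{factor:.2f}x per year")
```

The result is a little under 2x per year, meaning efficiency would have to roughly double every year for five years running.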

“AMD Instinct MI300 Series accelerators are designed with our most advanced technologies, delivering leadership performance, and will be in large scale cloud and enterprise deployments,” said Victor Peng, president, AMD. “By leveraging our leadership hardware, software and open ecosystem approach, cloud providers, OEMs and ODMs are bringing to market technologies that empower enterprises to adopt and deploy AI-powered solutions.”

Customers leveraging the latest AMD Instinct accelerator portfolio include Microsoft, which recently announced the new Azure ND MI300X v5 Virtual Machine (VM) series, optimized for AI workloads and powered by AMD Instinct MI300X accelerators. Additionally, El Capitan, a supercomputer powered by AMD Instinct MI300A APUs and housed at Lawrence Livermore National Laboratory, is expected to be the second exascale-class supercomputer powered by AMD and to deliver more than 2 exaflops of double-precision performance when fully deployed.

Oracle Cloud Infrastructure plans to add AMD Instinct MI300X-based bare metal instances to the company’s high-performance accelerated computing instances for AI. MI300X-based instances are planned to support OCI Supercluster with ultrafast RDMA networking.

Several major OEMs also showcased accelerated computing systems in tandem with the AMD Advancing AI event. Dell showed the Dell PowerEdge XE9680 server featuring eight AMD Instinct MI300 Series accelerators and the new Dell Validated Design for Generative AI with AMD ROCm-powered AI frameworks. HPE recently announced the HPE Cray Supercomputing EX255a, the first supercomputing accelerator blade powered by AMD Instinct MI300A APUs, which will become available in early 2024. Lenovo announced its design support for the new AMD Instinct MI300 Series accelerators, with planned availability in the first half of 2024. Supermicro announced new additions to its H13 generation of accelerated servers powered by 4th Gen AMD EPYC CPUs and AMD Instinct MI300 Series accelerators.

AMD also announced the latest ROCm 6 open software platform as well as the company’s commitment to contribute libraries to the open-source community. The company said “ROCm 6 software represents a significant leap forward for AMD software tools, increasing AI acceleration performance by ~8x when running on MI300 Series accelerators in Llama 2 text generation compared to previous generation hardware and software. Additionally, ROCm 6 adds support for several new key features for generative AI including FlashAttention, HIPGraph and vLLM, among others. As such, AMD is uniquely positioned to leverage the most broadly used open-source AI software models, algorithms and frameworks – such as Hugging Face, PyTorch, TensorFlow and others – driving innovation, simplifying the deployment of AMD AI solutions and unlocking the true potential of generative AI.”