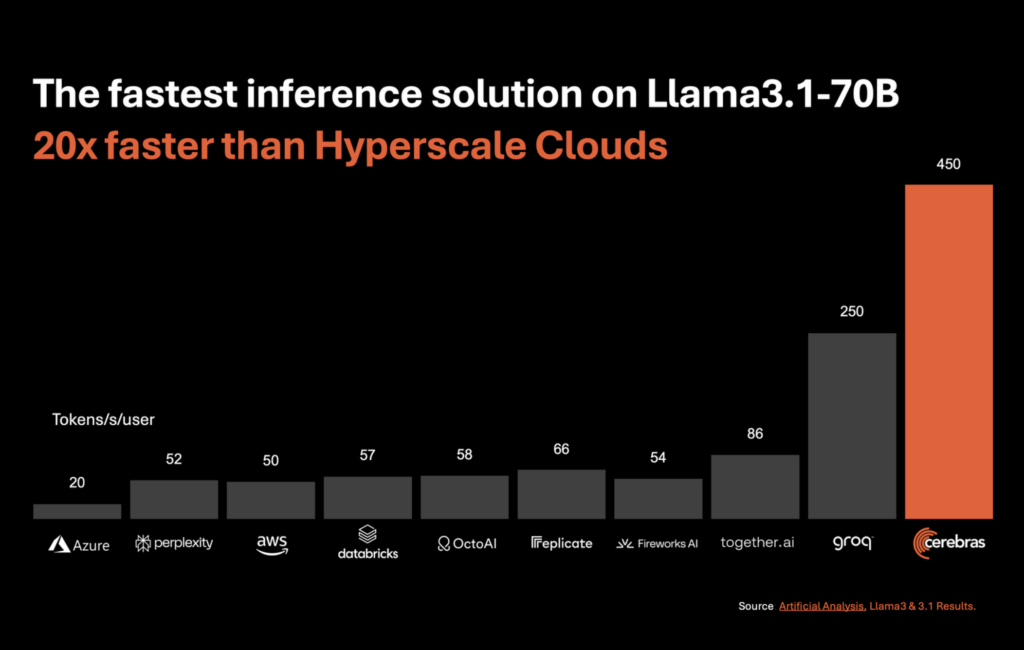

AI compute company Cerebras Systems today announced what it said is the fastest AI inference solution. Cerebras Inference delivers 1,800 tokens per second for Llama3.1 8B and 450 tokens per second for Llama3.1 70B, according to the company, making it 20 times faster than GPU-based solutions in hyperscale clouds.

“Starting at 10 cents per million tokens, Cerebras Inference is priced at a fraction of GPU solutions, providing 100x higher price-performance for AI workloads,” said the company.

Cerebras also said that “unlike alternative approaches that compromise accuracy for performance, Cerebras offers the fastest performance while maintaining state of the art accuracy by staying in the 16-bit domain for the entire inference run.”

Cerebras Inference is powered by the Cerebras CS-3 system and its AI processor, the Wafer Scale Engine 3, which has 7,000x more memory bandwidth than competing GPUs, solving “generative AI’s fundamental technical challenge: memory bandwidth,” Cerebras said.

Cerebras Wafter Scale Engine-3

“Cerebras has taken the lead in Artificial Analysis’ AI inference benchmarks,” said Micah Hill-Smith, co-founder and CEO of Artificial Analysis. “Cerebras is delivering speeds an order of magnitude faster than GPU-based solutions for Meta’s Llama 3.1 8B and 70B AI models. We are measuring speeds above 1,800 output tokens per second on Llama 3.1 8B, and above 446 output tokens per second on Llama 3.1 70B – a new record in these benchmarks.

“Artificial Analysis has verified that Llama 3.1 8B and 70B on Cerebras Inference achieve quality evaluation results in line with native 16-bit precision per Meta’s official versions. With speeds that push the performance frontier and competitive pricing, Cerebras Inference is particularly compelling for developers of AI applications with real-time or high volume requirements,” Hill-Smith said.

Inference is the fastest growing segment of AI compute and constitutes approximately 40 percent of the total AI hardware market, Cerebras said. The advent of high-speed AI inference, exceeding 1,000 tokens per second, is comparable to the introduction of broadband internet.

“DeepLearning.AI has multiple agentic workflows that require prompting an LLM repeatedly to get a result. Cerebras has built an impressively fast inference capability which will be very helpful to such workloads,” said Dr. Andrew Ng, Founder of DeepLearning.AI.

source: Cerebras

“For traditional search engines, we know that lower latencies drive higher user engagement and that instant results have changed the way people interact with search and with the internet,” said Denis Yarats, CTO and co-founder of Perplexity. “At Perplexity, we believe ultra-fast inference speeds like what Cerebras is demonstrating can have a similar unlock for user interaction with the future of search – intelligent answer engines.”

Cerebras has three price tiers:

- The Free Tier offers free API access to anyone who logs in.

- The Developer Tier, designed for serverless deployment, provides users with an API endpoint with Llama 3.1 8B and 70B models priced at 10 cents and 60 cents per million tokens, respectively, on a pay-as-you-go basis. Cerebras plans to extend support to more models.

- The Enterprise Tier offers fine-tuned models, custom service level agreements, and dedicated support. Designed for sustained workloads, enterprises can access Cerebras Inference via a Cerebras-managed private cloud or on customer premise.

Developers can access the Cerebras Inference API, which is compatible with the OpenAI Chat Completions API.

Speak Your Mind