This article is part of the Five Essential Strategies for Successful HPC Clusters series, which was written to help managers, administrators, and users manage heterogeneous hardware and software inside their HPC clusters.

Almost all clusters expand after their initial installation, and the original nodes often stay in service alongside the new ones. One reason for this longevity is that processor clock speeds are no longer increasing as quickly as they did in the past. Instead of faster clocks, processors now have multiple cores. Thus, many older nodes still deliver acceptable performance (from a CPU clock perspective) and can do useful work. Newer nodes often have more cores per processor and more memory. In addition, many clusters now employ accelerators from NVIDIA or AMD, or coprocessors from Intel, on some or all nodes. Different types of nodes may also be connected by different networks (1 or 10 Gigabit Ethernet, or InfiniBand).

Another cluster trend is the use of memory aggregation tools such as ScaleMP. These tools allow multiple cluster nodes to be combined into a large virtual Symmetric Multiprocessing (SMP) node. To the end user, the combined nodes look like a large SMP node. Oftentimes, these aggregated systems are allocated on traditional clusters for a period of time and released when the user has completed their work, returning the nodes to the general node pool of the workload scheduler.

The combined spectrum of heterogeneous hardware and software often requires considerable expertise for successful operation. Many of the standard open source tools make no provision for managing or monitoring these heterogeneous systems. Vendors often provide libraries and monitoring tools that work with their specific accelerator platform, but make no effort to integrate them into any of the open source tools. To monitor these new components, the administrator must “glue” the vendor tools into whatever monitoring system is used for the cluster, which typically means Ganglia and Nagios. Although these systems provide hooks for custom checks, it is still incumbent upon the administrator to create, test, and maintain those checks for their environment.
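To give a sense of what this “glue” looks like in practice, here is a minimal sketch of a Nagios-style check for GPU temperature. It assumes the NVIDIA `nvidia-smi` utility is installed on the node and follows the usual Nagios plugin convention of one status line plus an exit code (0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN). The thresholds, the `GPU_TEMP` label, and the function names are illustrative choices, not part of any vendor tool.

```python
import subprocess

# Exit codes defined by the Nagios plugin convention.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3

# Hypothetical thresholds in degrees Celsius; tune these for your hardware.
WARN_C = 80
CRIT_C = 90


def parse_temperatures(csv_text):
    """Parse 'index, temperature' lines into a {gpu_index: temp_c} dict."""
    temps = {}
    for line in csv_text.strip().splitlines():
        index, temp = (field.strip() for field in line.split(","))
        temps[int(index)] = int(temp)
    return temps


def evaluate(temps, warn=WARN_C, crit=CRIT_C):
    """Return (exit_code, status_line) based on the hottest GPU."""
    if not temps:
        return UNKNOWN, "GPU_TEMP UNKNOWN - no GPUs reported"
    hottest = max(temps.values())
    if hottest >= crit:
        code, label = CRITICAL, "CRITICAL"
    elif hottest >= warn:
        code, label = WARNING, "WARNING"
    else:
        code, label = OK, "OK"
    # Performance data after the '|' lets the monitoring system graph values.
    perfdata = " ".join(
        f"gpu{i}={t};{warn};{crit}" for i, t in sorted(temps.items())
    )
    return code, f"GPU_TEMP {label} - hottest GPU at {hottest}C | {perfdata}"


def main():
    """Query nvidia-smi, print the status line, and return the exit code."""
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=index,temperature.gpu",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True, timeout=10,
        )
        code, message = evaluate(parse_temperatures(result.stdout))
    except (OSError, subprocess.SubprocessError, ValueError) as exc:
        # Missing binary, non-zero exit, timeout, or unparseable output.
        code, message = UNKNOWN, f"GPU_TEMP UNKNOWN - {exc}"
    print(message)
    return code


# Demonstration with sample text instead of a live nvidia-smi call.
sample_code, sample_line = evaluate(parse_temperatures("0, 45\n1, 83\n"))
print(sample_code, sample_line)
```

A deployed plugin would end with `sys.exit(main())` rather than the sample call, so that the scheduler of checks receives the exit code. Even a check this small has to be installed on every GPU node, kept in step with driver updates, and repeated for each additional vendor and metric, which is the maintenance burden described above.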

Managing virtualized environments, like ScaleMP, can be similarly cumbersome for administrators. Nodes need the correct drivers and configuration before users can take advantage of the large virtual SMP capabilities.

Heterogeneity may extend beyond a single cluster because many organizations have multiple clusters, each with its own management environment. There may be some overlap of tools, but older systems may be running older software and tools for compatibility reasons. This situation often creates “custom administrators” who face a steep learning curve when trying to assist with or take over a new cluster. Each cluster may have its own management style, which further taxes administrators.

Ideally, cluster administrators would like a “plug-and-play” environment for new cluster hardware and software, eliminating the need to weave new hardware into an existing monitoring system or to automate node aggregation tools with scripts. Bright Cluster Manager is the only solution that offers this capability for clusters. All common cluster hardware is supported by a single, highly efficient monitoring daemon. Data collected by the node daemons are captured in a single database and summarized according to the site’s preferences. There is no need to customize an existing monitoring framework. New hardware and even new virtual ScaleMP nodes automatically show up in the familiar management interface.

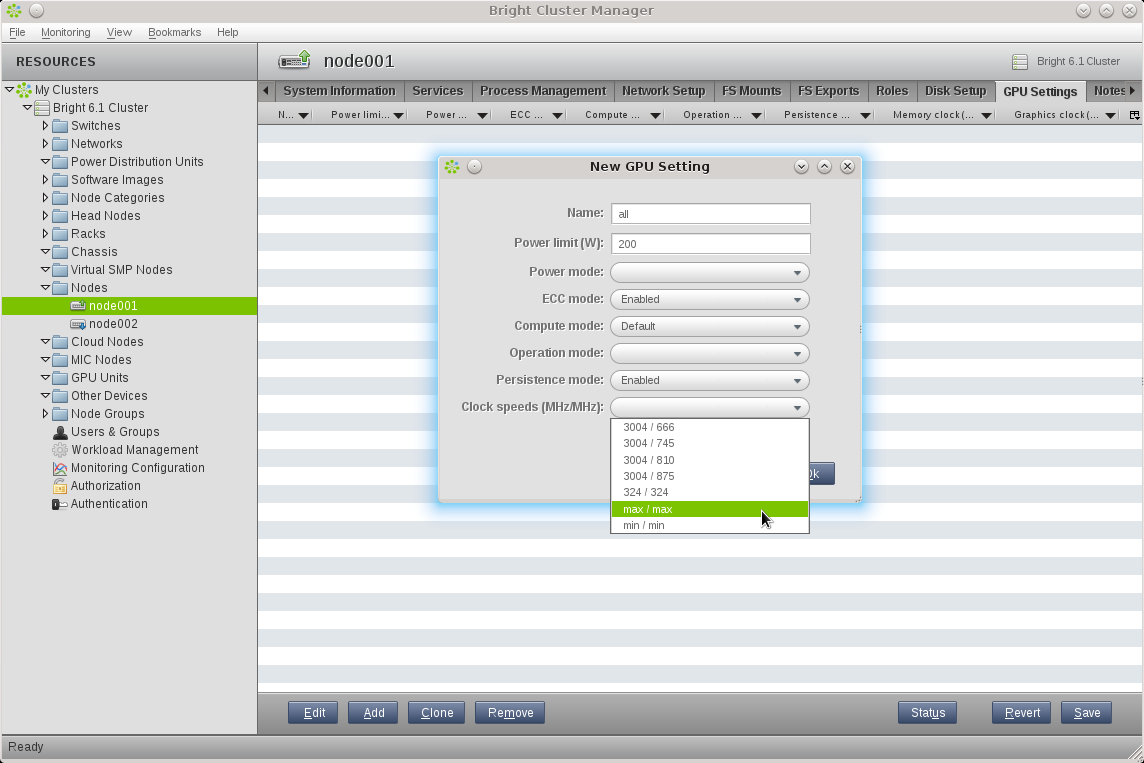

As shown in Figure 3, Bright Cluster Manager fully integrates NVIDIA GPUs and provides alerts and actions for metrics such as GPU temperatures, GPU exclusivity modes, GPU fan speeds, system fan speeds, PSU voltages and currents, system LED states, and GPU ECC statistics (Fermi GPUs only). Similarly, Bright Cluster Manager includes everything needed to enable Intel Xeon Phi coprocessors in a cluster using easy-to-install packages that provide the necessary drivers, SDK, flash utilities, and runtime environment. Essential metrics are also provided as part of the monitoring interface.

Bright Cluster Manager manages accelerators and coprocessors used in hybrid-architecture systems for HPC. Bright allows direct access to the NVIDIA GPU Boost technology through clock-speed settings.

Multiple heterogeneous clusters are also supported as part of Bright Cluster Manager. There is no need to learn (or develop) a separate set of tools for each cluster in your organization.

Bright Computing’s integration with ScaleMP’s vSMP Foundation means that creating and dismantling a virtual SMP node can be achieved with just a few clicks in the cluster management GUI. Virtual SMP nodes can even be built and launched automatically on-the-fly using the scripting capabilities of the cluster management shell. In a Bright cluster, virtual SMP nodes can be provisioned, monitored, used in the workload management system, and have health checks running on them – just like any other node in the cluster.

Recommendations for Managing Heterogeneity

- Make sure you can monitor all hardware in the cluster. Without the ability to monitor accelerators or coprocessors, the cluster can become unstable for users and difficult for administrators to manage.

- Heterogeneous hardware is now present in all clusters. Flexible software provisioning and monitoring capabilities for all components are essential. Try to avoid custom scripts that may only work for specific versions of hardware. Many open source tools can be customized for specific hardware scenarios, thus minimizing the amount of custom work.

- Make sure your management environment is the same across all clusters in your organization. A heterogeneous management environment creates a dependency on specialized administrators and reduces the ability to efficiently manage all clusters in the same way. No management solution should interfere with HPC workloads.

- Consider a versatile and robust tool like Bright Cluster Manager with its single comprehensive GUI and CLI. All popular HPC cluster hardware and software technologies can be managed with the same administration skills. The capability to smoothly manage heterogeneous hardware and software is not available in any other management tool.

Next week’s article will look at Preparing for HPC Cloud. If you prefer, you can download the entire insideHPC Guide to Successful HPC Clusters, courtesy of Bright Computing, by visiting the insideHPC White Paper Library.