From the first teraflop to the 3D Internet: an exclusive profile with Intel CTO Justin Rattner

Intel’s CTO Justin Rattner will be giving the opening address at SC09 in Portland next month, and he’ll be talking about the 3D Internet and his view that it could be an inflection point: the moment when HPC “goes consumer.” But Rattner’s appearance at SC this year isn’t just about the 3D Internet, and his selection wasn’t just about picking one of the most influential suits in the tech world. As one of the pioneers of the modern era of parallel supercomputing, he is an ideal choice to deliver the SC09 opening address.

Rattner’s career in HPC goes all the way back to the introduction — and eventual proof — of the idea that supercomputers should be built out of thousands of smaller processors rather than a few very powerful processors. Rattner led the collaboration that built the Touchstone Delta, and then the machine that was the first in the world to top one trillion floating point operations per second on the Linpack: ASCI Red. In this exclusive insideHPC feature interview, we talk with Rattner about his career to get a view of where HPC has come from, and where it is going next.

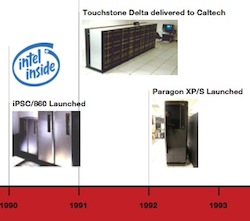

Rattner joined Intel in 1973 (see our exclusive visual timeline of Justin Rattner’s career by clicking on the image to the right) after holding positions with Hewlett-Packard and Xerox, beginning a remarkable career at the world’s largest chip manufacturer. He was named Intel’s first Principal Engineer in 1979, its fourth Fellow in 1988, and, by 2005, its Chief Technology Officer. His title today reflects a career with far more victories than defeats: Intel Senior Fellow, Vice President, Director of Intel Labs, and Chief Technology Officer of Intel Corporation.

But in a career with so many high points, Rattner still thinks of the moment in 1996 when ASCI Red topped 1 TFLOPS on the Linpack as one of his proudest accomplishments.

“…9 out of 10 people in the audience thought I had lost my mind.”

The idea was radical at the time, Rattner says: not only could one build a supercomputer out of many relatively small processors, but if you wanted to top 1 TFLOPS of sustained performance at anything near an affordable cost, you had to build a supercomputer that way. The introduction, and eventual proof, of this concept still topped Rattner’s list of accomplishments when I asked him about it in late September 2009.

“When I started out with this idea in 1984, Cray and vector supercomputing was dominant,” Rattner says. “In 1984 or 1985 I gave a talk on the idea that very large ensembles of microprocessors would eventually replace complicated vector processors in supercomputers. I think 9 out of 10 people in the audience thought I had lost my mind.”

At the time the computing community was responding to an increased national focus on high performance computing, catalyzed by a series of early-1980s reports calling for more funding for scientific computing after the neglect of the 1970s. The most influential of these was a joint agency study edited by Peter Lax (yes, that Peter Lax) and released in December 1982. Reading the first few pages of this report, one finds language quite similar to that of nearly every blue ribbon report since:

The Panel believes that under current conditions there is little likelihood that the U.S. will lead in the development and application of this new generation of machines. Factors inhibiting the necessary research and advanced development are the length and expense of the development cycle for a new computer architecture, and the uncertainty of the market place. Very high performance computing is a case where maximizing short-term return on capital does not reflect the national security or the long-term national economic interest. The Japanese thrust in this area, through its public funding, acknowledges this reality.

(It seems that the call for increased national attention to HPC funding is not at all a recent phenomenon.) The Lax report led to the establishment of several national HPC programs, including the NSF Supercomputer Centers program that created the five centers at Cornell, NCSA, Pittsburgh, SDSC, and Princeton. In the manner of the recent PFLOPS challenge, the report also called for the creation of supercomputers “several orders of magnitude” more powerful than the computers of the day. The general consensus was that teraflops systems were the goal to shoot for, and meeting this challenge was the impetus within Intel for the creation of Intel Scientific Computers (which later became Intel’s Supercomputing Systems Division). Justin Rattner was the director of technology for this effort, responsible for setting Intel on the path that would eventually answer the TFLOPS challenge.

The first system to 1 teraFLOPS

The ASCI Red system, installed at Sandia National Laboratories, was the first step in the ASCI Platforms Strategy to increase scientific computing power by five orders of magnitude by the early 2000s. From the description of ASCI Red on the Top500 site:

The original incarnation of this machine used Intel Pentium Pro processors, each clocked at 200 MHz. These were later upgraded to Pentium II OverDrive processors. The final system had a total of 9298 Pentium II OverDrive processors, each clocked at 333 MHz. The system consisted of 104 cabinets, taking up about 2500 square feet (230 m²). The system was designed to use commodity mass-market components and to be very scalable.
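As a rough cross-check on these specifications, theoretical peak is the product of processor count, clock rate, and floating-point operations per cycle. The sketch below assumes one double-precision FLOP per cycle per processor, a figure that is not given in the description above; sustained Linpack performance is always some fraction of such a peak.

```python
# Back-of-the-envelope theoretical peak for the final ASCI Red configuration.
# ASSUMPTION: one double-precision FLOP per clock cycle per processor
# (not stated in the Top500 description quoted above).

def peak_gflops(processors: int, clock_mhz: float, flops_per_cycle: float) -> float:
    """Theoretical peak in GFLOPS: processors x clock rate x FLOPs per cycle."""
    return processors * clock_mhz * 1e6 * flops_per_cycle / 1e9


if __name__ == "__main__":
    estimate = peak_gflops(processors=9298, clock_mhz=333, flops_per_cycle=1)
    print(f"Estimated peak: {estimate:.1f} GFLOPS")  # Estimated peak: 3096.2 GFLOPS
```

Changing `flops_per_cycle` shows how strongly such estimates depend on that single assumption.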

Working on ASCI Red was an unusual experience for Rattner because it brought him into close contact with the machine’s end users. “It was a very rewarding experience because we got to work directly with end users.” Some of those users attended a 2006 ceremony at which the system was decommissioned after nine years of service. “It was a very emotional event,” says Rattner. “Users at the ceremony told stories of the machine and the science that it allowed them to do, remembering the machine almost as if it was a friend or colleague.”

The HPC industry at the time was very diverse, and companies like Thinking Machines, nCube, MasPar, Kendall Square Research, Floating Point Systems, and others were trying out all kinds of new ideas. Looking back, Rattner recalls that for him the real competition was between Intel and Danny Hillis’ Thinking Machines. Although he describes that rivalry as a “war,” Rattner credits Hillis as a significant influence on the technology of the time, helping the MIMD, massively parallel approach that we use today win the active (and sometimes acrimonious) MIMD/SIMD debate of the day.

Rattner was also influenced by other stars of the HPC community, such as Alliant’s Craig Mundie (currently Chief Research and Strategy Officer at Microsoft), Tera’s Burton Smith (Microsoft Technical Fellow), and Convex’s Steve Wallach (co-founder of HPC startup Convey Computer). Of Wallach, Rattner says: “Steve pushes the industry because he fundamentally questions whether massively parallel programming will ever be easy enough for a large audience to adopt.”

Rattner’s first fastest computer in the world

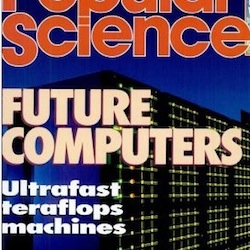

Intel’s Touchstone Delta, a DARPA-funded effort begun in 1989 that culminated in the installation of a single system at Caltech in 1991, was a very big deal in the community. The system was on the cover of Popular Science in 1992 and for a time was the fastest computer in the world (8.6 GFLOPS). According to Rattner, this was a highly influential moment in HPC. The fact that the Touchstone Delta, a MIMD design based on Intel’s i860 processor, outperformed vector machines from companies like Cray proved that MIMD designs not only worked in theory but could be programmed to be incredibly fast in practice.

Rattner says that at the time he argued that vector machines, which were supposedly easy to program and were easier to build than MIMD machines, were “great machines for running bad algorithms. The machines would get great scaling numbers on algorithms that were very complex and had really long run times. MIMD computers could run a wider range of algorithms than vector systems, and even though they achieved lower efficiencies they could still solve problems with a much lower time to solution.”
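Rattner’s argument can be made concrete with a toy model in which time to solution is the operation count divided by the sustained rate (peak times efficiency). Every number below is hypothetical, chosen only to illustrate how a better algorithm running at low efficiency can still finish first; none of the values comes from Rattner or from measurements of real machines.

```python
import math

# Toy model: time to solution = operations / (peak rate x fraction of peak sustained).
# All inputs are HYPOTHETICAL illustrations, not measurements of real systems.

def time_to_solution(ops: float, peak_flops: float, efficiency: float) -> float:
    """Seconds needed to perform `ops` operations at `efficiency` x `peak_flops`."""
    return ops / (peak_flops * efficiency)


def compare(n: int, peak_flops: float = 1e10) -> tuple[float, float]:
    # A regular O(n^2) algorithm that streams well through a vector pipeline (80% of peak)...
    vector_time = time_to_solution(n ** 2, peak_flops, efficiency=0.8)
    # ...versus a less regular O(n log n) algorithm that sustains only 10% of peak.
    mimd_time = time_to_solution(n * math.log2(n), peak_flops, efficiency=0.1)
    return vector_time, mimd_time


if __name__ == "__main__":
    vector_time, mimd_time = compare(n=1_000_000)
    print(f"vector-style: {vector_time:.1f} s, MIMD-style: {mimd_time:.3f} s")
```

Both hypothetical machines share the same peak here, so the gap comes entirely from the algorithm’s operation count outweighing its lower efficiency.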

GPUs, exascale, and the HPC of tomorrow

Although Intel stopped producing commercial supercomputers in 1996, it hasn’t stopped shaping the industry. The architectures the company pioneered dominate today’s Top500 lists, and Intel chips were in 79.8% of all systems on the most recent list (June 2009). One challenger to the dominance of Intel’s processors in HPC today is the GPGPU. Rattner doesn’t see the GPU as a long-term solution, however. “For straight vector graphics GPUs work great, but next generation visual algorithms won’t compute efficiently” on the SIMD architecture of the GPU, he says, drawing a parallel between the vector-versus-Touchstone era and today’s processor/GPU debate. As he sees it, the GPU restricts the choice of algorithm to one that matches the architecture, not necessarily the one that provides the best solution to the problem at hand. “The goal of our next generation Larrabee is to take a MIMD approach to visual computing,” he says. (That’s a Larrabee die pictured at right, held by Intel’s Pat Gelsinger.) Part of Intel’s motivation for this decision is that the platform scales from mobile devices all the way up to supercomputers. Intel also has early performance results, to be presented at an IEEE conference later this year, showing Larrabee outperforming both Nehalem and NVIDIA’s GTX 280 on volumetric rendering problems.
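One way to see why a SIMD design can steer the choice of algorithm is a toy model of branch divergence. The sketch below assumes, as a simplification rather than a description of any particular GPU, that the lanes of a SIMD group execute in lockstep, so the group must run every branch path that any of its lanes takes while the other lanes sit masked off; a MIMD core, by contrast, runs only its own path. The path names and cycle costs are hypothetical.

```python
from typing import Mapping, Sequence

# Toy branch-divergence model. SIMPLIFYING ASSUMPTIONS (not from the article):
# each lane takes one named path with a fixed cycle cost, and a lockstep SIMD
# group executes every distinct path taken by any of its lanes.

def simd_group_cycles(lane_paths: Sequence[str], cost: Mapping[str, int]) -> int:
    """Cycles for one lockstep group: each distinct path runs once for all lanes."""
    return sum(cost[p] for p in set(lane_paths))


def simd_utilization(lane_paths: Sequence[str], cost: Mapping[str, int]) -> float:
    """Fraction of lane-cycles doing useful work; the rest are masked off."""
    useful = sum(cost[p] for p in lane_paths)
    total = len(lane_paths) * simd_group_cycles(lane_paths, cost)
    return useful / total


if __name__ == "__main__":
    cost = {"cheap": 10, "expensive": 40}       # hypothetical path costs in cycles
    uniform = ["cheap"] * 8                      # all 8 lanes take the same branch
    divergent = ["cheap"] * 7 + ["expensive"]    # one lane takes the other branch
    print(simd_utilization(uniform, cost))       # 1.0
    print(simd_utilization(divergent, cost))     # 0.275
```

Under these assumptions a single lane taking the expensive branch drops useful work to about a quarter of the group’s lane-cycles, one reason branchy, data-dependent algorithms are often restructured for SIMD hardware. In the MIMD case of this model, no lane-cycles are masked off because each core runs only its own path.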

Thinking about the current search for exascale computers, Rattner says it’s much too early to know what those systems will look like. “The energy challenge is the main obstacle,” he says, adding that “business as usual won’t get us there unless someone is prepared to build an exascale computer next to a nuclear power station.” He anticipates that a lot of new science will need to be developed in every aspect of computing to build exascale systems that require tens, not hundreds, of megawatts of power to run. What role will Intel play in the exascale future? “We had a paper at the last International Solid-State Circuits Conference that demonstrated the highest-ever reported energy efficiency. We were able to reach 490 Gops/W.” (Note: that paper was “Ultra-low Voltage Microprocessor Design: Challenges and Solutions,” presented by Ram Krishnamurthy; see the conference program.) But Rattner points out that designs like this are so radical that Intel isn’t yet sure what they imply for programming models and tools that work effectively on them, or indeed for building computers out of them. “Chips that operate at these low voltages have failure modes that are beyond what we are accustomed to dealing with,” he says. “Some of those failure modes can be managed in hardware and firmware, but some will probably have to be managed by the applications themselves.”
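The power target Rattner describes translates directly into a required energy efficiency: sustained FLOPS divided by watts. The sketch assumes a sustained 10^18 FLOPS and uses 20 MW and 200 MW as illustrative stand-ins for “tens” and “hundreds” of megawatts; those two budgets are assumptions, not figures from the interview. The 490 Gops/W quoted above measures operations on an ultra-low-voltage circuit, not double-precision FLOPS for a whole system, so it is not directly comparable.

```python
# Energy efficiency an exascale system would need under a given power budget.
# ASSUMPTIONS: 1e18 sustained FLOPS; 20 MW and 200 MW are illustrative budgets only.

EXAFLOPS = 1e18


def required_gflops_per_watt(target_flops: float, power_megawatts: float) -> float:
    """GFLOPS per watt needed to sustain `target_flops` within `power_megawatts`."""
    watts = power_megawatts * 1e6
    return target_flops / watts / 1e9


if __name__ == "__main__":
    for budget_mw in (20, 200):
        need = required_gflops_per_watt(EXAFLOPS, budget_mw)
        print(f"{budget_mw} MW budget -> {need:.0f} GFLOPS/W")
```

A tenfold smaller power budget demands tenfold better efficiency: 50 GFLOPS/W at 20 MW versus 5 GFLOPS/W at 200 MW.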

Rattner says that Intel is actively engaged in exascale research, and that it is the question of how much power it will take to get there that has brought the company back into HPC research. “Developing very low power computing has broad implications for our business,” says Rattner, “but it starts at the exascale in HPC. What we develop there will drive Intel’s future from mobile computing to supercomputers.”

SC09 and the 3D Internet

When Justin Rattner gives the opening address on Tuesday at SC09 in November, he won’t just be talking about the 3D Internet; he’ll also be demonstrating applications that he believes give us a glimpse of the future.

The 3D Internet is a concept that Intel believes is at the heart of a growing need to manage a deluge of data. As Rattner sees it, the (mostly) 2D Internet we have today provides a means of collaboration that will only grow more powerful as we move to an internet of 3D worlds driven by immersive, interactive, and complex visualizations of the exponentially increasing collection of unstructured data in the world around us. The data come from an incredible variety of sources — from still and video cameras to personal instrumentation systems and social networks — but approaches for managing the flow all boil down to one thing: computing, and lots of it. “This is how HPC goes consumer,” explains Rattner. “If the 3D, immersive experience becomes the dominant metaphor for how people experience the internet of tomorrow, we won’t have to worry about who will build the processors and computers that do HPC. Everyone will want to be a part of that.”

A picture of Rattner’s other HPC work (Intel iPSC) at the Computer History Museum:

http://www.facebook.com/photo.php?pid=569494&l=ddbd1ea01a&id=1066013684

ASCI Red had some initial hiccups but became a towering achievement. It was the first peak/Linpack TF machine, and the Cray T3E achieved the first TF on a real-world, 64-bit app. If I remember right, a DOE study in the late ’90s concluded that ASCI Red and the T3E were the only MPPs capable of scaling to large processor counts. That was an exciting era.