In this video, Gregor Matl from Team TUMuch Phun describes what his team learned at the SC15 Student Cluster Competition.

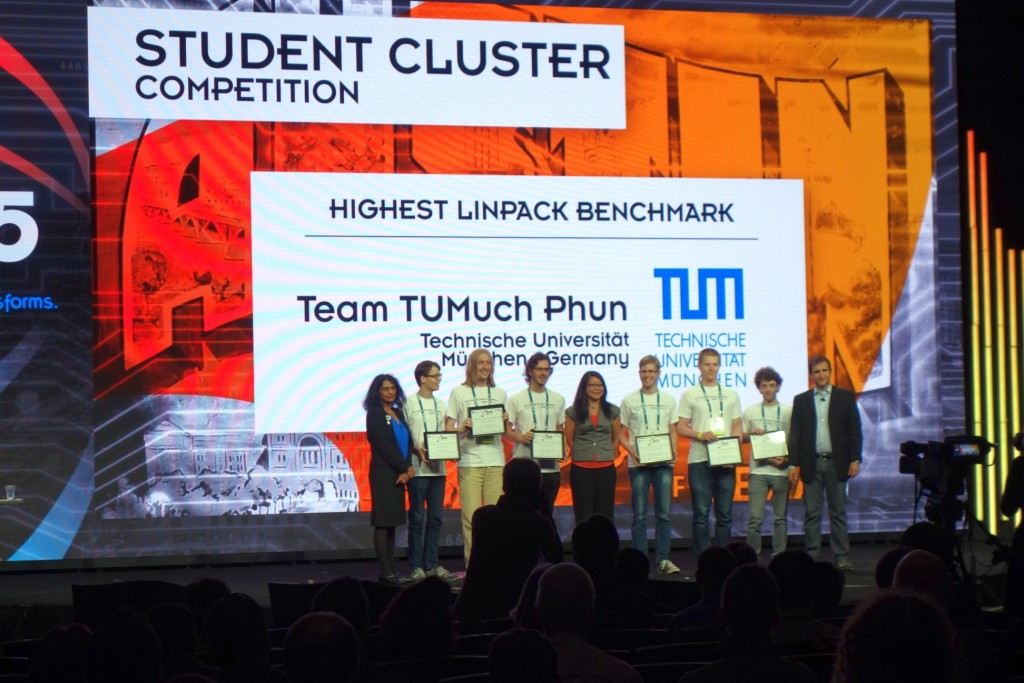

Today Russia’s RSC Group announced that Team TUMuch Phun from the Technical University of Munich (TUM) won the Highest Linpack Award in the SC15 Student Cluster Competition. The enthusiastic students achieved 7.1 Teraflops on the Linpack benchmark using an RSC PetaStream cluster with computing nodes based on Intel Xeon Phi. The TUM team took 3rd place overall among the 9 teams that participated in the SCC at SC15, and was the only European representative in the challenge.

The Student Cluster Competition, which debuted at SC07 in Reno and has since been replicated in Europe, Asia and Africa, is a real-time, non-stop, 48-hour challenge in which teams of six undergraduates race to complete a real-world workload across a series of scientific applications, demonstrate knowledge of system architecture and application performance, and impress HPC industry judges. The students partner with vendors to design and build a cutting-edge cluster from commercially available components that does not exceed a 3120-watt power limit, and they work with application experts to tune and run the competition codes.

“We thank RSC Group for their excellent technology support for our team TUMuch Phun during the preparation for SCC. The energy efficiency of their RSC PetaStream cluster with Intel Xeon Phi not only showed the highest Linpack performance in the competition, but also strongly contributed to our remarkable 3rd place overall,” said Michael Bader, Professor at TUM.

It’s important to mention that TUM was the only team that used the energy-efficient solution with Intel Xeon Phi, while all other student teams used CPU-only or GPU-based systems.

The applications that the German team ran on the RSC PetaStream system included:

- Linpack benchmark

- Trinity (a novel method for the efficient and robust de novo reconstruction of transcriptomes from RNA-seq data),

- WRF (Weather Research and Forecasting),

- MILC (a varied suite of applications designed to study Quantum Chromodynamics (QCD), the theory of Nature’s strong force),

- HPC Repast (an agent-based modeling system written in C++ that is useful in social science modeling to understand characteristics of a global population that are difficult to predict based only on the rules that govern the behavior of the agents),

- HPCG benchmark (High Performance Conjugate Gradient)

Performance Tools:

- Allinea Forge

- Allinea MAP

- Allinea DDT

- Allinea Performance Reports

“TUM team’s win of the Highest Linpack Award is one more practical confirmation of the very high performance and reliability of the RSC PetaStream massively parallel architecture, which also provides the best energy efficiency. We are very proud that our server equipment, software tools for cluster management and technological support provided by RSC helped to achieve such a high result in the Student Cluster Competition,” commented Alexander Moskovsky, CEO of RSC Technologies (RSC Group).

The German student team was equipped with a computing system containing one RSC PetaStream computing module (8 computing nodes, massively parallel architecture) and one RSC Breeze computing node (cluster architecture), sharing a high-speed Mellanox EDR InfiniBand interconnect and a data storage subsystem.

RSC PetaStream computing module:

- 8x nodes based on Intel Xeon Phi 7120 (61 cores, 16 GB GDDR5 memory)

- Peak performance of 9.66 Tflops

- 488 cores (x86 architecture)

- Total GDDR5 memory – 128 GB

- Mellanox InfiniBand ConnectX-4 Dual-port HCA adapters

RSC Breeze computing node:

- 2x Intel Xeon E5-2699 v3 processor (18 cores, 2.30 GHz each)

- Peak performance of 1.33 Tflops

- 128 GB of DDR4 memory

- Intel Server Board S2600WT

- Mellanox InfiniBand ConnectX-4 Dual-port HCA adapter

Joint SSD-based data storage subsystem, 7.2 TB:

- 3.2 TB – 2x Intel® SSD DC S3610 (SATA, 1.6 TB each)

- 4 TB – Intel® SSD DC P3608 (NVMe)

Software tools for cluster management:

- RSC BasIS integrated system software stack optimized for HPC environment and usage.

High speed communication network based on:

- Mellanox EDR InfiniBand SB7790 switch (36 ports).
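The totals quoted in the configuration above can be cross-checked with a little arithmetic. The Python sketch below uses only figures stated in this article. It rests on two assumptions that the article does not confirm: that the 7.1 Teraflops Linpack run used both the PetaStream module and the Breeze node, and that the system drew no more than the 3120-watt limit during that run. The function name `summarize` and the dictionary keys are illustrative only.

```python
# Figures copied from the article; nothing here is measured.
PHI_NODES = 8
CORES_PER_PHI = 61
GDDR5_GB_PER_PHI = 16
PETASTREAM_PEAK_TFLOPS = 9.66
BREEZE_PEAK_TFLOPS = 1.33
LINPACK_TFLOPS = 7.1
POWER_LIMIT_WATTS = 3120
SSD_TB = [1.6, 1.6, 4.0]  # 2x SSD DC S3610 plus 1x SSD DC P3608


def summarize():
    """Recompute the aggregate figures quoted in the hardware list."""
    combined_peak = PETASTREAM_PEAK_TFLOPS + BREEZE_PEAK_TFLOPS
    return {
        "phi_cores": PHI_NODES * CORES_PER_PHI,
        "gddr5_gb": PHI_NODES * GDDR5_GB_PER_PHI,
        "storage_tb": round(sum(SSD_TB), 2),
        "combined_peak_tflops": round(combined_peak, 2),
        # Assumption: Linpack ran on the PetaStream module and the Breeze node together.
        "linpack_efficiency": LINPACK_TFLOPS / combined_peak,
        # Assumption: power draw stayed at or below the limit, so this is a lower bound.
        "min_gflops_per_watt": LINPACK_TFLOPS * 1000 / POWER_LIMIT_WATTS,
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value}")
```

Under those assumptions the core, memory and storage totals match the listed 488 cores, 128 GB and 7.2 TB, the Linpack result works out to roughly 65 percent of the combined peak, and the energy efficiency is at least about 2.3 Gigaflops per watt.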

In addition to the TUM team from Germany, the SC15 Student Cluster Competition line-up included other student teams from around the world:

- Arizona Tri-University Team (United States)

- Illinois Institute of Technology (United States)

- National Tsing Hua University (Taiwan)

- Northeastern University (United States)

- Pawsey Supercomputing Centre (Australia)

- Tsinghua University (China)

- Universidad EAFIT (Colombia)

- University of Oklahoma (United States)
