In this special guest feature, Gabriel Broner from Rescale writes that Cloud is the next disruption in HPC.

Gabriel Broner is VP & GM of HPC at Rescale

In 1991, I joined Cray and had the opportunity to work on the machines Seymour Cray designed. I was working on the operating system and would often have to work alone on it at night, but the excitement of working on such unique systems kept me going. The Cray 1, XMP, and YMP represented a family of machines whose differentiated architecture and design allowed you to solve problems that you just couldn’t solve with a regular computer.

When I joined, Cray was considering building a new type of parallel machine that we called MPPs (massively parallel processors). I worked on the design and implementation of the operating system for the Cray T3E, a system of 2048 individual nodes built from standard CPU chips and memory and connected by a proprietary high-speed interconnect. Ahead of its time, Cray was building what we today call HPC clusters. Besides being a fantastic engineering project, it was the beginning of a disruption: going from proprietary Cray architectures to clusters of nodes with commodity parts.

I lived through a second disruption around the year 2000. I was leading software development at SGI, and we were planning to move from MIPS processors to Intel. At a time when Linux was still considered a hobbyist operating system, we at SGI took a risk by also moving to Linux during the migration, even though SGI’s IRIX was regarded as a great operating system at the time. Initially, customers were quite resistant to the change because Linux was viewed as offering less control than their proprietary OS. Over time, customers warmed up to the idea, other vendors followed, and Linux became the standard operating system for HPC, which made more applications readily available.

Over the years, HPC has transitioned from unique and proprietary designs to clusters of many dual-CPU Intel nodes. Vendors’ products are now differentiated more by packaging, density, and cooling than by the uniqueness of the architecture.

In parallel, cloud computing has gained momentum in the larger IT industry. Intel is now selling more processors to run in the cloud than in company-owned facilities, and cloud is starting to drive innovation and efficiencies at a rate faster than on premises.

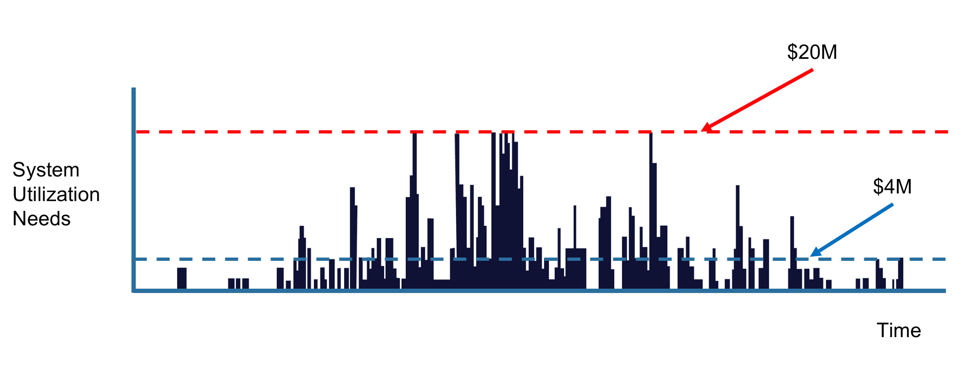

High performance computing has evolved on premises. You buy a computer for a few million dollars, and you are able to run simulations to reduce your innovation time and time to market for your products. The auto manufacturer depicted in Figure 1 illustrates the dilemma faced when buying such an in-house HPC system. With the workload this company has, what size system should they buy? If they buy a system that accommodates the peak workload, they may have to spend around $20M, but the system will be only 20% utilized. If they buy a $4M system, the system will be highly utilized, but large jobs cannot be run, and jobs will wait in a queue (potentially for days) before they run, delaying innovation and time to market.

Figure 1 – The Challenge of an auto manufacturer selecting the next HPC system

This auto manufacturer decided that neither option was acceptable, and instead chose to go with Rescale and run HPC in the cloud. They now pay between $50K and $100K per month for the IT they need when they need it, and they have instant access to a right-sized system without waiting, significantly improving design throughput and time to market. With the Rescale cloud platform, they get the best of both worlds: they spend monthly what they would have spent in depreciation of a $4M system, while they get the service of a $20M system.
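To make the monthly-cost comparison above concrete, here is a minimal sketch of the arithmetic. The purchase prices and the cloud spend range come from the article; the depreciation periods are an assumption (the article does not state a schedule), the calculation is straight-line and ignores power, staff, and facilities, and the function name is purely illustrative.

```python
# Purchase prices and the cloud spend range come from the article.
# The depreciation periods are an ASSUMPTION; the article gives no schedule.
SMALL_SYSTEM = 4_000_000
PEAK_SYSTEM = 20_000_000
CLOUD_MONTHLY_RANGE = (50_000, 100_000)
ASSUMED_YEARS = (3, 4, 5)


def monthly_depreciation(purchase_price: float, years: int) -> float:
    """Straight-line depreciation per month (hardware only)."""
    return purchase_price / (years * 12)


if __name__ == "__main__":
    low, high = CLOUD_MONTHLY_RANGE
    print(f"Cloud spend: ${low:,.0f} to ${high:,.0f} per month")
    for years in ASSUMED_YEARS:
        small = monthly_depreciation(SMALL_SYSTEM, years)
        peak = monthly_depreciation(PEAK_SYSTEM, years)
        print(f"{years} years: $4M system ${small:,.0f}/month, "
              f"$20M system ${peak:,.0f}/month")
```

Under a five-year assumption, the $4M system depreciates at roughly $67K per month, which falls inside the $50K to $100K cloud range, while the $20M system comes to roughly $333K per month. A shorter assumed period raises both figures proportionally.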

All customers with high performance computing requirements face the same challenge the auto manufacturer faced. The problem becomes even more pronounced with the new variety of processor architectures, which includes CPU, GPU, TPU, KNL, and FPGA, and multiple interconnect technologies, because you either have to partition your system or forgo the latest architectures. In contrast, the cloud does not force you to choose and instead allows you to run each workload on the best possible architecture for your problem type. Applications are readily accessible on the cloud, allowing customers to pay per usage. The availability of hardware and applications allows new customers to take advantage of HPC, which was available only to large enterprises before cloud HPC.

Like the previous disruptions of clusters vs. monolithic systems or Linux vs. proprietary operating systems, cloud changes the status quo, takes us out of our comfort zone, and can leave us feeling a loss of control. But the effect of price, the flexibility to dynamically change your system size and choose the best architecture for the job, the availability of applications, the ability to select system cost based on the needs of a particular workload, and the ability to provision and run immediately will prove very attractive for HPC users. It may be time to start thinking about HPC in the cloud in your organization!

The nature of cloud disruption is unique. It’s not all or nothing, and you can dip your toe in the water. If you follow the traditional processes and buy another on-premises system, you will miss the advantages of cloud. Cloud gives us an opportunity to test the benefits of the future without committing to the next multi-million-dollar purchase. If you spend $100K, you can start testing HPC in the cloud immediately and access the latest architectures available. If the next HPC system in 3-5 years will be in the cloud or will be a hybrid system, testing it now, learning from it, and iterating will reduce risk and will enable a much smoother transition. So, in addition to thinking about cloud, I encourage you to test the future starting next week!

Gabriel Broner has been in the HPC industry for 25 years. He has held roles as operating systems architect at Cray, VP & GM of HPC at SGI/HPE, head of innovation at Ericsson, and GM at Microsoft. Gabriel joined Rescale as VP & GM of HPC in July 2017.