Today at GTC China, NVIDIA made a series of announcements around deep learning and GPU-accelerated computing for hyperscale datacenters.

“At no time in the history of computing have such exciting developments been happening, and such incredible forces in computing been affecting our future,” said NVIDIA CEO Jensen Huang. Demand is surging for technology that can accelerate the delivery of AI services of all kinds, and NVIDIA’s deep learning platform, which the company updated Tuesday with new inferencing software, promises to be the fastest, most efficient way to deliver these services.

Announcement highlights:

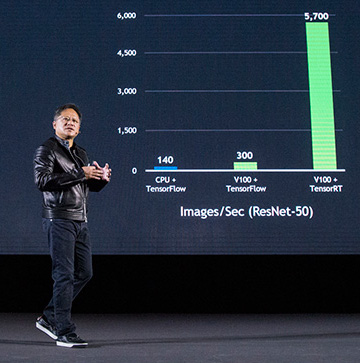

- TensorRT 3 inferencing acceleration platform. NVIDIA unveiled TensorRT 3 AI inferencing software, which runs a trained neural network in a production environment (see “What’s the Difference Between Deep Learning Training and Inferencing?”). The new software will boost the performance and slash the cost of inferencing from the cloud to edge devices, including self-driving cars and robots.

- V100 to power AI cloud in China. Huang announced that Alibaba, Baidu and Tencent are all deploying Tesla V100 GPU accelerators in their cloud services. In addition, China’s top OEMs (Huawei, Inspur and Lenovo) have all adopted NVIDIA’s HGX server architecture to build a new generation of accelerated data centers with Tesla V100 GPUs.

- JD.com selects NVIDIA for autonomous machines. Huang announced the companies’ collaboration to bring AI to logistics and delivery using autonomous machines powered by the NVIDIA Jetson supercomputer on a module.

In this keynote video from GTC China, NVIDIA CEO Jensen Huang unveils AI tools and a wide range of partnerships with China’s leading technology providers, including Alibaba Cloud, Baidu, Tencent, Huawei, Inspur, Lenovo, Hikvision and JD, to accelerate mass adoption of AI computing across cloud computing, services, smart cities, self-driving vehicles and autonomous machines.