Intel is aiming to tackle the challenges of increasing memory bandwidth for HPC applications with an FPGA that includes High Bandwidth Memory DRAM (HBM2).

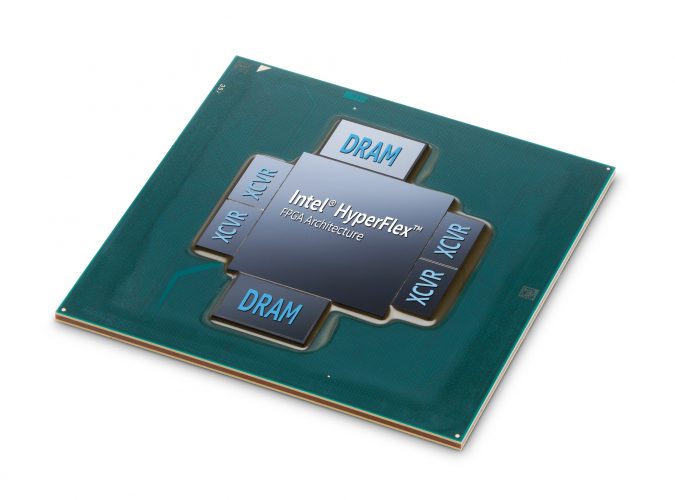

Intel recently announced the availability of the Intel Stratix 10 MX FPGA, the industry’s first field programmable gate array (FPGA) with integrated HBM2. By integrating the FPGA and the HBM2, Intel Stratix 10 MX FPGAs offer up to 10 times the memory bandwidth of a standard DDR 2400 DIMM.

Intel is positioning this new FPGA as a tool that can further accelerate HPC application performance by keeping pace with the memory bandwidth demands seen in many of today’s HPC clusters. In HPC environments, the ability to compress and decompress data before or after mass data movements is paramount. HBM2-based FPGAs can compress and accelerate larger data movements compared with stand-alone FPGAs.

“To efficiently accelerate these workloads, memory bandwidth needs to keep pace with the explosion in data,” said Reynette Au, vice president of marketing, Intel Programmable Solutions Group. “We designed the Intel Stratix 10 MX family to provide a new class of FPGA-based multi-function data accelerators for HPC and HPDA markets.”

In High Performance Data Analytics (HPDA) environments, streaming data pipeline frameworks like Apache Kafka and Apache Spark Streaming require real-time hardware acceleration. Intel Stratix 10 MX FPGAs can simultaneously read/write data and encrypt/decrypt data in real time without burdening the host CPU resources.

These bandwidth capabilities make Intel Stratix 10 MX FPGAs essential multi-function accelerators for high-performance computing (HPC), data centres, network functions virtualisation (NFV), and broadcast applications that require hardware accelerators to speed up mass data movements and streaming data pipeline frameworks.

The Intel Stratix 10 MX FPGA family provides a maximum memory bandwidth of 512 gigabytes per second with the integrated HBM2. HBM2 vertically stacks DRAM layers using through-silicon via (TSV) technology. These DRAM layers sit on a base layer that connects to the FPGA using high-density microbumps. The Intel Stratix 10 MX FPGA family utilises Intel’s Embedded Multi-Die Interconnect Bridge (EMIB), which speeds communication between the FPGA fabric and the DRAM. EMIB works to efficiently integrate HBM2 with a high-performance monolithic FPGA fabric, solving the memory bandwidth bottleneck in a power-efficient manner.
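
As a rough illustration of where a figure like 512 gigabytes per second can come from, the sketch below computes peak bandwidth from interface width and per-pin data rate. The specific inputs (a 1024-bit interface per HBM2 stack, 2.0 Gbit/s per pin, and two stacks per device) are assumptions based on commonly quoted HBM2 figures, not numbers given in this article, and the function name is purely illustrative.

```python
# Back-of-the-envelope peak bandwidth for a wide memory interface.
# ASSUMPTIONS (not stated in the article): each HBM2 stack exposes a
# 1024-bit interface running at 2.0 Gbit/s per pin, and the device
# carries two such stacks.

def peak_bandwidth_gbytes(bus_width_bits: int, gbits_per_pin: float, stacks: int = 1) -> float:
    """Return peak bandwidth in gigabytes per second.

    bandwidth = width (bits) * rate (Gbit/s per pin) / 8 (bits per byte) * stacks
    """
    return bus_width_bits * gbits_per_pin / 8 * stacks


per_stack = peak_bandwidth_gbytes(1024, 2.0)        # one assumed HBM2 stack
total = peak_bandwidth_gbytes(1024, 2.0, stacks=2)  # two assumed stacks

print(f"Per stack: {per_stack:.0f} GB/s")
print(f"Total:     {total:.0f} GB/s")
```

Under these assumptions the total matches the 512 gigabytes per second quoted above. This is a theoretical peak; sustained bandwidth in a real design depends on access patterns and controller efficiency.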

It remains to be seen whether FPGA technology can break through into mainstream HPC. FPGA technologies have been available to HPC users for some time, but until now they have not been widely adopted because creating FPGA-accelerated applications requires more specialised programming. The use of a hardware description language (HDL) to program FPGA logic specifically for an application adds extra complexity to application development.

However, as the need for memory bandwidth increases, HPC system designers may be more willing to adopt specialised technologies such as FPGAs if they can deliver on the promise of faster application performance.

This story appears here as part of a cross-publishing agreement with Scientific Computing World.