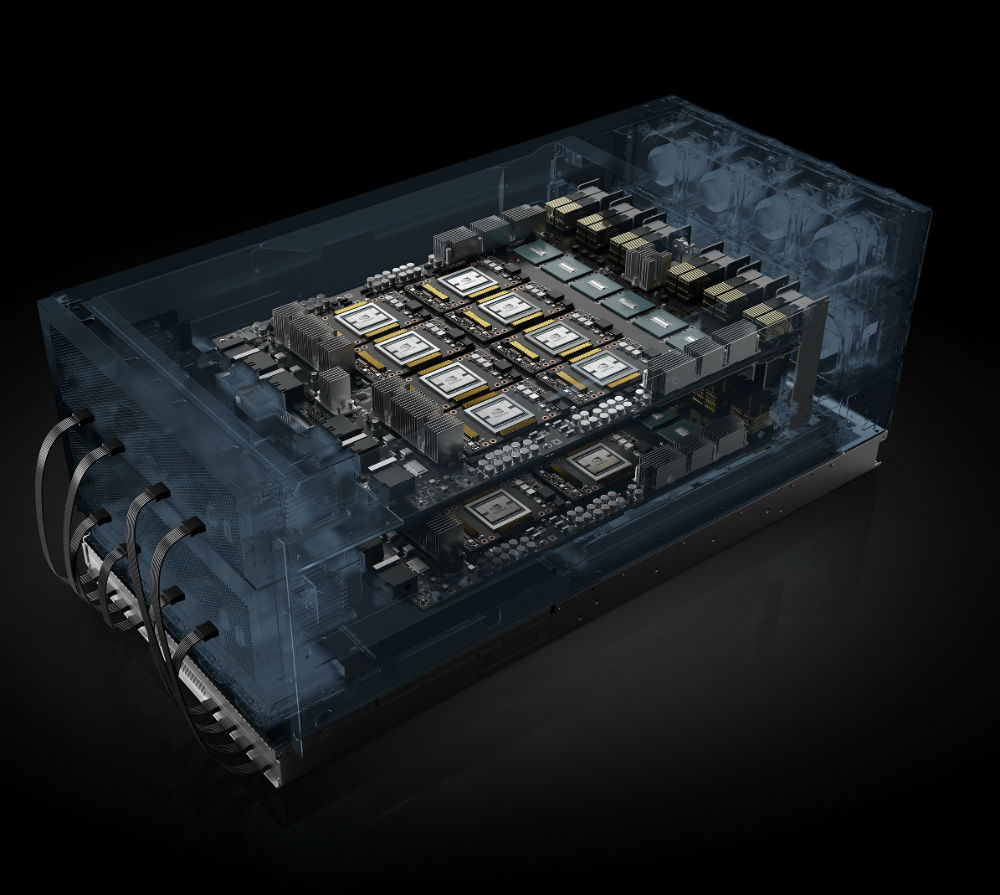

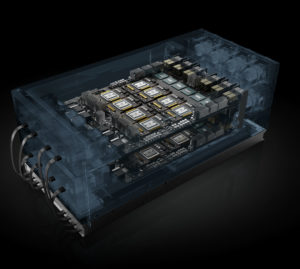

Today NVIDIA introduced the NVIDIA HGX-2, “the first unified computing platform for both artificial intelligence and high performance computing.” As a powerful cloud-server reference platform, the HGX-2 provides companies with unprecedented versatility to meet the requirements of applications that combine HPC with AI.


“The world of computing has changed,” said Jensen Huang, founder and chief executive officer of NVIDIA, speaking at the GPU Technology Conference Taiwan, which kicked off today. “CPU scaling has slowed at a time when computing demand is skyrocketing. NVIDIA’s HGX-2 with Tensor Core GPUs gives the industry a powerful, versatile computing platform that fuses HPC and AI to solve the world’s grand challenges.”

The HGX-2 cloud server platform supports multi-precision computing: FP64 and FP32 for high-precision scientific computing and simulations, and FP16 and Int8 for AI training and inference. This versatility meets the requirements of the growing number of applications that combine HPC with AI.
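To make the precision trade-off concrete, here is a minimal sketch in plain Python (standard library only, no GPU involved) that stores the same value in FP64, FP32 and FP16 and measures the rounding error of each. The helper names and the symmetric Int8 quantization scheme with a caller-chosen `scale` are illustrative assumptions, not anything NVIDIA describes for HGX-2.

```python
import struct

# IEEE 754 storage formats understood by the struct module.
FORMATS = {"FP64": "<d", "FP32": "<f", "FP16": "<e"}


def round_trip(value, fmt):
    """Store value in the given binary format and read it back as a float."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def rounding_errors(value):
    """Absolute error introduced by storing value in each format."""
    return {name: abs(round_trip(value, fmt) - value)
            for name, fmt in FORMATS.items()}


def quantize_int8(value, scale):
    """Hypothetical symmetric Int8 quantization: round(value / scale),
    clamped to the range [-127, 127]."""
    return max(-127, min(127, round(value / scale)))


if __name__ == "__main__":
    for name, err in rounding_errors(0.1).items():
        print(f"{name}: absolute rounding error {err:.3e}")
    print("Int8 code for 1.0 at scale 1/127:", quantize_int8(1.0, 1 / 127))
```

The error grows as the format narrows, which is why simulations tend to stay in FP64 or FP32 while neural-network training and inference can often tolerate FP16 or Int8.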

A number of leading computer makers today shared plans to bring to market systems based on the NVIDIA HGX-2 platform.

“NVIDIA’s HGX-2 ups the ante with a design capable of delivering two petaflops of performance for AI and HPC-intensive workloads,” said Paul Ju, vice president and general manager of Lenovo DCG. “With the HGX-2 server building block, we’ll be able to quickly develop new systems that can meet the growing needs of our customers who demand the highest performance at scale.”

As a reference platform, HGX-2 serves as a “building block” for manufacturers to create some of the most advanced systems for HPC and AI. Highlights include:

- Record AI Training Speed. HGX-2 has achieved record AI training speeds of 15,500 images per second on the ResNet-50 training benchmark, and can replace up to 300 CPU-only servers.

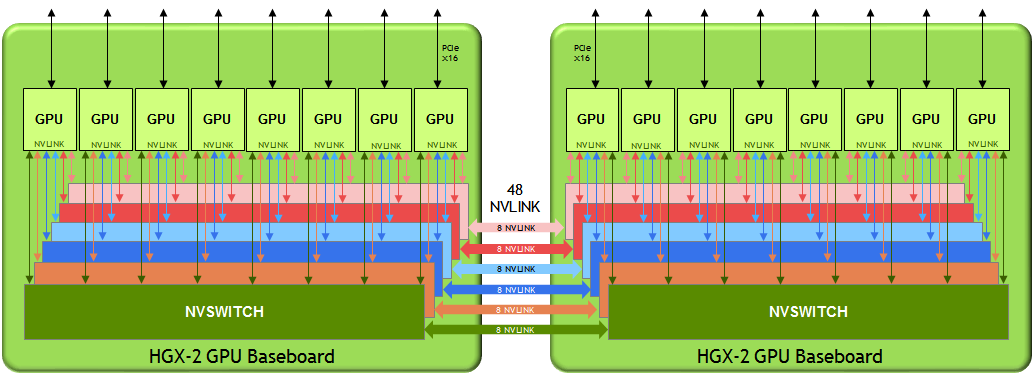

- High-performance interconnect. HGX-2 incorporates such breakthrough features as NVIDIA NVSwitch interconnect fabric, which seamlessly links 16 NVIDIA Tesla V100 Tensor Core GPUs to work as a single, giant GPU delivering two petaflops of AI performance. The first system built using HGX-2 was the recently announced NVIDIA DGX-2.

- Rapid Adoption. Four leading server makers — Lenovo, QCT, Supermicro and Wiwynn — have already announced plans to bring their own HGX-2-based systems to market later this year. Four of the world’s top original design manufacturers (ODMs) — Foxconn, Inventec, Quanta and Wistron — are designing HGX-2-based systems, also expected later this year, for use in some of the world’s largest cloud datacenters.
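The headline figures in the highlights above can be broken down with simple arithmetic. The sketch below uses only the numbers quoted in this article; the function names are made up for illustration, and the per-server figure is a rough implied value that assumes the "up to 300 CPU-only servers" comparison refers to the same ResNet-50 workload.

```python
# Figures quoted in the article.
TOTAL_AI_PETAFLOPS = 2.0          # aggregate AI performance of HGX-2
GPU_COUNT = 16                    # Tesla V100 GPUs linked by NVSwitch
RESNET50_IMAGES_PER_SEC = 15_500  # ResNet-50 training throughput
CPU_SERVERS_REPLACED = 300        # "up to 300 CPU-only servers"


def per_gpu_teraflops(total_petaflops, gpus):
    """Average AI throughput per GPU, in teraflops (1 petaflop = 1000 teraflops)."""
    return total_petaflops * 1000 / gpus


def implied_images_per_cpu_server(images_per_sec, servers):
    """Throughput each CPU-only server would need for the fleet to match HGX-2."""
    return images_per_sec / servers


if __name__ == "__main__":
    tflops = per_gpu_teraflops(TOTAL_AI_PETAFLOPS, GPU_COUNT)
    imgs = implied_images_per_cpu_server(RESNET50_IMAGES_PER_SEC,
                                         CPU_SERVERS_REPLACED)
    print(f"Per-GPU AI performance: {tflops:.0f} teraflops")
    print(f"Implied CPU-only server throughput: {imgs:.1f} images/s")
```

Two petaflops spread over 16 GPUs works out to 125 teraflops per GPU, and the 300-server comparison implies roughly 52 images per second per CPU-only server.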

“To help address the rapidly expanding size of AI models that sometimes require weeks to train, Supermicro is developing cloud servers based on the HGX-2 platform,” said Charles Liang, president and CEO of Supermicro. “The HGX-2 system will enable efficient training of complex models.”

HGX-2 is a part of the larger family of NVIDIA GPU-Accelerated Server Platforms, an ecosystem of qualified server classes addressing a broad array of AI, HPC and accelerated computing workloads with optimal performance.
