In this video from the DDN User Group at ISC 2018, Steve Simms from Indiana University presents: Lustre / ZFS at Indiana University.

Data migrations can be time-consuming and tedious, often requiring long maintenance windows of downtime. Common reasons for data migrations include aging or failing hardware, the need for more capacity, and the need for greater performance. Traditional file-based and block-based “copy tools” each have pros and cons, but the time to complete the migration is often the core issue. Some file-based tools are feature-rich, allowing quick comparisons of date/time stamps or of changed blocks inside a file. However, examining millions or even billions of files takes time. Even when there is little to no data churn, a final “sync” may take hours or even days to complete while moving very little data. Block-based tools have fairly predictable transfer speeds when the block device is otherwise “idle,” but many of them do not allow “delta” transfers: the entire block device must be read and then written out to another block device to complete the migration.

ZFS-backed OSTs can be migrated to new hardware, or to existing reconfigured hardware, by leveraging ZFS snapshots and ZFS send/receive operations. The ZFS snapshot/send/receive migration method uses incremental data transfers, allowing an initial data copy to be “caught up” with subsequent incremental changes. This migration method preserves all the ZFS Lustre properties (mgsnode, fsname, network, index, etc.), but allows the underlying zpool geometry to be changed on the destination. The rolling ZFS snapshot/send/receive operations can be maintained on a per-OST basis, allowing granular migrations.
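The rolling snapshot/send/receive cycle described above can be sketched as a small Python helper that only builds the command lines, without running them. This is a minimal illustration, not the procedure used at IU: the pool and dataset names (`oldpool/ost0`, `newpool/ost0`) and snapshot names are hypothetical, and the choice of `zfs send -p` (include dataset properties) and `zfs receive -F` (roll the destination back to the last received snapshot) is an assumption about how one might carry the Lustre properties across.

```python
import shlex


def snapshot_cmd(dataset, snap):
    """Command that creates snapshot `dataset@snap`."""
    return shlex.join(["zfs", "snapshot", f"{dataset}@{snap}"])


def send_receive_cmd(src, dst, snap, prev_snap=None):
    """Pipeline that copies `src@snap` into `dst`.

    With prev_snap=None this is the initial full copy; otherwise only
    the changes between prev_snap and snap are sent (-i).
    Assumption: -p is used so dataset properties travel with the stream.
    """
    send = ["zfs", "send", "-p"]
    if prev_snap is not None:
        send += ["-i", f"{src}@{prev_snap}"]
    send.append(f"{src}@{snap}")
    recv = ["zfs", "receive", "-F", dst]
    return f"{shlex.join(send)} | {shlex.join(recv)}"


def rolling_plan(src, dst, snaps):
    """Commands for one full copy followed by incremental catch-ups."""
    plan = []
    prev = None
    for snap in snaps:
        plan.append(snapshot_cmd(src, snap))
        plan.append(send_receive_cmd(src, dst, snap, prev))
        prev = snap
    return plan


if __name__ == "__main__":
    # Hypothetical per-OST datasets; substitute real names before use.
    for line in rolling_plan("oldpool/ost0", "newpool/ost0", ["mig1", "mig2"]):
        print(line)
```

Because each OST is its own dataset, a plan like this can be generated and run independently per OST, which is what makes the migration granular.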

This migration method greatly reduces the final “sync” downtime, as rolling snapshot/send/receive operations can be run continuously, thereby paring down the deltas to the smallest possible amount. There is no overhead to examine all the changed data, as the snapshot “is” the changed data. Additionally, the duration of the final sync can be estimated from previous snapshot/send/receive operations, which supports a more accurate downtime window.
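One simple way to turn earlier incremental runs into a downtime estimate is to compute the average throughput they achieved and apply it to the expected size of the final delta. The sketch below assumes the operator has recorded bytes transferred and elapsed seconds for each previous run; the function name and the sample figures are hypothetical, and the size of the final delta would have to come from elsewhere (for example, a dry-run send).

```python
def estimate_final_sync_seconds(history, final_delta_bytes):
    """Estimate how long the final incremental send will take.

    history: list of (bytes_sent, seconds_elapsed) from earlier runs.
    final_delta_bytes: expected size of the last incremental stream.
    Assumes the final run achieves the same average throughput.
    """
    total_bytes = sum(b for b, _ in history)
    total_seconds = sum(s for _, s in history)
    if total_bytes <= 0 or total_seconds <= 0:
        raise ValueError("history must contain at least one non-empty run")
    throughput = total_bytes / total_seconds  # bytes per second
    return final_delta_bytes / throughput


if __name__ == "__main__":
    # Illustrative numbers only: two earlier incrementals, then a 20 GB delta.
    runs = [(100_000_000_000, 1000), (50_000_000_000, 500)]
    print(estimate_final_sync_seconds(runs, 20_000_000_000))
```

A weighted average over all runs is used rather than the most recent run alone so that one unusually fast or slow transfer does not dominate the estimate; a more conservative planner could use the slowest observed throughput instead.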

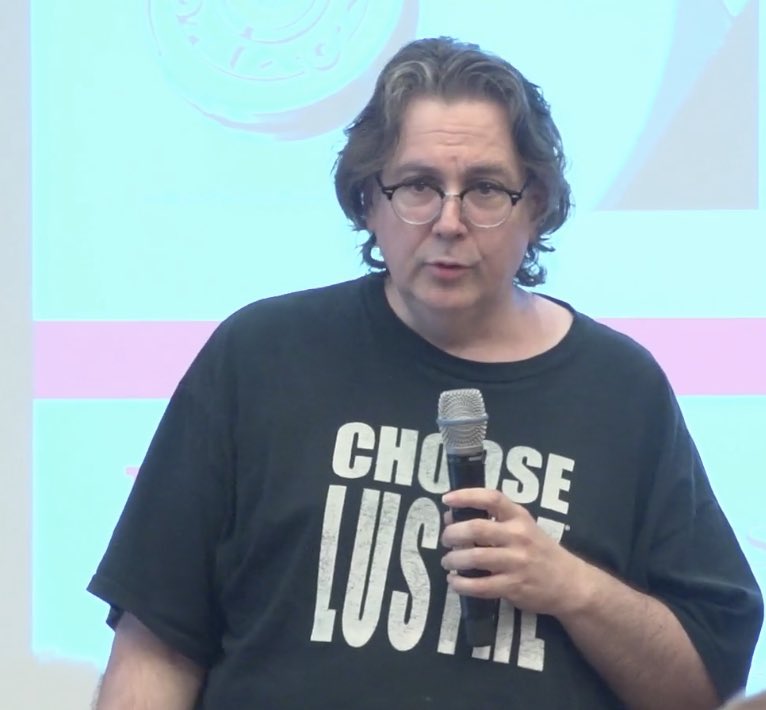

Steve Simms works for the Research Technologies division of University Information Technology Services (UITS) at IU. Simms has worked in High Performance Computing at IU since 1999 and currently leads IU’s High Performance File Systems Team. He and his team have been active in Lustre research, pioneering the use of Lustre across the WAN. Simms has served as the president of OpenSFS, a user-driven non-profit organization dedicated to promoting development and use of the Lustre file system.