Over at Argonne, Madeleine O’Keefe writes that the Lab is supporting CERN researchers working to interpret Big Data from the Large Hadron Collider (LHC), the world’s largest particle accelerator. The LHC is expected to output 50 petabytes of data this year alone, equivalent to nearly 15 million high-definition movies. That volume is so enormous that analyzing it all poses a serious challenge to researchers.

Centered around the ATLAS experiment, these efforts are especially important given what is coming up for the accelerator. In 2026, the LHC will undergo an ambitious upgrade to become the High-Luminosity LHC (HL-LHC). The aim of this upgrade is to increase the LHC’s luminosity—the number of events detected per second—by a factor of 10. “This means that the HL-LHC will be producing about 20 times more data per year than what ATLAS will have on disk at the end of 2018,” says Taylor Childers, a member of the ATLAS collaboration and computer scientist at the ALCF who is leading the effort at the facility. “CERN’s computing resources are not going to grow by that factor.”

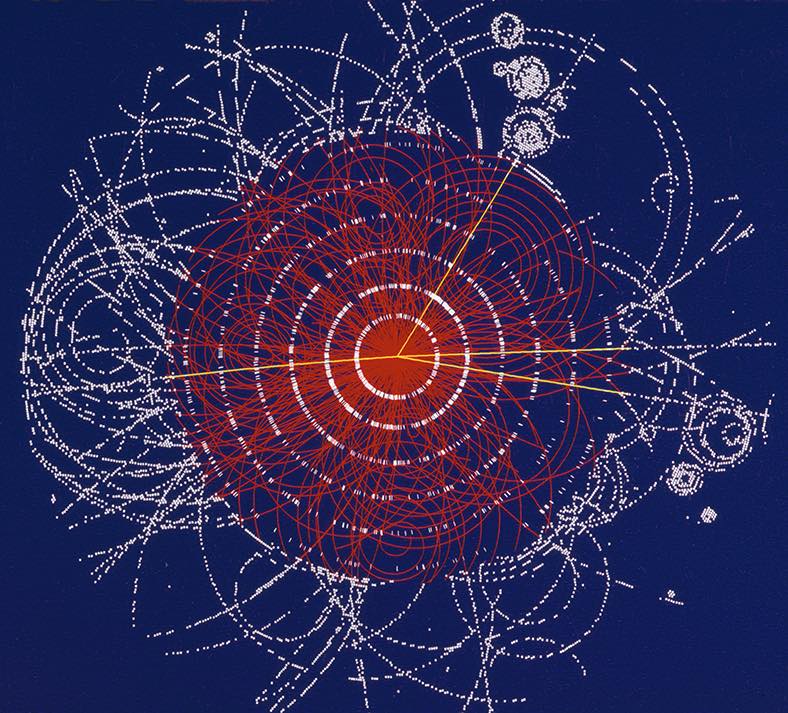

The ATLAS detector is an 82-foot-tall, 144-foot-long cylinder with magnets, detectors, and other instruments layered around the central beampipe like an enormous 7,000-ton Swiss roll. When protons collide in the detector, they send a spray of subatomic particles flying in all directions, and this particle debris generates signals in the detector’s instruments. Scientists can use these signals to discover important information about the collision and the particles that caused it in a computational process called reconstruction. Childers compares this process to arriving at the scene of a car crash that has nearly completely obliterated the vehicles and trying to figure out the makes and models of the cars and how fast they were going. Reconstruction is also performed on simulated data in the ATLAS analysis framework, called Athena.

An ATLAS physics analysis consists of three steps. First, in event generation, researchers use the physics that they know to model the kinds of particle collisions that take place in the LHC. In the next step, simulation, they generate the subsequent measurements the ATLAS detector would make. Finally, reconstruction algorithms are run on both simulated and real data, the output of which can be compared to see differences between theoretical prediction and measurement.
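The three steps above can be pictured as a chain of functions, each consuming the previous step's output. What follows is only a toy sketch in Python, not ATLAS or Athena code: the exponential energy spectrum, the 10 percent Gaussian smearing, the 5 GeV threshold, and every function name are assumptions made up for illustration.

```python
import random
import statistics


def generate_events(n, seed=0):
    """Event generation: draw 'true' particle energies (GeV) from a toy model."""
    rng = random.Random(seed)
    # Assumed toy physics: exponential spectrum with a 50 GeV mean.
    return [rng.expovariate(1 / 50.0) for _ in range(n)]


def simulate_detector(true_energies, resolution=0.1, seed=1):
    """Simulation: smear each true energy with an assumed Gaussian resolution."""
    rng = random.Random(seed)
    return [rng.gauss(e, resolution * e) for e in true_energies]


def reconstruct(signals, threshold=5.0):
    """Reconstruction: keep signals above an assumed noise threshold."""
    return [s for s in signals if s > threshold]


def run_chain(n_events=10_000):
    """Run all three steps back-to-back and return truth and reconstructed values."""
    truth = generate_events(n_events)
    signals = simulate_detector(truth)
    reco = reconstruct(signals)
    return truth, reco


if __name__ == "__main__":
    truth, reco = run_chain()
    print(f"generated {len(truth)} events, reconstructed {len(reco)}")
    print(f"mean true energy: {statistics.mean(truth):.1f} GeV")
    print(f"mean reconstructed energy: {statistics.mean(reco):.1f} GeV")
```

In a real analysis the same reconstruction step would also run on recorded detector data, and the comparison between the two outputs is where a deviation from prediction would show up.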

“If we understand what’s going on, we should be able to simulate events that look very much like the real ones,” says Tom LeCompte, a physicist in Argonne’s High Energy Physics division and former physics coordinator for ATLAS.

“And if we see the data deviate from what we know, then we know we’re either wrong, we have a bug, or we’ve found new physics,” says Childers.

Some of these simulations, however, are too complicated for the Worldwide LHC Computing Grid, which LHC scientists have used to handle data processing and analysis since 2002. The Grid is an international distributed computing infrastructure that links 170 computing centers across 42 countries, allowing data to be accessed and analyzed in near real-time by an international community of more than 10,000 physicists working on various LHC experiments.

The Grid has served the LHC well so far, but as demand for new science increases, so does the required computing power.

That’s where the ALCF comes in.

In 2011, when LeCompte returned to Argonne after serving as ATLAS physics coordinator, he started looking for the next big problem he could help solve. “Our computing needs were growing faster than it looked like we would be able to fulfill them, and we were beginning to notice that there were problems we were trying to solve with existing computing that just weren’t able to be solved,” he says. “It wasn’t just an issue of having enough computing; it was an issue of having enough computing in the same place. And that’s where the ALCF really shines.”

LeCompte worked with Childers and ALCF computer scientist Tom Uram to use Mira, the ALCF’s 10-petaflops IBM Blue Gene/Q supercomputer, to carry out calculations to improve the performance of the ATLAS software. Together they scaled Alpgen, a Monte Carlo-based event generator, to run efficiently on Mira, enabling the generation of millions of particle collision events in parallel. “From start to finish, we ended up processing events more than 20 times as fast, and used all of Mira’s 49,152 processors to run the largest-ever event generation job,” reports Uram.

But they weren’t going to stop there. Simulation, which takes up around five times more Grid computing than event generation, was the next challenge to tackle.

Moving forward with Theta

In 2017, Childers and his colleagues were awarded a two-year allocation from the ALCF Data Science Program (ADSP), a pioneering initiative designed to explore and improve computational and data science methods that will help researchers gain insights into very large datasets produced by experimental, simulation, or observational methods. The goal is to deploy Athena on Theta, the ALCF’s 11.69-petaflops Intel-Cray supercomputer, and to develop an end-to-end workflow that couples all the steps together. This would improve on the current execution model for ATLAS jobs, which involves a many-step workflow executed on the Grid.

“Each of those steps (event generation, simulation, and reconstruction) has input data and output data, so if you do them in three different locations on the Grid, you have to move the data with it,” explains Childers. “Ideally, you do all three steps back-to-back on the same machine, which reduces the amount of time you have to spend moving data around.”

Enabling portions of this workload on Theta promises to expedite the production of simulation results, discovery, and publications, as well as increase the collaboration’s data analysis reach, thus moving scientists closer to new particle physics.

One challenge the group has encountered so far is that, unlike other computers on the Grid, Theta cannot reach out to the job server at CERN to receive computing tasks. To solve this, the ATLAS software team developed Harvester, a Python edge service that can retrieve jobs from the server and submit them to Theta. In addition, Childers developed Yoda, an MPI-enabled wrapper that launches these jobs on each compute node.
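The division of labor described here, an edge service that pulls work in from outside and a wrapper that spreads it across compute nodes, can be sketched in a few lines of Python. This is a hypothetical illustration only: it does not use the real Harvester or Yoda interfaces, it uses no MPI, and `ToyJobServer`, `edge_service_poll`, and `assign_to_ranks` are invented names. Ranks are modeled as plain dictionary keys.

```python
from collections import deque


class ToyJobServer:
    """Hypothetical stand-in for a remote job server holding pending jobs."""

    def __init__(self, jobs):
        self._pending = deque(jobs)

    def fetch(self, max_jobs):
        """Hand out up to max_jobs pending jobs, oldest first."""
        batch = []
        while self._pending and len(batch) < max_jobs:
            batch.append(self._pending.popleft())
        return batch


def edge_service_poll(server, local_queue, batch_size):
    """Edge-service role: pull a batch from outside and stage it locally."""
    batch = server.fetch(batch_size)
    local_queue.extend(batch)
    return len(batch)


def assign_to_ranks(local_queue, n_ranks):
    """Wrapper role: split staged jobs across ranks in round-robin order."""
    assignments = {rank: [] for rank in range(n_ranks)}
    for i, job in enumerate(local_queue):
        assignments[i % n_ranks].append(job)
    return assignments


if __name__ == "__main__":
    server = ToyJobServer([f"sim-job-{i}" for i in range(10)])
    staged = []
    # Keep polling until the server has nothing left to hand out.
    while edge_service_poll(server, staged, batch_size=4):
        pass
    for rank, jobs in assign_to_ranks(staged, n_ranks=3).items():
        print(rank, jobs)
```

The point of the pattern is that only the edge service needs a network path to the outside world; the compute nodes see nothing but a local list of work.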

Harvester and Yoda are now being integrated into the ATLAS production system. The team has just started testing this new workflow on Theta, where it has already simulated over 12 million collision events. Simulation is the only step that is “production-ready,” meaning it can accept jobs from the CERN job server.

The team also has a running end-to-end workflow, including event generation and reconstruction, for ALCF resources. For now, the local ATLAS group is using it to run simulations investigating whether machine learning techniques can improve the way they identify particles in the detector. If it works, machine learning could provide a more efficient, less resource-intensive method for handling this vital part of the LHC scientific process.

“Our traditional methods have taken years to develop and have been highly optimized for ATLAS, so it will be hard to compete with them,” says Childers. “But as new tools and technologies continue to emerge, it’s important that we explore novel approaches to see if they can help us advance science.”

Upgrade computing, upgrade science

As CERN’s quest for new science gets more and more intense, as it will with the HL-LHC upgrade in 2026, the computational requirements to handle the influx of data become more and more demanding.

“With the scientific questions that we have right now, you need that much more data,” says LeCompte. “Take the Higgs boson, for example. To really understand its properties and whether it’s the only one of its kind out there takes not just a little bit more data but takes a lot more data.”

This makes the ALCF’s resources—especially its next-generation exascale system, Aurora—more important than ever for advancing science.

Aurora, scheduled to come online in 2021, will be capable of one billion billion calculations per second, or 100 times more computing power than Mira. It is just starting to be integrated into the ATLAS efforts through a new project selected for the Aurora Early Science Program (ESP) led by Jimmy Proudfoot, an Argonne Distinguished Fellow in the High Energy Physics division. Proudfoot says that the effective utilization of Aurora will be key to ensuring that ATLAS continues delivering discoveries on a reasonable timescale. Because more compute resources expand the range of analyses that can be done, systems like Aurora may even enable new analyses not yet envisioned.
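The factor of 100 follows directly from the figures quoted in this article: one billion billion is 10^18 operations per second, and Mira is rated at 10 petaflops, or 10 × 10^15. A short Python check, using only those stated peak numbers (the variable names are illustrative):

```python
EXA = 10**18   # one billion billion
PETA = 10**15

aurora_ops = 1 * EXA    # Aurora's stated target, operations per second
mira_ops = 10 * PETA    # Mira's stated 10 petaflops

ratio = aurora_ops // mira_ops
print(ratio)  # → 100
```

These are peak ratings, so the ratio says nothing about how much faster any particular ATLAS workload would actually run.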

The ESP project, which builds on the progress made by Childers and his team, has three components that will help prepare Aurora for effective use in the search for new physics: enable ATLAS workflows for efficient end-to-end production on Aurora, optimize ATLAS software for parallel environments, and update algorithms for exascale machines.

“The algorithms apply complex statistical techniques which are increasingly CPU-intensive and which become more tractable, and perhaps only possible, with the computing resources provided by exascale machines,” explains Proudfoot.

In the years leading up to Aurora’s run, Proudfoot and his team, which includes collaborators from the ALCF and Lawrence Berkeley National Laboratory, aim to develop the workflow to run event generation, simulation, and reconstruction. Once Aurora becomes available in 2021, the group will bring their end-to-end workflow online.

The stated goals of the ATLAS experiment—from searching for new particles to studying the Higgs boson—only scratch the surface of what this collaboration can do. Along the way to groundbreaking science advancements, the collaboration has developed technology for use in fields beyond particle physics, like medical imaging and clinical anesthesia.

These contributions and the LHC’s quickly growing needs reinforce the importance of the work that LeCompte, Childers, Proudfoot, and their colleagues are doing with ALCF computing resources.

“I believe DOE’s leadership computing facilities are going to play a major role in the processing and simulation of the future rounds of data that will come from the ATLAS experiment,” says LeCompte.

Source: Argonne National Laboratory