We spoke with Eviden’s Group Senior Vice President, Head of Advanced Computing, HPC, Quantum, AI Solutions Emmanuel Le Roux, who asserted that Atos’ financial troubles have had no impact on Eviden, that Eviden is winning deals, growing and hiring.

Eviden: No Impact from Atos’s Financial Difficulties – ‘We Are Growing and Hiring’

A New Day for the TOP500: Aurora No. 2 at 585 PFlops, 4 New Top 10 Entrants, Frontier Still No. 1

Denver — Attendees at the SC23 conference here in Denver have been greeted by a roiled TOP500 ranking of the world’s most powerful supercomputers, along with significant news about a would-be exascale HPC system coming in at no. 2 on the list….

Aurora Exascale Install Update: Cautious Optimism

The twice-annual TOP500 list of the world’s most powerful supercomputers is not universally loved, arguments persist whether the LINPACK benchmark is an optimal way to assess HPC system performance. But few would argue it serves a valuable purpose: for those installing leadership-class supercomputers, the TOP500 poses a challenge and a looming deadline that “concentrates the mind wonderfully.”

Conventional Wisdom Watch: Matsuoka & Co. Take on 12 Myths of HPC

A group of HPC thinkers, including the estimable Satoshi Matsuoka of the RIKEN Center for Computational Science in Japan, have come together to challenge common lines of thought they say have become, to varying degrees, accepted wisdom in HPC. In a paper entitled “Myths and Legends of High-Performance Computing” appearing this week on the Arvix […]

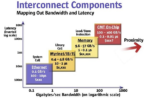

SC22: CXL3.0, the Future of HPC Interconnects and Frontier vs. Fugaku

HPC luminary Jack Dongarra’s fascinating comments at SC22 on the low efficiency of leadership-class supercomputers highlighted by the latest High Performance Conjugate Gradients (HPCG) benchmark results will, I believe, influence the next generation of supercomputer architectures to optimize for sparse matrix computations. The upcoming technology that will help address this problem is CXL. Next generation architectures will use CXL3.0 switches to connect processing nodes, pooled memory and I/O resources into very large, coherent fabrics within a rack, and use Ethernet between racks. I call this a “Petalith” architecture (explanation below), and I think CXL will play a significant and growing role in shaping this emerging development in the high performance interconnect space.

How Machine Learning Is Revolutionizing HPC Simulations

Physics-based simulations, that staple of traditional HPC, may be evolving toward an emerging, AI-based technique that could radically accelerate simulation runs while cutting costs. Called “surrogate machine learning models,” the topic was a focal point in a keynote on Tuesday at the International Conference on Parallel Processing by Argonne National Lab’s Rick Stevens. Stevens, ANL’s […]

IBM Doubles Down on 1000+-Qubit Quantum in 2023

As expectation-setting goes in the technology industry, this is bold. At IBM’s annual Think conference, a senior systems executive reiterated the company’s intent to deliver a 1,121-qubit IBM Quantum Condor processor by 2023. In a video interview with theCUBE, technology publication SiliconANGLE Media’s livestreaming studio, IBM GM of systems strategy and development for enterprise security, […]

Report: Security Firm Says HPC Clusters under Attack: ‘Level of Sophistication Rarely Seen in Linux Malware’

This is an updated version of a story first publised on Feb. 1. UK technology industry publication PCR published a story today stating that an international data security firm, ESET, has reported the identification of a malware called Kobalos that targets supercomputing clusters. They also said they have been working with security experts at CERN, the European Organization for Nuclear Research and other organizations on stemming attacks. “Among other targets was a large Asian ISP, a North American endpoint security vendor as well as several privately held servers,” PCR reported.

HPC Predictions 2021: Quantum Beyond Qubits; the Rising Composable and Memory-Centric Tide; Neural Network Explosion; 5G’s Slow March

Annual technology predictions are like years: some are better than others. Nonetheless, many provide useful insight and serve as the basis for worthwhile discussion. Over the last few months we received a number of HPC and AI predictions for 2021, here are the most interesting and potentially valid. Let’s check in 12 months from now […]