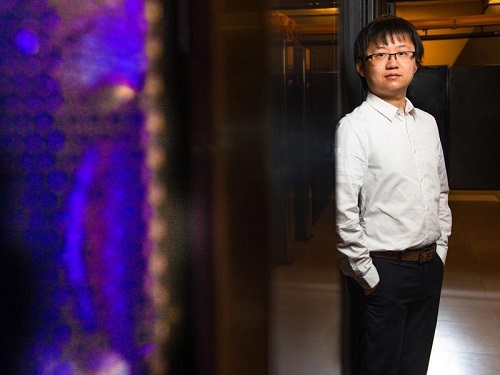

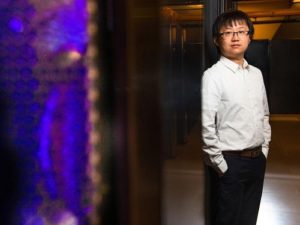

Pacific Northwest National Lab sent along this article today by PNNL’s Allan Brettman, who writes about the advanced techniques used by the lab’s Center for Advanced Technology Evaluation (CENATE) “to judge HPC workload legitimacy that is as stealthy as an undercover detective surveying the scene through a two-way mirror.” These include machine learning methods, such as recurrent neural networks, which the center has found deliver prediction accuracy of more than 95 percent.
