<SPONSORED CONTENT> This insideHPC technology guide, insideHPC Guide to HPC Fusion Computing Model – A Reference Architecture for Liberating Data, discusses why organizations need to adopt a Fusion Computing Model to meet the needs of processing, analyzing, and storing data that is no longer static.

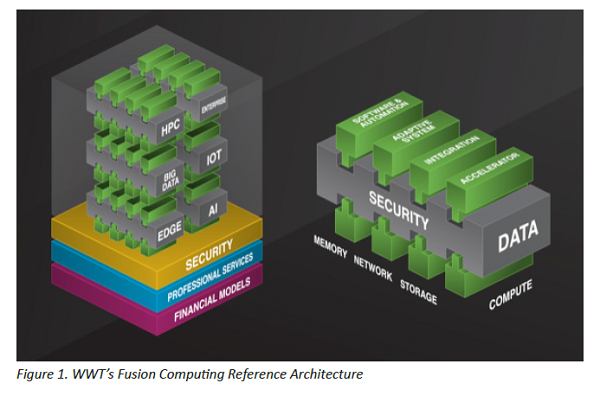

Fusion Computing Reference Architecture

Fusion computing provides a reference architecture with multiple configurations. Dodd states, “We went back to evaluate the first principles of why we store and move data. The Fusion Computing Model looks at a broader integration of capabilities to put agility back at the data center model.”

The model provides the ability to compose and virtualize every computing asset. Figure 1 shows an example of the WWT Fusion Computing reference architecture and services model. Technology elements of fusion computing architecture include:

- Security-first mindset, with a silicon root of trust in Central Processing Units (CPUs) that may provide Runtime Application Self-Protection (RASP) on a server to detect possible application attacks

- Scalable and balanced architecture with sustainable performance

- Open architecture first mindset

- Autonomic infrastructure (self-configuring, healing, optimizing, and protecting)

- Easy to install, easy to administer, and easy to use

- Standards-based and understandable

Benefits of the model include a predictable workflow with reduced costs and investments.

HPC and AI Memory Considerations

HPC processing requires hundreds of gigabytes to terabytes of memory to hold the enormous amounts of data that HPC, AI, ML, DL, Big Data, IoT and IIoT applications access. The capacity of memory systems within an HPC computing environment is therefore critical. Dynamic Random-Access Memory (DRAM), the semiconductor memory commonly used in computers and graphics cards, may be too expensive to use at that capacity in HPC. Yet the larger the high-speed memory, the more data can be held close to the CPU to increase performance. HPC solutions always face tradeoffs.

Importance of HPC Chips and Accelerators

Advances in processors (single and multi-socket) and graphics processing units (GPUs) have revolutionized the speed and flexibility of data processing. Under the Fusion Computing Model, data center staff need to evaluate the type of data that will be processed to determine the best types of CPUs and GPUs for the job.

Creating a data center is expensive, and the density of CPUs and storage is critical. Compute servers used in HPC systems typically have two sockets, and possibly four. Depending on the processor, this configuration can translate to over 120 cores available for computation.
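The core-count arithmetic can be sketched in a few lines of Python. This is only an illustration: the function name and the per-socket core counts (32 and 64) are assumptions chosen for the example, not figures from the guide, and actual counts depend on the processor model selected.

```python
def total_cores(sockets: int, cores_per_socket: int) -> int:
    """Return the physical core count of one server: sockets x cores per socket."""
    if sockets < 1 or cores_per_socket < 1:
        raise ValueError("sockets and cores_per_socket must be positive")
    return sockets * cores_per_socket


# Hypothetical per-socket core counts, for illustration only.
for sockets in (2, 4):
    for cores_per_socket in (32, 64):
        cores = total_cores(sockets, cores_per_socket)
        print(f"{sockets} sockets x {cores_per_socket} cores/socket = {cores} cores")
```

Under these assumed values, a two-socket server reaches the "over 120 cores" range only with the higher per-socket count, while a four-socket server reaches it with either.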

According to the Fusion Computing Model, when architecting an HPC solution, the benefit of putting high-end CPUs in the same enclosure as the storage devices can be substantial. Housing compute and storage together in the same enclosure can eliminate integration issues and places the two close together, both physically and on the same internal bus. Applications will run faster when they access storage in the same system than when they go outside the enclosure.

Incorporating GPUs in the infrastructure may also be necessary, depending on the type of workflows and processing performed. For example, GPU-based accelerators are increasingly used for massively parallel applications such as DL or HPC simulations, which may take thousands of processing hours. DL algorithms may repeatedly perform a simulation learning task to gradually improve a simulation’s outcome through deep layers that enable progressive learning.

Intel® Optane™ persistent memory technology is a new memory class that delivers a combination of affordable large capacity and support for data persistence by combining memory and storage traits into a single product. The product delivers persistent memory, large memory pools and fast caching. It also allows workflows to be consolidated onto fewer compute nodes, making fuller use of processors that were previously underutilized due to memory constraints. Again, this reflects data centricity in the digital race. Intel Optane is combined with 2nd Gen Intel® Xeon® Scalable processors and included with 3rd Gen Intel® Xeon® Scalable processors.

“Compute and memory are important in keeping streams of data in motion and at scale. We recommend putting the compute and memory where the data are located.” – Earl J. Dodd, WWT, Global HPC Business Practice Leader

HPC Storage is Critical to Fusion Computing

Storage is a critical portion of data center infrastructure and a significant component in the Fusion Computing Model. Storage infrastructure should be part of the initial infrastructure selection and planning. Storage must have the intelligence to maximize performance on all types of data. It must also be able to quickly read and write data from multiple applications running simultaneously. Storage security is a significant factor both while the storage is operating and when it is physically removed from the enclosure.

Over the next few weeks we will explore these topics:

- Executive Summary, Customer Challenges, Fusion Computing drives the High Performance Architecture, The Fusion Computing Model: Harnessing the Data Fountain

- Fusion Computing Reference Architecture, HPC and AI Memory Considerations, Importance of HPC Chips and Accelerators, HPC Storage is Critical to Fusion Computing

- Archiving Data Case Study, What to Consider when Selecting Storage for the HPA Reference Architecture, The Role of Storage in Fusion Computing, Seagate Storage and Intel Compute Enable Fusion Computing, WWT’s Advanced Technology Center (ATC), Conclusion

Download the complete insideHPC Guide to HPC Fusion Computing Model – A Reference Architecture for Liberating Data courtesy of Seagate.