Cerebras Systems, maker of the “dinner plate sized” AI processor, announced two alliances today, one with Cirrascale Cloud Services, provider of deep learning solutions for AVs, NLP and computer vision, and with Jasper, maker of an AI content platform for AI-based copywriting and content creation.

Cerebras Systems, maker of the “dinner plate sized” AI processor, announced two alliances today, one with Cirrascale Cloud Services, provider of deep learning solutions for AVs, NLP and computer vision, and with Jasper, maker of an AI content platform for AI-based copywriting and content creation.

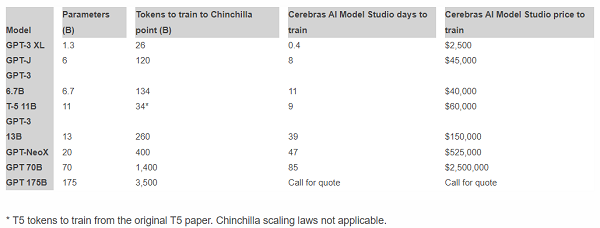

Under the Cirrascale-Cerebras partnership, the two companies announced the availability of the Cerebras AI Model Studio. Hosted on the Cerebras Cloud @ Cirrascale, the offering is designed to enable training of generative Transformer (GPT)-class models, including GPT-J, GPT-3 and GPT-NeoX, on Cerebras Wafer-Scale Clusters, including the Cerebras’ recently announced Andromeda AI supercomputer.

Cerebras said the AI model studio addresses the problems for traditional cloud providers faced with guaranteeing latency between large numbers of GPUs when building large language models.

“Variable latency produces complex and time-consuming challenges in distributing a large AI model among GPUs and large swings in time to train,” Cerebras said in its announcement. “The Cerebras AI Model Studio overcomes these challenges. Set up is quick and easy; clusters of dedicated CS-2s guarantee deterministic latency; and because the clusters rely solely on data parallelization, there is zero distributed compute work required.”

Normally, Cerebras said, training Large Language Models (LLMs) with multi-billion parameters is challenging and expensive, requiring months of training on clusters of GPUs supported by a team of engineers experienced in distributed programming and hybrid data-model parallelism. It is a multi-million dollar investment that many organizations cannot afford, Cerebras said.

But the Cerebras AI Model Studio can train GPT-class models at half the cost of traditional cloud providers, according to Cerebras, and requires only a few lines of code. Users can choose from state-of-the-art GPT-class models ranging from 1.3 billion parameters up to 175 billion parameters, and complete training with 8x faster time to accuracy than on an A100, the company said.

Andrew Feldman, CEO and co-founder of Cerebras Systems said, the AI Model Studio is intended to democratizes AI by providing “access to multi-billion parameter NLP models on our powerful CS-2 clusters, with predictable, competitive model-as-a-service pricing. Our mission at Cerebras is to broaden access to deep learning and rapidly accelerate the performance of AI workloads. The Cerebras AI Model Studio makes this easy and dead simple – just load your dataset and run a script.”

The Cerebras AI Model Studio offers cloud access to the Cerebras Wafer-Scale cluster. Users can access up to a 16-node Cerebras Wafer-Scale cluster and train models using longer sequence lengths of up to 50,000 tokens.

The Cerebras AI Model Studio offers cloud access to the Cerebras Wafer-Scale cluster. Users can access up to a 16-node Cerebras Wafer-Scale cluster and train models using longer sequence lengths of up to 50,000 tokens.

With Jasper, Cerebras intends to improve the accuracy of generative AI for creators and businesses. Using Cerebras’ Andromeda AI supercomputer, Jasper “can train its profoundly computationally intensive models in a fraction of the time and extend the reach of generative AI models to the masses,” the companies said.

Cerebras said generative AI “is one of the most important technological waves in recent history, enabling the ability to write documents, create images, and code software all from ordinary text inputs.”

Based on generative AI models, Jasper said its products are used by nearly 100,000 customers to write copy for marketing, ads, books and other materials. With the Andromeda supercomputer, the partners hope “to dramatically advance AI work, including training GPT networks to fit AI outputs to all levels of end-user complexity and granularity. This improves the contextual accuracy of generative models and will enable Jasper to personalize content across multiple classes of customers quickly and easily.”

Jasper said its platform eliminates “the tyranny of the blank page.”

“Our platform provides an AI co-pilot for creators and businesses to focus on the key elements of their story, not the mundane,” said Dave Rogenmoser, CEO of Jasper. “The most important thing to us is the quality of outputs our users receive. Partnering with Cerebras enables us to invent the future of generative AI by doing things that are impractical or simply impossible with traditional infrastructure. Our collaboration with Cerebras accelerates the potential of generative AI, bringing its benefits to our rapidly growing customer base around the globe.”

Announced at SC22, the 13.5 million AI core Andromeda supercomputer delivers performance and near perfect linear scaling without traditional distributed computing and parallel programming pains, enabling Jasper to efficiently design and optimize their next set of models, the companies said.

Cerebras said in initial work on small workloads, Andromeda was faster than 800 GPUs and on large complex workloads it completed work that thousands of GPUs were incapable of doing. In a recent publication on Gordon Bell award-winning work, the authors wrote, “We note that for the larger model sizes (2.5B and 25B), training on the 10,240 sequence length data was infeasible on GPU-clusters due to out-of-memory errors during attention computation…. To enable training of the larger models on the full sequence length (10,240 tokens), we leveraged AI-hardware accelerators such as Cerebras CS-2, both in a stand-alone mode and as an inter-connected cluster.” It is these very GPU impossible workloads that Jasper has begun exploring, in the quest for more accurate and relevant models, trained in less time with less energy.

Andromeda is now available for commercial customers, as well as for academics and graduate students. It is deployed in Santa Clara, California, in 16 racks at Colovore, a high performance data center. The 16 CS-2 systems, with a combined 13.5 million AI cores are fed by 284 64-core AMD EPYC Gen 3 x86 processors. The SwarmX fabric, which links the MemoryX parameter storage solution to the 16 CS-2s, provides more than 96.8 terabits of bandwidth. Through gradient accumulation Andromeda can support all batch sizes, a characteristic profoundly different from GPU clusters, according to Cerebras.