Stronger. Lighter. More durable. These physical qualities and other properties, such as conductivity, heat resistance, and reactivity, are key to developing novel materials with exceptional performance for various applications, including national security. In nuclear energy production, for example, commercial fusion reactors are a promising technology for producing clean, affordable, limitless energy, but they will require newly engineered materials that can withstand the punishing conditions inside the reactor for sustained, long-term operation. To create these next-generation materials and accelerate their development, scientists need enhanced computational tools for understanding material behavior at the most fundamental level.

First demonstrated in the late 1950s, molecular dynamics (MD) simulations have become a key capability for studying material behavior at the atomic scale. These virtual experiments allow scientists to computationally analyze the physical movement and trajectories of individual atoms and molecules in a system as a function of time and fully evaluate their response to external conditions as the system evolves. MD provides a dependable predictive capability for understanding material properties at the finest scales and in regimes that are often inaccessible in a laboratory setting. The raw computing power of exascale machines offers the ability to perform larger, more complex, and more precise MD simulations, but there’s a catch.

Traditional MD algorithms numerically solve equations of motion for each atom in a physical system through a sequential series of time steps. To use computational resources efficiently and optimize time to solution, a parallel processing technique called domain decomposition breaks the larger problem into smaller subdomains that are distributed equally across individual processors, allowing many independent calculations to be performed simultaneously. When a time step is complete, neighboring subdomains exchange information about what they’ve “learned,” and the process repeats in a succession of loops until a terminating condition, such as a preprogrammed number of time steps, stops the iterative process. However, on larger machines with tens of thousands of processors or more, each subdomain contains fewer and fewer atoms and there is less work to be done locally. Eventually, progress hits a communication wall, where the overhead of synchronizing the work between subdomains exceeds the cost of the subdomain computations and scaling breaks down. This scaling crunch limits conventional MD algorithms to submicrosecond timescales, which are too short to assess the longer-term structural effects of stress, temperature, and pressure on a material.
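The communication wall can be illustrated with a toy cost model. The sketch below is not taken from EXAALT or LAMMPS. It assumes cubic subdomains, a per-atom compute cost, a fixed message latency, and a per-atom exchange cost, and every constant and function name is hypothetical, chosen only to show the trend as the processor count grows for a fixed number of atoms.

```python
def step_cost(n_atoms, n_procs, t_atom=1e-6, t_latency=2e-5, t_halo_atom=2e-7):
    """Toy cost (seconds) of one MD time step under domain decomposition.

    All timing constants are hypothetical and only meant to show the trend.
    """
    local = n_atoms / n_procs                # atoms owned by each subdomain
    compute = t_atom * local                 # local force and integration work
    halo = 6 * local ** (2.0 / 3.0)          # atoms near the six faces of a cubic subdomain
    comm = t_latency + t_halo_atom * halo    # fixed latency plus boundary data exchanged
    return compute, comm


def comm_fraction(n_atoms, n_procs, **constants):
    """Fraction of each time step spent communicating rather than computing."""
    compute, comm = step_cost(n_atoms, n_procs, **constants)
    return comm / (compute + comm)


if __name__ == "__main__":
    # Fixed problem size, growing machine: local work shrinks faster than overhead.
    for procs in (1_000, 10_000, 100_000, 1_000_000):
        print(procs, round(comm_fraction(1_000_000, procs), 3))
```

In this model the compute term shrinks in proportion to the atoms per subdomain, while the latency term does not shrink at all, so the communication fraction rises steadily as processors are added.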

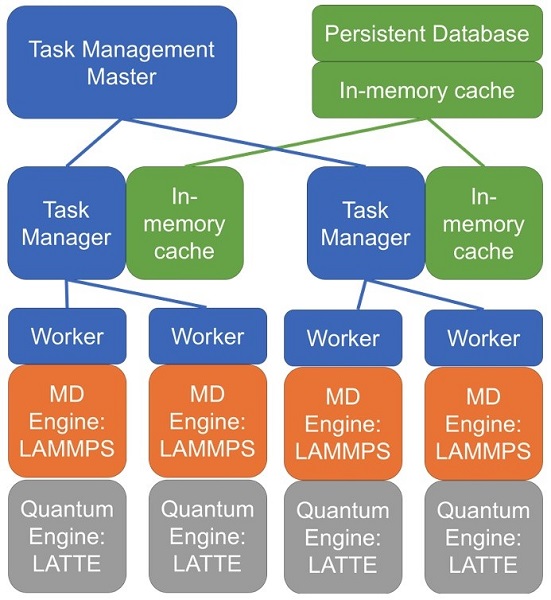

The EXAALT framework provides a task (blue) and data (green) management infrastructure that can orchestrate MD simulations (shown using the LAMMPS MD engine).

As part of the Exascale Computing Project (ECP), a collaborative team of scientists, software developers, and hardware integration specialists from across the Department of Energy (DOE) has developed the Exascale Atomistics for Accuracy, Length, and Time (EXAALT) application to bring MD into the exascale era. Danny Perez, a physicist within the Theoretical Division at Los Alamos National Laboratory and the project’s principal investigator, says, “We’ve implemented new scalable methods that allow us to access as much of the accuracy, length, and time space as possible on exascale machines by rethinking our methods and developing algorithms that go around some of the bottlenecks that limited scaling previously.” Such a capability has the potential to revolutionize MD.

“Scaling” the Communication Wall

The EXAALT application integrates three cutting-edge MD computer codes: the long-renowned LAMMPS (Large-Scale Atomic/Molecular Massively Parallel Simulator) classical MD code; the ParSplice (Parallel Trajectory Splicing) algorithm; and the LATTE (Los Alamos Transferable Tight-Binding for Energetics) code, which is used for simulating quantum aspects of a physical system. Early on, the project team had in hand an open-source version that worked well for huge systems on short timescales or for very small systems on long timescales. The goal was to extend this long-time capability (picoseconds to milliseconds) to intermediate-size systems (hundreds to millions of atoms) at two levels of accuracy: one where machine-learning (ML) interatomic potentials are used to approximate quantum physics and another using a simplified quantum representation of the system that is much more affordable than conventional first-principles quantum approaches.

ECP projects all have a “figure of merit,” which is a specified performance target. Progress toward this target is assessed by running the codes on challenge problems that address real-world scientific questions and serve as a proof of concept for future work. EXAALT is subject to two underlying challenge problems related to materials for nuclear energy. In the first, the team’s ML models simulate the effects of plasma, neutron flux, and extremely high temperatures on the walls of fusion reactors to further the development of more resilient, long-lasting materials. The second problem uses quantum-based models to better understand the evolution of nuclear fuels in fission power plants. This work helps to address structural material degradation that affects production efficiency. Perez says, “The physics and the chemistry of these materials is way more complex than just a simple metal, so capturing quantum effects is very important to understanding the material behavior and developing better performing materials for these applications.”

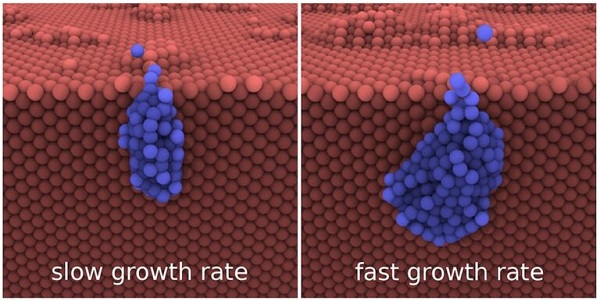

The EXAALT framework and management infrastructure orchestrate all the different calculations and stitch them together seamlessly to generate high-quality trajectories of ensembles of atoms in materials. The framework implements the ParSplice method, which uses a parallel-in-time approach to lengthen the timescales that can be achieved. “We did a lot of work to figure out how to enable the basic codes that drive the whole framework so that we could speed up the time steps and synchronize the data into interesting trajectories,” says Perez. “We added an extra layer of domain decomposition that enables time acceleration by running different subregions of the system independently. We can accelerate one small patch at a time and run multiple copies of each patch, which allows us to leverage more parallelism.” With this approach, the simulation timescale becomes controlled by the morphological changes happening locally rather than globally, extending the timescales that can be achieved.
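The idea of splicing independently generated trajectory segments can be sketched with a toy model. The code below is not EXAALT's implementation. It replaces each short MD run with a draw from a hypothetical three-state transition table, stores the resulting segments by the state they start in, and extends the trajectory by consuming a stored segment whose start matches the current end state. The state names, probabilities, and function names are all assumptions made for illustration, and the extra spatial decomposition Perez describes is left out.

```python
import random
from collections import defaultdict, deque

# Hypothetical 3-state system: probability that a short segment started in
# one state ends in another. A real code would obtain this from MD runs.
TRANSITIONS = {
    "A": {"A": 0.90, "B": 0.10},
    "B": {"A": 0.05, "B": 0.90, "C": 0.05},
    "C": {"B": 0.10, "C": 0.90},
}


def run_segment(start, rng):
    """Stand-in for one short MD run: returns (start_state, end_state)."""
    states, weights = zip(*TRANSITIONS[start].items())
    return start, rng.choices(states, weights=weights)[0]


def splice(initial, n_segments, batch=8, seed=0):
    """Build a state-to-state trajectory by splicing stored segments end to end."""
    rng = random.Random(seed)
    store = defaultdict(deque)      # segments indexed by their start state
    trajectory = [initial]
    generated = 0
    while len(trajectory) - 1 < n_segments:
        current = trajectory[-1]
        if not store[current]:
            # Each loop iteration stands in for an independent worker; in a
            # parallel run these segments would be produced at the same time.
            for _ in range(batch):
                store[current].append(run_segment(current, rng))
                generated += 1
        _, end = store[current].popleft()   # consume the oldest stored segment
        trajectory.append(end)
    return trajectory, generated
```

Calling `splice("A", 50)` returns a 51-state trajectory along with the number of segments generated. Segments left unused in the store when the run ends represent the speculative work that buys the parallelism.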

Danny Perez, physicist in the Theoretical Division at Los Alamos National Lab, leads ECP’s EXAALT application development effort.

Another major focus of the project was learning how to port the team’s models efficiently to GPU-based machines. Early in the project, the team observed that as the code ran on increasingly advanced machines using GPUs, the application performance, as demonstrated by the challenge problems, was declining relative to the peak performance of the hardware. “These models have lots of nested loops that you can unroll and unfold in many different ways. Finding the right mapping of the physics onto the hardware is tricky,” says Perez. “We developed clever ways to better exploit symmetry and memory and to manipulate the loops to maximize performance.”

One of those tricks was to rewrite from scratch a fundamental physical model called SNAP (Spectral Neighbor Analysis Potential), which uses ML techniques trained on high-accuracy quantum calculations to accurately predict the underlying physics of material behavior. Performance experts at the National Energy Research Scientific Computing Center and NVIDIA were brought in to identify areas for improvement in the SNAP kernel and help the team implement optimization strategies that led to an initial 25x speedup in the code. Fast forward to early 2023, and the team’s enhanced EXAALT code is surpassing all expectations. A recent run using Oak Ridge National Laboratory’s Frontier supercomputer, DOE’s first exascale machine, returned exceptional results. Perez says, “We project more than a 500x speedup when we extrapolate our results to the machine’s full capability, almost a factor of 10 higher than our target.”

An Unexpected Bonus

With the EXAALT code showing increasingly strong scaling and performance, the team has turned its attention to building a model-generation capability based on the ML models used to run the simulations. “We want to show how this tool can drastically speed up the whole scientific workflow from picking a system, to obtaining a model, to performing the run,” explains Perez. “Developing the specific machine-learning models that drive the simulations is time consuming and tedious work. We discovered that the ML infrastructure we were using to generate the models could also automate the entire workflow.”

Rather than relying on an expert’s time and knowledge to define a small data set that contains the right amount of information to include in a model, the team’s approach allows the machine to figure out the right “ingredients.” Perez adds, “We decided that instead of guessing what will happen in a simulation, we would try to capture everything that’s physically sensible. We wanted the most diverse data set possible so that in a simulation we would never encounter a configuration that’s unlike anything used in our training data, but this requires generating a massive amount of data. So, we framed this as an optimization problem where we can quantify the diversity of the data set and create new configurations without generating redundant information.”
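One simple way to picture selecting a diverse, non-redundant data set is greedy farthest-point selection over descriptor vectors. This is a swapped-in, generic technique used only for illustration; the article does not say how the team quantifies diversity or formulates its optimization problem. The sketch assumes each atomic configuration has already been reduced to a numeric descriptor vector, and the function name and sample data are hypothetical.

```python
import math


def farthest_point_selection(descriptors, k):
    """Greedily pick k descriptor vectors that are far apart from one another."""
    if not 0 < k <= len(descriptors):
        raise ValueError("k must be between 1 and the number of descriptors")
    chosen = [0]   # start from the first configuration (an arbitrary choice)
    # Distance from every candidate to its nearest already-chosen point.
    nearest = [math.dist(d, descriptors[0]) for d in descriptors]
    while len(chosen) < k:
        # The candidate farthest from everything chosen so far adds the most novelty.
        idx = max(range(len(descriptors)), key=nearest.__getitem__)
        chosen.append(idx)
        nearest = [min(n, math.dist(d, descriptors[idx]))
                   for n, d in zip(nearest, descriptors)]
    return chosen
```

Given a cluster of near-duplicate vectors and a few outliers, the selection skips the near-duplicates and picks one representative from each distinct region, which is the sense in which redundant configurations add little to the training set.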

Aidan Thompson, researcher in the Center for Computing Research at Sandia National Labs, leads the LAMMPS development effort.

The team has also been exploring ways to integrate quantum aspects, like charge transfer, into the ML models to create an intermediate model that would have features of both. “We have demonstrated an integrated capability where you can generate these configurations, do the quantum calculations, and train the potentials all inside one big run. Between now and the end of ECP, we want to transition this prototype into a robust, production-quality version of the machine-learning framework,” says Perez. In addition, the team is looking to make MD simulations more cost-efficient. Since MD algorithms resolve each atom in a system, the computational cost of running larger systems with more sophisticated codes could become prohibitive. He adds, “We are working with a world expert to develop methodologies on the machine-learning side so that we can obtain the extra physics at a reasonable price.”

Taking the Long View

The notable progress the EXAALT team has made over the last seven years builds on decades of MD code development. Aidan Thompson, the leader of LAMMPS development at Sandia National Laboratories, says, “The creation of the EXAALT framework and the arrival of exascale computing resources are game-changing events for LAMMPS, allowing users for the first time the freedom to select the accuracy, length, timescale, and chemical composition appropriate to their particular science applications, rather than being restricted to certain niche areas.”

The work is also a testament to the ECP research paradigm. “Typically, sponsors want the scientific potential up front, and the capability advancements needed to make that possible are considered afterward. With ECP, the capability was at the forefront, so it gave us the opportunity to focus on the long view of code development. We had time to decide what features we needed and to optimize the code as aggressively as we could to reach the necessary performance,” states Perez. He is also quick to point out that the ability to work across the DOE complex as part of a multidisciplinary team was paramount to EXAALT’s success. He notes, “This work would not have been possible without this integrated team that brings together the skills of physics, methods, machine-learning, and performance experts. This whole ecosystem of people all pushing in the same direction at the same time gave the project momentum and enabled us to meet and exceed our performance goals.”

The team will continue to test EXAALT’s performance and ensure scalability on Frontier and other future exascale machines. Through demonstration of the challenge problems, the team has shown that EXAALT can be applied to issues that directly impact the national security side of DOE’s mission space, but the code’s relevance extends beyond nuclear energy. Perez says, “Our goal is that users will be able to use EXAALT on exascale machines to run MD simulations directly in the conditions relevant to their applications by giving them access to the entire accuracy, length, and time space.” In addition, the team’s ML-based MD computational workflow could cut development time of new materials from decades to just a few years by using computer simulations almost exclusively. The ability to achieve atomistic materials predictions at the engineering scale would indeed be an MD revolution.