If you agree that generative AI signifies a new chapter in IT, then you may also agree there’s a major storm gathering among GPU accelerator chip vendors vying for position in the GenAI market. It’s a competition with the potential to roil not only the microprocessor sector but also the technology industry overall. We’re talking about AMD and the emergence of its MI300 GPU product line.

On the long and winding road of AMD corporate history, 2017 was a red-letter year. That’s when the company unveiled its EPYC CPUs and declared price-performance superiority over Intel in the x86 data center chip market. AMD then proceeded to do what many thought impossible. In each of the past six years, the 50-year-old upstart has cut into what had been Intel’s 95 percent-plus market share.

Led by AMD Chair and CEO Lisa Su, it’s one of the great corporate turnaround stories, and it’s still unfolding. As reported in a March 2023 article in Hardware Times, “In the data center CPU market (in 2022), AMD was by far the most successful chipmaker, with a yearly (revenue) growth of 62 percent… The global data center CPU market’s revenue contracted by 4.4 percent YoY in 2022. AMD’s design wins consolidated its market share to 20 percent, nearly twice as much as the year before. Consequently, Intel lost 10 points as its data center market dropped to an all-time low of 70.77 percent.”

Within the HPC sector, AMD is the hot CPU vendor. EPYC-powered systems crowd the high end of the TOP500 list of the world’s most powerful supercomputers: four of the top 10 systems and 13 of the top 25 use AMD CPUs, including no. 1 (which joined the list last year; more on this system below) and no. 3 (new to the list this past June; see below).

Other leading Top500 systems powered by AMD EPYCs include several Microsoft Azure systems (Explorer, Voyager and Pioneer), along with the “KT” NVIDIA DGX SuperPOD system.

As AMD mounts its challenge in the GPU market with an expanding accelerator product line, it’s important to note that AMD already can boast GPU proof points in the supercomputing sector.

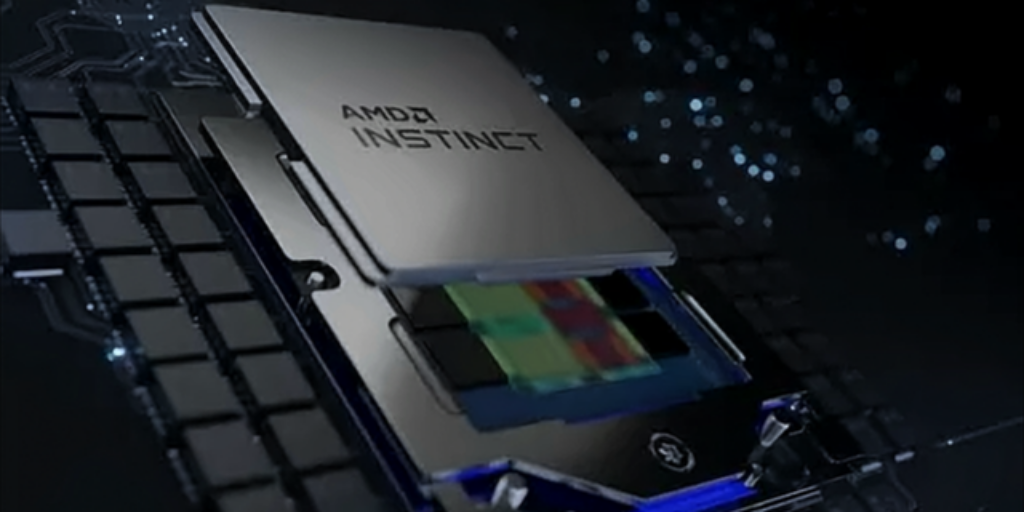

The Frontier supercomputer at Oak Ridge National Laboratory, powered by 3rd Gen AMD EPYC CPUs and AMD Instinct MI250X GPUs, has held the no. 1 position on the Top500 list of the world’s most powerful systems since May 2022. It is the first system recognized by the Top500 to break the exascale (a billion billion calculations per second) performance milestone.

The no. 3 most powerful system on the Top500, LUMI, housed at CSC–IT Center for Science Ltd. in Kajaani, Finland, also is powered by both AMD EPYC CPUs and AMD Instinct MI250X GPUs, delivering 309 PFLOPS.

Another exascale-class system, “El Capitan,” now being installed at Lawrence Livermore National Laboratory, will be powered by EPYC CPUs and by the next-generation AMD Instinct™ accelerator, which includes three AMD CDNA™ GPU chiplets and one Genoa Zen-4 based Core Complex Die (CCD) chiplet. That system is expected to be recognized as the world’s most powerful supercomputer upon its acceptance by Livermore.

AMD’s exascale success story extends beyond hardware throughput. Guided by the Department of Energy’s Exascale Computing Project (ECP), the Frontier project delivers on what ECP called a “capable exascale” mission – i.e., development of a system with a built-out exascale hardware and software ecosystem. That means a usable, stable machine able to leverage its compute resources (74 HPE Cray EX supercomputer cabinets with more than 9,400 nodes and 90 miles of networking cables) with a library of dozens of ported and optimized scientific and AI applications.

The software lessons learned and skills developed by AMD are incorporated in the company’s ROCm software platform for GPU programming, which spans several domains.

ROCm is evolving to meet machine learning needs. The platform supports major ML frameworks like TensorFlow and PyTorch to help users accelerate AI workloads. From optimized MIOpen libraries to the MIVisionX computer vision and machine intelligence libraries, utilities and applications, AMD works with the open AI community to broaden the range of ML and deep learning workloads that can take advantage of accelerated compute.

Getting back to AMD AI-optimized hardware – later this year, AMD will expand its MI300 product line with the release of the MI300X accelerator, built with GPU-only chiplets, offering 153 billion transistors and 192GB of HBM3 memory. At the AMD Data Center & AI Technology Premiere event in San Francisco in June, Su said the chip’s memory capacity allows it to run – on its own – Falcon-40B, a generative AI large language model with 40 billion parameters.
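
A rough back-of-the-envelope calculation shows why memory capacity matters for a model of this size. The sketch below assumes 16-bit weights (2 bytes per parameter) and counts only the weights, ignoring the KV cache, activations and runtime overhead, so real deployments need more headroom. The function names and the 180-billion-parameter example are illustrative assumptions, not part of any AMD or ROCm API.

```python
import math


def weight_memory_gb(params_billion: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes).

    Assumption: every parameter is stored at the same precision
    (2 bytes = FP16/BF16 by default).
    """
    # (params_billion * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return float(params_billion * bytes_per_param)


def min_accelerators(params_billion: float, capacity_gb: float,
                     bytes_per_param: int = 2) -> int:
    """Lower bound on accelerators needed just to hold the weights."""
    return math.ceil(weight_memory_gb(params_billion, bytes_per_param) / capacity_gb)


if __name__ == "__main__":
    # 40 billion parameters at 2 bytes each, against 192 GB of memory.
    print(f"Weights for a 40B model: {weight_memory_gb(40):.0f} GB")
    print(f"Accelerators needed at 192 GB each: {min_accelerators(40, 192)}")
    # Hypothetical larger model, to show how the count grows with size.
    print(f"Accelerators for a 180B model: {min_accelerators(180, 192)}")
```

Under these assumptions the weights of a 40-billion-parameter model take about 80 GB, which fits within a single 192GB device with room left for working memory.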

So how does the MI300X stack up against the NVIDIA H100 GPU for the AI market?

During a breakout session at the event, we asked Brad McCredie, corporate vice president, GPU Platforms at AMD, how the company plans to compete with NVIDIA in the GPU sector. While AMD is not yet releasing MI300X pricing details, his response emphasized TCO (total cost of ownership) advantages.

“We believe that especially around AI inferencing, we’re going to have a very nice dollar-per-dollar for inference advantage,” McCredie said. The key to that advantage, he said, is the MI300X’s memory and bandwidth.

“We added the extra memory, and that’s really important, especially when you’re doing the inferencing,” he said. “It’s very much memory gated, both on capacity as well as on bandwidth. And this is the largest memory capacity and the largest memory bandwidth in the industry. So as these models grow, you’ll need fewer GPUs to do your inferencing. You’ll be hearing more about this, that the dollar per inference is very efficient here. We’ll have a significant TCO advantage.”

McCredie said the AMD TCO benefits are enhanced by the openness and ease of deployment fostered by ROCm.

To further its open strategy, AMD last month joined the Hardware Partner Program of Hugging Face, a hub of open source models for natural language processing, computer vision and other AI-centric fields. AMD and Hugging Face work together to deliver state-of-the-art transformer performance on AMD CPUs and GPUs.

“The selection of deep learning hardware has been limited for years, and prices and supply are growing concerns,” Hugging Face said in its announcement. “This new partnership will do more than match the competition and help alleviate market dynamics: it should also set new cost-performance standards.”

Taken all together, the GPU market stakes couldn’t be higher. In her San Francisco keynote, Su said AI represents the most significant strategic opportunity for the company. AMD expects the data center AI accelerator market alone to grow from about $30 billion this year to $150 billion by 2027, a CAGR of 50 percent.
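
The growth figure can be checked with the standard compound annual growth rate formula. This is a minimal sketch, assuming “this year” is 2023, so the forecast spans four compounding years; the function name `cagr` is ours, for illustration only.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Assumption: $30B in 2023 growing to $150B in 2027 (four years).
    rate = cagr(30, 150, 4)
    print(f"Implied CAGR: {rate:.1%}")
```

On those assumptions the implied rate comes out just under 50 percent, consistent with the figure Su cited.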

Let the competition begin.