Over at the VMware CTO Office, Josh Simons writes that HPC efforts are stepping up at the company with new staffing and some exciting InfiniBand performance improvements that could help make virtualization a widespread technology for high performance computing.

This spring we installed a four-node InfiniBand HPC cluster in our lab in Cambridge, MA. The system includes four HP DL380p Gen8 servers, each with 128 GB memory and two Intel 3.0GHz E5-2667 eight-core processors and Mellanox ConnectX3 cards that support both FDR (56 Gb/s) InfiniBand and 40 Gb/s RoCE. The nodes are connected with a Mellanox MSX6012F-1BFS 12-port switch. Na Zhang, PhD student at Stony Brook University, did an internship with us this summer. She accomplished a prodigious amount of performance tuning and benchmarking, looking at a range of benchmarks and full applications. We have lots of performance data to share, including IB, RoCE, and SR-IOV results over a range of configurations.

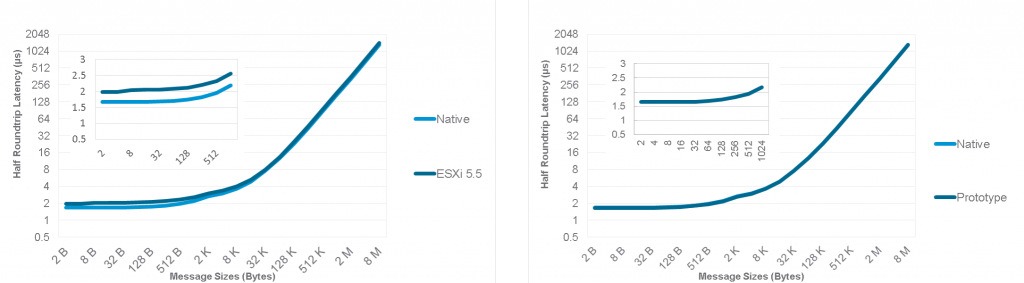

FDR IB latencies: native, ESX 5.5u1, ESX prototype

This year at SC14, Josh and the VMware team will have a demo station in the EMC booth, where they will show a prototype of their approach to self-provisioning of virtual HPC clusters in a vRA private cloud and demonstrate the use of SR-IOV to connect multiple VMs to a remote file system via InfiniBand.

Read the Full Story over at Josh Simons’ Blog * Download the insideHPC Guide to Virtualization

Virtualization will really open doors for HPC Cloud adoption.