Sponsored Post

Of the varied approaches to liquid cooling, most remain technical curiosities that have yet to see real-world adoption to any significant degree. In contrast, both Asetek RackCDU D2C™ (Direct-to-Chip) and Internal Loop Liquid Cooling are seeing accelerating adoption by OEMs and end users alike. Asetek (booth #1709) is highlighting this adoption by OEMs and HPC sites at SC15.

OEMs have focused on two Asetek solutions that were designed with the needs of real data center operations in mind: RackCDU D2C and Internal Loop.

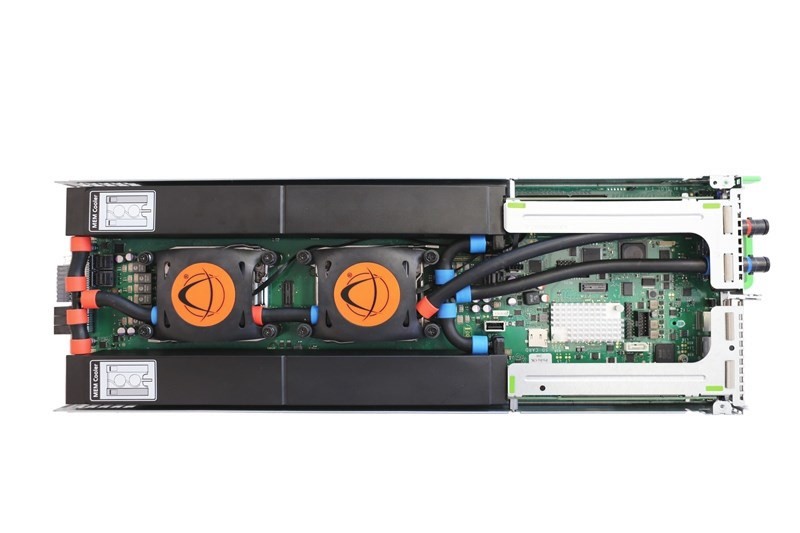

In the RackCDU D2C system, coolers replace the CPU/GPU/memory heat sinks in the server nodes and remove heat with hot water while also providing low-PSI redundant pumping. The removed heat is transferred to facilities water via a CDU extension on the back of each rack. A video detailing how RackCDU D2C works can be seen below.

Internal Loop is liquid-enhanced air cooling for server nodes that replaces air coolers with sealed-loop liquid coolers. Liquid-to-air heat exchangers within each node enable the servers to use the highest performing/overclocked CPUs and GPUs without any changes to the existing data center facilities.

Asetek OEMs CIARA, Cray, Fujitsu, FORMAT and Penguin all have customers today using Asetek liquid cooling featuring RackCDU and Internal Loop.

Penguin Computing (Penguin) uses RackCDU D2C™ technology in its Tundra™ Extreme Scale (ES) HPC server product line. Along with the recent OEM announcement, Penguin and the National Nuclear Security Administration’s (NNSA) Lawrence Livermore National Laboratory announced that the Asetek-enabled Tundra system had been selected for the tri-laboratory Commodity Technology Systems programs (CTS-1) at Los Alamos, Sandia and Lawrence Livermore national laboratories. The resulting deployment of these HPC clusters will be one of the world’s largest Open Compute-based installations.

In July, Fujitsu announced Cool-Central™ Liquid Cooling for PRIMERGY™ Scale-out Servers. The CX400 M1 and its HPC cluster nodes use Asetek RackCDU D2C. The PRIMERGY CX400 M1 and its cluster nodes can increase data center density by up to five times and reduce cooling costs by up to 50%. Shortly after SC15, Fujitsu is expected to make public a major HPC installation using Cool-Central.

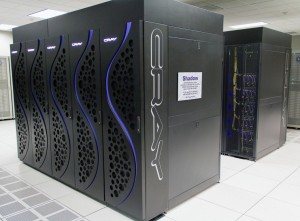

Cray™ announced a second generation of liquid-cooled cluster supercomputers using Asetek D2C, the Cray CS400-LC. Like the CS300-LC, the CS400-LC allows for hot water liquid cooling of CPUs, memory and GPUs. One of the most significant new Cray sites is at Sandia National Laboratories, with the liquid-cooled, 600-teraflop, 1,848-node Cray CS300-LC cluster supercomputer named Sky Bridge.

Expanding footprints at existing sites are of particular interest as they reflect end user satisfaction. Mississippi State University (MSU) installed a Cray CS300-LC cluster in February 2014 and added a second cluster in December. The University of Tromsø in Norway has been running production HPC workloads since January 2014 using D2C with HP SL230 nodes. This led to a build-out of the Stallo system to over 6,500 liquid-cooled cores. Meanwhile, the National Renewable Energy Lab (NREL) has been operating with 100% uptime with D2C since June 2013 and has since added more RackCDU D2C-cooled racks.

FORMAT Sp., a comprehensive provider of advanced ICT solutions in Poland, recently announced the installation of a RackCDU D2C-based Expert Server HPC cluster at Maria Curie-Skłodowska University (UMCS) using dual Intel CPU nodes with both CPU and memory cooling.

Of particular note is CIARA's announcement, at the 2015 Chicago Trading Show, of the ORION HF320D, the first 2U 2-node High Frequency (HF) server based on Asetek liquid cooling technology. CIARA is the leader in servers for high-frequency trading and looks to liquid cooling to achieve the highest throughput for the demands of that market. Using Internal Loop has helped CIARA reach a new level of ultra-high performance, speed and low latency while doubling the density of its previous ORION HF210 and 320 servers.

Asetek is targeting the need for higher-wattage nodes in OEM designs that will use next-generation CPUs and GPUs. On display at SC15 is a liquid cooler for Intel™’s forthcoming next-generation Xeon Phi (Knights Landing). Also on display are liquid coolers for Power processors, designed for use in overclocking OpenPOWER™ systems (Asetek is a member of OpenPOWER), as well as solutions in use today for Nvidia GPUs.

Asetek can be expected to continue to address the key needs felt by HPC sites, data centers and OEMs that will increasingly require liquid cooling. And if recent history is an indicator, Asetek will continue to distance itself from the other liquid cooling approaches with OEMs and a growing list of major HPC data center installations. To learn more about Asetek RackCDU D2C and Internal Loop Liquid Cooling, visit Asetek at booth #1709 at SC15 in Austin, Texas.