Lawrence Livermore National Laboratory (LLNL) and AI company Cerebras Systems today announced the integration of the 1.2-trillion-transistor Cerebras Wafer Scale Engine (WSE) chip into the National Nuclear Security Administration’s (NNSA) 23-petaflop Lassen supercomputer.

The pairing of Lassen’s simulation capability with Cerebras’ machine learning compute system, along with the CS-1 accelerator system that houses the chip, makes LLNL “the first institution to integrate the AI platform with a large-scale supercomputer and creates a radically new type of computing solution, enabling researchers to investigate novel approaches to predictive modeling,” according to the lab. Work on initial AI models began last month.

Lassen is the “unclassified companion,” according to LLNL, to the IBM/Nvidia system Sierra (ranked no. 3 on the Top500 list of the world’s most powerful supercomputers) and is itself no. 14 on the Top500. Funded by the NNSA’s Advanced Simulation and Computing program, the platform aims to accelerate solutions for Department of Energy and NNSA national security mission applications.

Early applications include fusion implosion experiments performed at the National Ignition Facility, materials science and rapid design of new prescription drugs for COVID-19 and cancer through the Accelerating Therapeutic Opportunities in Medicine (ATOM) project.

“The addition of an AI component to a world-class supercomputer like Lassen will allow our scientists and physicists to leverage the large investments in AI being made by industry to improve both the accuracy of our simulations as well as the productivity of the end user in solving mission critical problems,” said Thuc Hoang, acting director of the ASC program. “We are excited to be collaborating with Cerebras in putting this pioneering technology to work for NNSA.”

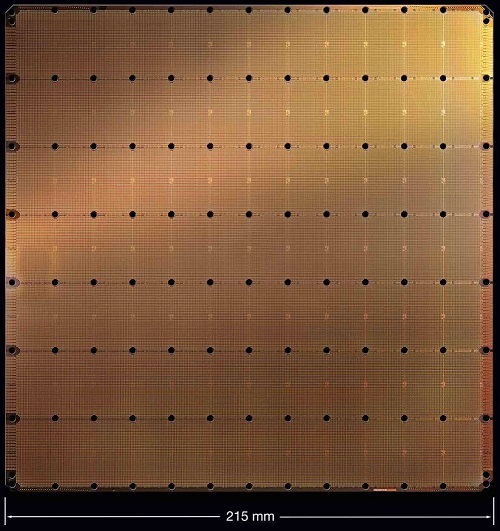

Billed as the “world’s fastest AI supercomputer,” the Cerebras CS-1 system runs on the WSE chip, which consists of 400,000 AI-optimized cores, 18 gigabytes of on-chip memory and 100 petabits per second of on-chip network bandwidth.

Cerebras Wafer Scale Engine

LLNL said the Lassen upgrade marks the first time the lab has had an HPC resource that includes AI-specific hardware, creating “the world’s first computer system designed for ‘cognitive simulation’ (CogSim) – a term used by LLNL scientists to describe the combination of traditional HPC simulations with AI.”

Computer scientists at Livermore said the system’s capabilities will enable them to skip unnecessary processing in workflows and accelerate deep learning neural networks. Wafer-scale integration will ease the communication limits on scaling up neural network training and reduce how often researchers need to slice and dice problems into smaller jobs, said LLNL computer scientist Brian Van Essen, who heads the Lab’s efforts in large-scale neural networks.

“Either we can do scientific exploration in a shorter amount of compute time, or we could go more in-depth in the areas where the science is less certain, using more compute time but getting a better answer,” Van Essen said. “Having this system be monitored by the cognitive framework can allow us to improve the efficiency of our physicists. Rather than babysitting the simulations to look for errors, they can hopefully start to look more into the scientific questions that are being illuminated by this type of system.”

A research team led by Van Essen has selected two AI models from a Laboratory Director’s Initiative on Cognitive Simulation to run on the CS-1 system. LLNL researchers said that their preliminary work focuses on learning from up to five billion simulated laser implosion images for optimization of fusion targets used in experiments at the National Ignition Facility (NIF), with the goal of reaching high energy output and robust fusion implosions for stockpile stewardship applications.

LLNL physicist and Cognitive Simulation lead Brian Spears said NIF scientists will use the CS-1 to embed dramatically faster AI-based physics models directly inside Lassen’s simulation codes, resulting in significantly more detailed physics in less time. Additionally, the expanded compute load will allow scientists to merge enormous simulation volume with hyper-precision experimental data, Spears said.

“Pairing the AI power of the CS-1 with the precision simulation of Lassen creates a CogSim computer that kicks open new doors for inertial confinement fusion (ICF) experiments at the National Ignition Facility,” Spears said. “Now, we can combine billions of simulated images with NIF’s amazing X-ray and neutron camera output to build improved predictions of future fusion designs. And, we’re pretty sure we’re just scratching the surface – giving ourselves a sneak peek at what future AI-accelerated HPC platforms can deliver and what we will demand of them.”

To ensure a successful integration, LLNL and Cerebras are engaging in an Artificial Intelligence Center of Excellence (AICoE) over the coming years to determine the optimal parameters that will allow cognitive simulation to work with the Lab’s workloads. Depending on the results, LLNL could add more CS-1 systems, both to Lassen and other supercomputing platforms.

“This is why we’re here, to do this kind of science,” Van Essen said. “I’m a computer architect by training, so the opportunity to build this kind of system and be the first one to deploy these things at this scale is absolutely invigorating. Integrating and coupling it into a system like Lassen gives us a really unique opportunity to be the first to explore this kind of framework.”