From left, Mike Heroux, Sandia National Lab; Sameer Shende, Univ. of Oregon; Todd Gamblin, Lawrence Livermore National Lab

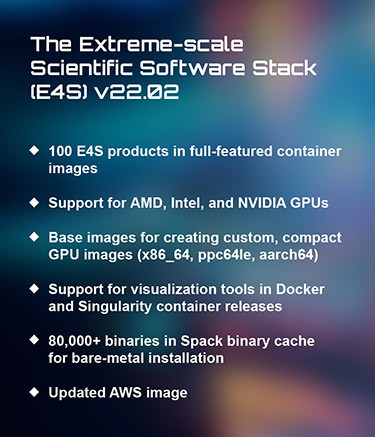

The Extreme-scale Scientific Software Stack (E4S), a high-performance computing (HPC) software ecosystem that continually grows a broad collection of software capabilities to meet emerging scientific needs in the US Department of Energy (DOE) community, recently released version 22.02.

E4S, which began in the fall of 2018, is aimed at accelerating the development, deployment, and use of HPC software, thereby lowering the barriers for HPC users.

Spack serves as a meta-build tool to identify the software dependencies of the E4S products and enable the creation of a recursively built tree of products.
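To make the idea of a "recursively built tree" concrete, here is a minimal Python sketch. It is not Spack's actual concretizer, which also resolves versions, variants, compilers, and target architectures. The dependency graph and package names are hypothetical. A depth-first, post-order traversal ensures each dependency is built before anything that needs it, and a shared dependency is built only once.

```python
from typing import Dict, List

# Hypothetical dependency graph: each package maps to the packages it needs.
# These names are illustrative only; they are not real Spack packages.
DEPS: Dict[str, List[str]] = {
    "my-app": ["solver-lib", "io-lib"],
    "solver-lib": ["mpi", "blas"],
    "io-lib": ["mpi"],
    "mpi": [],
    "blas": [],
}


def build_order(root: str, deps: Dict[str, List[str]]) -> List[str]:
    """Return packages ordered so every dependency precedes its dependents.

    Uses a recursive depth-first (post-order) traversal, so leaf packages come
    first and a shared dependency such as "mpi" appears only once.
    """
    order: List[str] = []
    done = set()      # packages already placed in `order`
    visiting = set()  # packages on the current recursion path (cycle check)

    def visit(pkg: str) -> None:
        if pkg in done:
            return
        if pkg in visiting:
            raise ValueError(f"dependency cycle involving {pkg!r}")
        visiting.add(pkg)
        for dep in deps.get(pkg, []):
            visit(dep)
        visiting.remove(pkg)
        done.add(pkg)
        order.append(pkg)

    visit(root)
    return order


if __name__ == "__main__":
    # prints ['mpi', 'blas', 'solver-lib', 'io-lib', 'my-app']
    print(build_order("my-app", DEPS))
```

Post-order traversal is used because a package can only be compiled once everything beneath it in the tree is installed.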

“E4S provides Spack recipes for installing software on bare-metal systems aided by a Spack binary build cache,” said Sameer Shende, ECP E4S project lead and research associate professor and the director of the Performance Research Lab, OACISS, University of Oregon. “It provides a range of containers that span Spack-enabled base images with support for NVIDIA GPUs on x86_64, ppc64le, and aarch64 architectures. On x86_64, GPUs from all three vendors—NVIDIA, Intel, and AMD—are supported. This allows a user to build custom, compact container images starting from E4S base images. A full-featured E4S image that supports visualization tools such as VisIt, ParaView, and TAU has 100 E4S products installed using Spack and includes GPU runtimes including CUDA, NVHPC, ROCm, and oneAPI as well as AI/ML packages such as TensorFlow and PyTorch that support GPUs. This gives developers a range of platform-specific options to deploy their HPC and AI/ML applications on GPUs easily.”

The two major contributors to E4S are the ECP Software Technology (ST) focus area and the Co-design area within the ECP Application Development portfolio. ST products tend to embody more established approaches for making math libraries and tools available to, and usable by, many different teams that develop, deploy, and run scientific applications on HPC platforms. ECP Co-design focuses on emerging computational patterns that capture functionality many different applications can use.

E4S packages ST and Co-design products together with other software, such as the PyTorch machine learning framework, the TensorFlow open-source platform for machine learning, and Horovod, a distributed deep learning training framework that sits on top of the other two.

“Our teams within Software Technology and within Co-design are working on new features for the exascale computing platforms,” Heroux said. “Now, that work is being done in very specific environments where we’re on the early-access systems. We’re working shoulder to shoulder with our Application Development teams to develop new capabilities. That stuff is not in E4S today; it will be, but it’s not there yet.”

Some capabilities that E4S teams have developed in recent months are currently available only in the release branches of the individual product development teams.

For example, a new capability in the hypre numerical software package, used by the ECP ExaWind project for wind farm modeling and simulation, is currently available only in hypre’s development version and has not yet reached a hypre release. Such a capability is added to the package’s GitHub repository after bug fixes and other release-oriented tasks are completed, and four to six months later, the E4S project adds it to the E4S portfolio.

“You can think of E4S as being the final delivery vehicle of hardened and truly robust capabilities that are provided by ECP as reusable libraries and tools,” Heroux said.

Designed to address the latest scientific challenges, E4S is tested for interoperability and portability across multiple computing architectures, and it supports GPUs from NVIDIA, AMD, and Intel in a single distribution. E4S will increasingly include artificial intelligence (AI) tools and libraries tailored specifically to scientific problems.

Scientific edge computing, which involves large volumes of instrument data that must be managed and then assimilated for deeper scientific understanding, will also become part of E4S, as will quantum computing as that technology matures. Scientific software products, libraries and tools alike, will be necessary to access the fundamental capabilities afforded by quantum devices.

Users working on computing systems that lack the latest hardware can also benefit from E4S. For example, the most hardened and robust versions of the E4S libraries and tools are available today and useful on clusters with CPU nodes or on laptops. Many E4S developers use a laptop as their primary software development environment, compiling and running their software on that machine.

“ECP has provided us with an ambitious set of goals that required us to work together across labs, universities, industry partners to deliver software to exascale applications and to the broader scientific community,” Heroux said. “It is a big enough and large enough—and I would have to say, gnarly enough—problem that forced us and incentivized us to work together in this kind of portfolio way. We’ve been developing as a DOE scientific community lots of these products; these are not generally brand-new products. They’re brand-new capabilities that are targeting the exascale systems.”

The enduring legacy of the E4S project will have two facets: its usability across different GPU platforms and its portfolio approach.

“Because we’ve had to deliver these products in a very aggressive timeline, we have to work together to make sure that we’re not introducing bugs as we create new features and doing that as a portfolio is far more effective and efficient than having each individual product team go to these early-access systems, go to the exascale systems independently and having to go through that entire process all on their own,” Heroux said. “As a collective portfolio—and even at a finer scale, the SDK [software development kit] level of integration, where we take things like the math libraries together as a group—these two extra layers of collaboration allow us to accelerate and make more robust the collection of software that we’re providing.”

E4S’s value to scientists lies in its role as a community platform. Users can build their applications on top of E4S and tap into its broad set of capabilities, and contributors to E4S can make their own libraries or tools available to their user communities. E4S is also part of the broader Spack ecosystem, so its packages can be used in conjunction with more than 6,000 other software packages in Spack.

The 100 software packages in E4S rely on over 400 dependencies from Spack.

“Spack benefits greatly from the quality and hardening efforts of the E4S team—they ensure that a core set of very complex packages are integrated and work reliably for Spack users,” said Todd Gamblin, Spack lead. “We also use the E4S packages to test Spack itself. With every change to Spack, we run tests to ensure that E4S builds continue to work. The two efforts are highly leveraged—the ongoing work of Spack’s nearly 1,000 contributors ensures that E4S’s dependencies are up to date and work reliably, and the E4S team ensures that the latest ECP software is available to the broader community.”

Heroux elaborated on the uniqueness of E4S.

“It’s a good set of tools, lots of functionality that you can’t find in a similar collection anywhere else on the planet,” Heroux said. “It provides some very nice build capabilities via Spack, and in fact, if you start using our stuff and you want to create what’s called a build cache, you can also reduce the amount of time you spend building the libraries and tools in E4S because we’ll keep a cached binary version of a previous build, and you can grab that rather than having to rebuild it. We see build times going down from, say, a day-length type of run to a few hours. … We also provide E4S as a container, and it supports all of the GPU architectures that we’re targeting in these containers. Plus, E4S can be installed on cloud nodes—for example, from AWS, Amazon’s cloud—and we’re working with other cloud providers so that you can rely on E4S being available; it becomes part of the ecosystem, rather than having to build it yourself all the time.”
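The build-cache savings Heroux describes can be illustrated with a simplified Python sketch. It is loosely modeled on the idea that Spack identifies each concrete build by a hash, but it is not Spack's implementation. The package descriptions, the `spec_hash` and `install` helpers, and the dictionary standing in for a binary cache are all hypothetical. Because each hash covers a package and its dependencies' hashes, a cached binary is reused only when the entire subtree matches.

```python
import hashlib
import json
from typing import Dict, List

# Hypothetical package descriptions: name -> build configuration.
# A real Spack spec carries far more detail (compiler, target, variants, ...).
SPECS: Dict[str, dict] = {
    "mpi": {"version": "1.0", "deps": []},
    "blas": {"version": "2.1", "deps": []},
    "solver-lib": {"version": "3.2", "deps": ["mpi", "blas"]},
}


def spec_hash(name: str, specs: Dict[str, dict]) -> str:
    """Hash a package together with the hashes of its dependencies.

    Changing any dependency changes the parent's hash as well, so stale
    binaries are never reused for a modified dependency tree.
    """
    spec = specs[name]
    payload = {
        "name": name,
        "version": spec["version"],
        "deps": sorted(spec_hash(d, specs) for d in spec["deps"]),
    }
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()


def install(name: str, specs: Dict[str, dict], cache: Dict[str, str], log: List[str]) -> None:
    """Install `name` after its dependencies, reusing cached binaries on a hash match."""
    for dep in specs[name]["deps"]:
        install(dep, specs, cache, log)
    key = spec_hash(name, specs)
    if key in cache:
        log.append(f"reuse {name}")  # cache hit: fetch the prebuilt binary
    else:
        cache[key] = f"binary-of-{name}"  # stand-in for an expensive source build
        log.append(f"build {name}")


if __name__ == "__main__":
    cache: Dict[str, str] = {}
    first: List[str] = []
    second: List[str] = []
    install("solver-lib", SPECS, cache, first)
    install("solver-lib", SPECS, cache, second)
    print(first)   # ['build mpi', 'build blas', 'build solver-lib']
    print(second)  # ['reuse mpi', 'reuse blas', 'reuse solver-lib']
```

In this toy model, bumping only the `blas` version would rebuild `blas` and `solver-lib` while still reusing `mpi`, which is why a shared cache can cut most of the rebuild time.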

Related Links

- ECP Software Ecosystem and Delivery

- E4S website

- E4S version 22.02 release notes

- Revisiting the E4S Software Ecosystem Upon Release of Version 22.02

- Mike Heroux’s bio

- Sameer Shende’s bio

- Todd Gamblin’s bio

Source: Scott Gibson, Exascale Computing Project