[SPONSORED GUEST ARTICLE] LUMI is a model for both world-class supercomputing and sustainability. It also embodies Europe’s rise on the global HPC scene in recent years. The AMD-powered, HPE-built system, ranked no. 3 on the new TOP500 list of the world’s most powerful supercomputers, also ranks no. 7 on the GREEN500 list of the most energy efficient HPC systems.

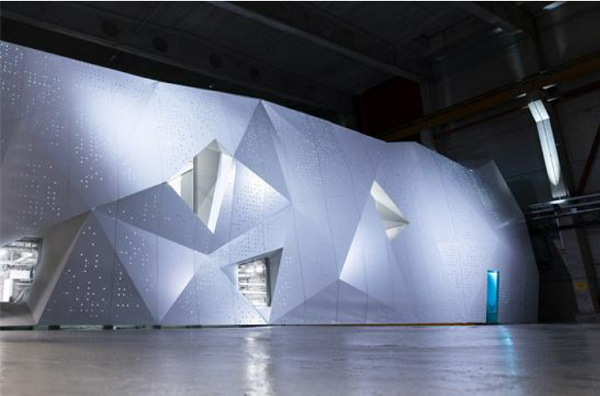

LUMI (Large Unified Modern Infrastructure) is in the vanguard of leadership-class supercomputers that are also eco-friendly. Sustainability runs through both the system’s design and its use: LUMI’s workload roster includes extensive climate research (more on this below), and the system is housed at one of the world’s most energy efficient data centers, CSC – IT Center for Science Ltd., in Kajaani, Finland. Earlier this year, CSC was recognized as Green Data Centre of the Year at the 2023 Data Centre World Awards.

CSC draws electrical power from carbon-neutral hydro generators and free cooling is available year-round in Kajaani, located 160 miles from the Arctic Circle. LUMI’s waste heat produces approximately 20 percent of the heat for houses and buildings in the Kajaani area, reducing the city’s carbon emissions by an estimated 12,400 metric tons per year.

Yet LUMI’s environmental consciousness does not come at the price of diminished throughput. The HPE Cray EX supercomputer system has sustained computing power of 429 petaFLOPS (see LUMI’s TOP500 system profile). That’s the equivalent of the combined performance of 1.5 million laptop computers that, if stacked on top of each other, would stand more than 23 kilometers (14 miles) high. LUMI has a storage capacity of 118 petabytes and an aggregated I/O bandwidth of 2 terabytes per second.
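The laptop comparison above implies a per-laptop performance and thickness that the article does not state. The following is a minimal sketch that derives both from the quoted figures; the function names are illustrative, and the results are only as good as the rounded numbers in the text.

```python
# Figures quoted in the article.
SYSTEM_PFLOPS = 429        # sustained performance, petaFLOPS
LAPTOP_COUNT = 1_500_000   # laptops in the comparison
STACK_HEIGHT_KM = 23       # height of the hypothetical stack, kilometers


def per_laptop_gflops(system_pflops: float, laptops: int) -> float:
    """Performance each laptop must deliver (1 PFLOPS = 1e6 GFLOPS)."""
    return system_pflops * 1e6 / laptops


def per_laptop_thickness_cm(height_km: float, laptops: int) -> float:
    """Thickness of each laptop in the stack (1 km = 1e5 cm)."""
    return height_km * 1e5 / laptops


gflops = per_laptop_gflops(SYSTEM_PFLOPS, LAPTOP_COUNT)
thickness = per_laptop_thickness_cm(STACK_HEIGHT_KM, LAPTOP_COUNT)
print(f"Implied per-laptop performance: {gflops:.0f} GFLOPS")
print(f"Implied per-laptop thickness:   {thickness:.2f} cm")
```

In other words, the comparison assumes laptops of roughly 286 GFLOPS each, about a centimeter and a half thick.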

The system consists of several partitions targeted for different use cases. The GPU partition consists of 2,560 nodes, each node with one 64-core optimized 3rd Gen AMD EPYC™ CPU and four AMD Instinct™ MI250X GPUs. Each GPU node features four 200-Gbit/s network interconnect cards for a total of 800 Gbit/s injection bandwidth.
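To make the scale of the GPU partition concrete, the sketch below multiplies out the per-node figures quoted above. The partition-wide bandwidth is a naive sum of per-node injection bandwidth, offered as an assumption for illustration only; it is not a measured bisection or application bandwidth.

```python
# Per-node figures quoted in the article for the GPU partition.
GPU_NODES = 2_560
GPUS_PER_NODE = 4
NICS_PER_NODE = 4
NIC_GBIT_S = 200  # bandwidth of one network interconnect card


def gpu_partition_summary(nodes: int, gpus_per_node: int,
                          nics_per_node: int, nic_gbit_s: int) -> dict:
    """Multiply out node-level figures to partition-level totals."""
    node_injection = nics_per_node * nic_gbit_s  # Gbit/s per node
    return {
        "total_gpus": nodes * gpus_per_node,
        "node_injection_gbit_s": node_injection,
        # Naive aggregate: every node injecting at full rate at once.
        "partition_injection_tbit_s": nodes * node_injection / 1000,
    }


summary = gpu_partition_summary(GPU_NODES, GPUS_PER_NODE,
                                NICS_PER_NODE, NIC_GBIT_S)
for name, value in summary.items():
    print(f"{name}: {value}")
```

With the article's numbers this gives 10,240 MI250X GPUs and 800 Gbit/s of injection bandwidth per node.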

Each MI250X GPU consists of two compute dies, each with 110 compute units, and each compute unit has 64 stream processors for a total of 14,080 stream processors. The MI250X GPU has 128 GB of HBM2e memory, delivering up to 3.2 TB/s of memory bandwidth.
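The stream processor total follows directly from the die and compute unit counts. The short sketch below reproduces it; the per-die memory and bandwidth figures assume the quoted totals are split evenly across the two dies, which the article does not state explicitly.

```python
# MI250X figures quoted in the article.
DIES_PER_GPU = 2
COMPUTE_UNITS_PER_DIE = 110
STREAM_PROCESSORS_PER_CU = 64
HBM_GB = 128            # total HBM2e capacity per GPU
HBM_TB_S = 3.2          # peak memory bandwidth per GPU


def stream_processors(dies: int, cus_per_die: int, sps_per_cu: int) -> int:
    """Total stream processors on one GPU."""
    return dies * cus_per_die * sps_per_cu


def per_die(total: float, dies: int) -> float:
    """Even split of a per-GPU resource across dies (an assumption)."""
    return total / dies


total_sps = stream_processors(DIES_PER_GPU, COMPUTE_UNITS_PER_DIE,
                              STREAM_PROCESSORS_PER_CU)
print(f"Stream processors per GPU: {total_sps:,}")          # 14,080
print(f"Memory per die (assumed even split): "
      f"{per_die(HBM_GB, DIES_PER_GPU):.0f} GB")
print(f"Bandwidth per die (assumed even split): "
      f"{per_die(HBM_TB_S, DIES_PER_GPU):.1f} TB/s")
```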

In addition, there is a partition (LUMI-C) using CPU-only nodes featuring 64-core 3rd Gen AMD EPYC CPUs, and between 256 GB and 1024 GB of memory. There are 1,536 dual-socket CPU nodes in total.

LUMI data center

LUMI’s high standing on the GREEN500 list is a direct reflection of the efficiency of AMD processors (for more on LUMI, including videos and testimonials, visit this page on the AMD web site). The Instinct MI250X GPU (500W), based on the 6nm AMD CDNA™ 2 architecture, offers 96 GFLOPS of peak theoretical FP64 performance per watt (MI200-32), and the EPYC CPU-powered servers reduce cost and energy consumption while saving physical space.
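The efficiency figure and the power rating together imply a peak FP64 throughput per GPU. The following sketch works that out from the two quoted numbers; it is a theoretical peak under the article's figures, not a measured result.

```python
# MI250X figures quoted in the article.
GFLOPS_PER_WATT = 96   # peak theoretical FP64 efficiency
GPU_POWER_W = 500      # GPU power rating


def peak_fp64_tflops(gflops_per_watt: float, watts: float) -> float:
    """Peak FP64 throughput implied by efficiency x power (in TFLOPS)."""
    return gflops_per_watt * watts / 1000  # 1 TFLOPS = 1000 GFLOPS


peak = peak_fp64_tflops(GFLOPS_PER_WATT, GPU_POWER_W)
print(f"Implied peak FP64 per MI250X: {peak:.0f} TFLOPS")  # 48 TFLOPS
```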

LUMI’s storage system consists of three components: an 8 petabyte all-flash Lustre system for short term fast access; a longer term 80 petabyte Lustre system based on mechanical hard drives; and for data sharing and project lifetime storage LUMI has 30 petabytes of Ceph-based storage.
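As a quick consistency check, the three storage tiers can be summed and compared with the 118 petabyte total quoted earlier in the article. The tier labels in this sketch are illustrative names, not official LUMI identifiers.

```python
# Storage tiers quoted in the article, in petabytes.
# The dictionary keys are illustrative labels only.
STORAGE_PB = {
    "all-flash Lustre": 8,
    "hard-drive Lustre": 80,
    "Ceph-based storage": 30,
}


def total_capacity_pb(tiers: dict) -> int:
    """Sum the capacity of all tiers."""
    return sum(tiers.values())


def capacity_shares(tiers: dict) -> dict:
    """Fraction of total capacity held by each tier."""
    total = total_capacity_pb(tiers)
    return {name: size / total for name, size in tiers.items()}


print(f"Total capacity: {total_capacity_pb(STORAGE_PB)} PB")  # 118 PB
for name, share in capacity_shares(STORAGE_PB).items():
    print(f"{name}: {share:.1%}")
```

The sum matches the 118 petabytes cited for the system as a whole, with the hard-drive Lustre tier holding roughly two-thirds of the capacity.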

The different compute and storage partitions are connected to the Cray Slingshot interconnect of 200 Gbit/s.

LUMI fills almost 400 square meters of space, which is about the size of two tennis courts. The system weighs nearly 150,000 kilograms (150 metric tons).

LUMI is among many AMD-powered systems on the latest TOP500 list. The November 2022 list includes 101 supercomputers utilizing AMD chips, a year-over-year jump from 73. The world’s no. 1 supercomputer, the Frontier exascale-class HPC system at Oak Ridge National Laboratory, is powered by AMD processors and AMD accelerators. Additionally, AMD powers 75 percent of the top 20 systems on the GREEN500 list.

On the software side, the AMD ROCm™ programming environment provides open source components developed and maintained by AMD. The ROCm stack contains accelerated scientific libraries, such as rocBLAS for BLAS functionality and rocFFT for FFTs, and includes compilers that support offloading through OpenMP directives. It also comes with HIP, AMD’s alternative to CUDA, and tools that simplify translating code from CUDA to HIP.

ROCm also has tools for debugging code running on AMD GPUs in the form of ROCgdb, AMD’s source-level debugger for Linux, which is based on the GNU Debugger (GDB). AMD also provides MIOpen, an open-source library of high-performance machine learning primitives.

LUMI also comes with the Cray Programming Environment (CPE) stack, including compilers and tools that help users port, debug and optimize for GPUs and conventional multi-core CPUs.

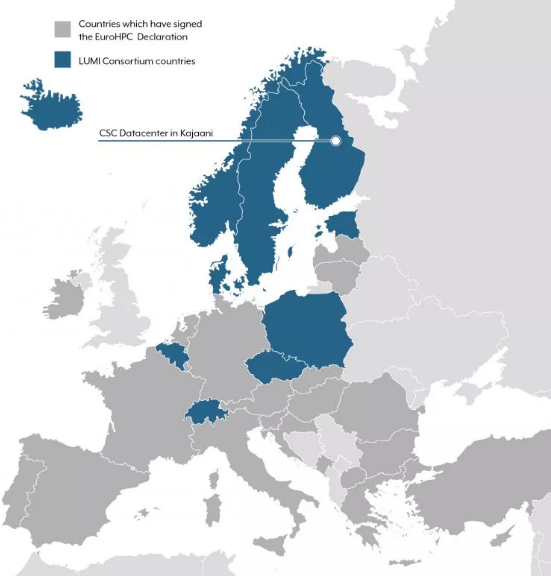

LUMI consortium countries and EuroHPC JU countries.

For workloads, LUMI is tasked with some of the most urgent climate-related problems. As part of the European Green Deal and European Digital Strategy, the system is used in the Destination Earth project (DestinE), which is funded by the EU’s Digital Europe Program. The project’s aim is to create a detailed model of the earth – a digital twin – that can be used to understand climate change and its impacts, including extreme weather phenomena such as floods and hurricanes. With LUMI, researchers can link the climate model to other models to understand the complex interplay between environmental processes and systems.

In medical research, LUMI is supporting researchers in an AI-driven computational pathology project called ComPatAI aimed at developing a digital pathology workflow to diagnose and grade cancer more accurately. More information can be found in this article. Other LUMI scientific workloads can be found here.

LUMI also is instrumental in the development of OLMo (Open Language Model), created by the Allen Institute for AI (AI2) for the AI research community and announced in May 2023. OLMo is an open generative language model that at 70 billion parameters will be comparable in scale to other state-of-the-art LLMs. It is expected in early 2024.

AI2 is developing OLMo in collaboration with AMD and CSC using the new GPU portion of the LUMI system. OLMo will offer an avenue for many AI researchers to work directly on language models for the first time. All elements of the OLMo project will be accessible – the data along with the code used to create the data. The model, the training code, the training curves and evaluation benchmarks will all be open-sourced. The goal: help guide the understanding and responsible development of language modeling technology.

LUMI is also supporting researchers working on the Finnish language model developed by the TurkuNLP research team. This 13-billion-parameter Generative Pre-trained Transformer 3 (GPT-3) model is the largest Finnish language model to date. Looking forward, members of the team involved in TurkuNLP – along with CSC and LUMI – are also part of the Green NLP project, which aims to make the training and use of language models more energy efficient. The goal is to create best practices for improving energy efficiency in the field of natural language processing.

LUMI is owned by the EuroHPC Joint Undertaking and hosted by the LUMI consortium, consisting of Finland, Belgium, the Czech Republic, Denmark, Estonia, Iceland, Norway, Poland, Sweden and Switzerland. Researchers from across Europe can apply for access to LUMI’s scientific computing resources.