Joan Koka writes that researchers at Argonne National Laboratory are paving the way for the creation of exascale supercomputers. The work is part of Argonne’s collaboration with the U.S. Department of Energy’s Exascale Computing Project (ECP).

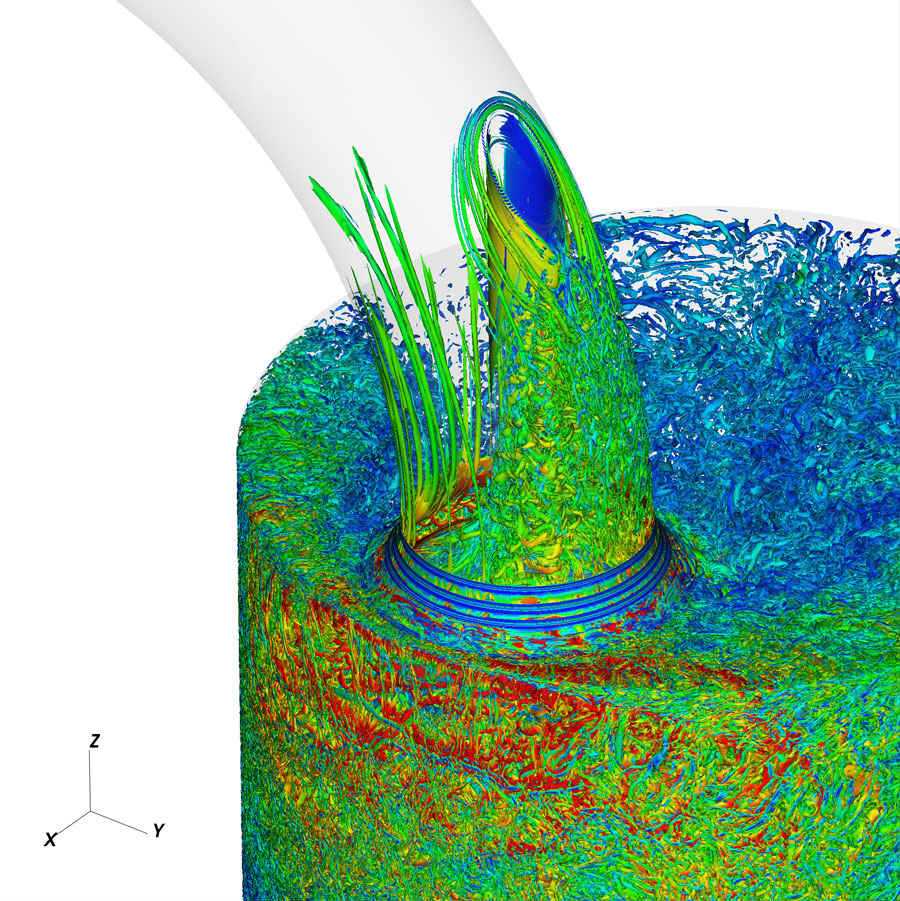

Simulation of turbulence inside an internal combustion engine, rendered using the advanced supercomputing resources at the Argonne Leadership Computing Facility, an Office of Science User Facility. The ability to create such complex simulations helps researchers solve some of the world’s largest, most complex problems. (Image by George Giannakopoulos.)

The term ‘co-design’ describes the integrated development and evolution of hardware technologies, computational applications and associated software. In pursuit of ECP’s mission to help people solve realistic application problems through exascale computing, each co-design center targets different features and challenges relating to exascale computing.

One of the new centers is the Co-Design Center for Online Data Analysis and Reduction at the Exascale (CODAR). Ian Foster and other researchers in CODAR are working to overcome the gap between computation speed and the limitations in the speed and capacity of storage by developing smarter, more selective ways of reducing data without losing important information.

“Exascale systems will be 50 times faster than existing systems, but it would be too expensive to build out storage that would be 50 times faster as well,” said Foster. “This means we no longer have the option to write out more data and store all of it. And if we can’t change that, then something else needs to change.”

There are many powerful techniques for doing data reduction, and CODAR researchers are studying various approaches.

One such approach, lossy compression, is a method whereby unnecessary or redundant information is removed to reduce overall data size. This technique is what’s used to transform the detail-rich images captured on our phone camera sensors into JPEG files, which are small in size. While data is lost in the process, the most important information ― the amount needed for our eyes to interpret the images clearly ― is maintained, and as a result, we can store hundreds more photos on our devices.

“The same thing happens when data compression is used as a technique for scientific data reduction. The important difference here is that scientific users need to precisely control and check the accuracy of the compressed data with respect to their specific needs,” said Argonne computer scientist Franck Cappello, who is leading the data reduction team for CODAR.
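
To make the idea of controlling and checking accuracy concrete, here is a minimal Python sketch of error-bounded lossy compression. It is an illustration only, not CODAR's software or method: the function names (`compress`, `decompress`, `max_abs_error`), the uniform quantization scheme, the test signal and the chosen bound are all assumptions made for this example. Each value is rounded to the nearest multiple of twice the error bound, so the restored value should differ from the original by no more than the bound (up to floating-point rounding), and the resulting integer codes are then packed with Python's standard `zlib` module.

```python
import math
import struct
import zlib


def compress(values, abs_error):
    """Quantize values to multiples of 2 * abs_error, then deflate the integer codes.

    Hypothetical helper for illustration; not part of any CODAR tool.
    """
    step = 2.0 * abs_error
    codes = [round(v / step) for v in values]          # the lossy step
    raw = struct.pack(f"<{len(codes)}q", *codes)       # 64-bit signed integers
    return zlib.compress(raw)                          # the lossless step


def decompress(blob, abs_error):
    """Invert the lossless step and map integer codes back to approximate values."""
    step = 2.0 * abs_error
    raw = zlib.decompress(blob)
    codes = struct.unpack(f"<{len(raw) // 8}q", raw)
    return [c * step for c in codes]


def max_abs_error(original, restored):
    """Largest pointwise difference, used to check the accuracy of the result."""
    return max(abs(a - b) for a, b in zip(original, restored))


# Assumed test data: a smooth synthetic signal standing in for simulation output.
signal = [math.sin(0.01 * i) for i in range(10_000)]
bound = 1e-3                                           # user-chosen accuracy target

blob = compress(signal, bound)
restored = decompress(blob, bound)
original_size = 8 * len(signal)                        # bytes if stored as raw doubles

print("compressed bytes:", len(blob), "of", original_size)
print("max abs error:", max_abs_error(signal, restored), "bound:", bound)
```

The point of the sketch is the last line: the user sets the bound up front and then verifies it on the restored data, which is the kind of control that JPEG-style compression of photos does not offer.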

Other data reduction techniques include the use of summary statistics and feature extraction.

Read the Full Story.
