Sponsored Post

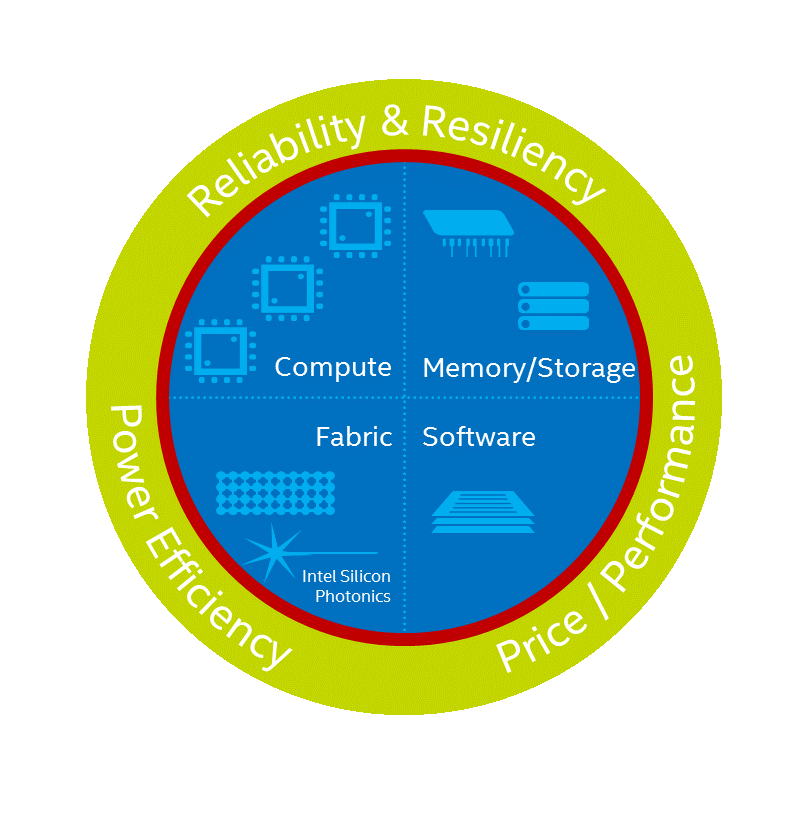

At SC15, Intel talked about some transformational high-performance computing (HPC) technologies and the accompanying architecture, Intel® Scalable System Framework (Intel® SSF). Intel describes Intel SSF as “an advanced architectural approach for simplifying the procurement, deployment, and management of HPC systems, while broadening the accessibility of HPC to more industries and workloads.” Intel SSF is designed to eliminate the traditional bottlenecks: the so-called power, memory, storage, and I/O walls that system builders and operators have run into over the years. Leading system integrators and OEMs have already announced systems based on Intel SSF, including Colfax, Cray, Dell, Fujitsu Systems Europe, HPE, Inspur, Lenovo, Penguin Computing, SGI, Sugon, and Supermicro. Aurora, at the Argonne Leadership Computing Facility at Argonne National Lab, will be built on Intel SSF.

Solving Today’s HPC Challenges

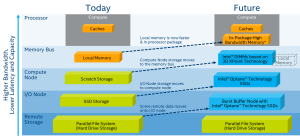

Intel SSF addresses the challenges of HPC with innovative technologies such as Intel® Omni-Path Architecture (Intel® OPA), an end-to-end, next-generation fabric solution that delivers significant improvements compared to InfiniBand EDR* technology. Beyond introducing new technologies, Intel is tightly integrating them, both into its silicon and at the system level, to create a highly efficient and capable infrastructure. Integration is key to this approach because it brings everything (memory, interconnect, and storage) closer to the processing cores. Several key components of Intel SSF exist today, such as Intel OPA, Intel® Xeon Phi™ processors, Intel® Xeon® processors, and Intel® Enterprise Edition for Lustre* software, but some innovative technologies are still in development and slated for launch next year.

It’s no wonder that Intel is leading the charge of integration across the architecture. The company is uniquely poised with its wide portfolio of IP and its silicon capabilities.

One of the really interesting aspects of this silicon and system integration is how it extends across the node, memory, and storage hierarchy. The result essentially raises the center of gravity of the memory pyramid and makes it fatter, which will enable faster and more efficient data movement.

Lustre—Intel SSF Storage Core

Intel® Solutions for Lustre software are core to Intel SSF as a major part of this memory-storage hierarchy. The file system, once considered only for national laboratories and academia, and used traditionally as an extremely fast scratch file system, is now being deployed as persistent storage in enterprise HPC installations around the world. The most recent release of Lustre adds important features enterprises demand for their mission-critical workloads.

Intel has a significant investment in Lustre. Company developers continue to add enhancements to the open source code base, which is managed by OpenSFS (in the U.S.) and EOFS (in Europe). In addition to code contributions, five additional Intel® Parallel Computing Centers (Intel® PCC) recently opened that will specialize in improving the performance and ease of use of Intel Solutions for Lustre* software. These centers include:

- Johannes Gutenberg University, focusing on Lustre QoS (Quality of Service)

- Lawrence Berkeley National Laboratory, focusing on optimized Big Data Analytics

- University of Hamburg, focusing on enhanced adaptive compression in Lustre

- University of California Santa Cruz, focusing on automatic tuning and contention management for Lustre

- GSI Helmholtz Centre for Heavy Ion Research, focusing on a unifying Lustre benchmark suite

Enabling HPC Opportunities through a Software Community

While Intel is creating breakthrough hardware for HPC, it is also helping enable the software community to take advantage of HPC more easily. Intel has joined with more than 30 other companies to found the OpenHPC Collaborative Project (www.openhpc.community), a new community-led organization focused on developing a comprehensive and cohesive open source HPC software stack. It’s a huge challenge to integrate the individual components and validate an aggregate HPC software stack when developing these systems. And, it is a major logistical effort to maintain all the codes, stay abreast of rapid release cycles, and address user requests for compatibility with additional software packages. With many users and system makers creating and maintaining their own stacks, there is duplicated work and inefficiency across the ecosystem. OpenHPC intends to help minimize these issues and improve the efficiency across the ecosystem.

“Many sites are left to their own devices when assembling a software stack on a high-end HPC system. Since most of the components they use are open source driven, it makes sense to put together an open source community around this,” says Karl Schulz, Principal Engineer for the Intel Enterprise and High Performance Computing Group. “OpenHPC is a place where system administrators, ISVs, OEMs and end users, including national labs and academia, can come together to solve shared problems.”

An Exascale Foundation

While there’s still more to be done in supercomputing to reach 1 exaflops (or even 180 petaflops—the goal for the Aurora system), Intel’s approach to integration, new fabric, and storage under the umbrella of Intel SSF forms the foundation for an Exascale computing architecture.

These initiatives, driven mainly (and nicely) by Intel and its partners, are all really admirable and, although we have talked about them for years, truly realizable for the first time, because almost all the necessary hardware and software components are on the table today.

However, and it’s no surprise, one VERY important aspect seems to be neglected here, as it so often is: the thousands and thousands of complex application software packages that are neither optimized for such a great infrastructure nor easily accessible and usable by a wider community of users. Such accessibility, however, is a prerequisite for making HPC mainstream.

Application software is THE point of contact of any user with HPC. It’s not the network, nor the hardware, nor the middleware; it is the user’s application software. And here an intuitive user experience on any computing infrastructure is an absolute must. In my humble opinion, the (currently) best way to achieve this is to package each application into a black-box CONTAINER that is fully portable, easy to access and use (on demand, at your fingertips), and even easier to support and maintain.

It has to be ONE standard base container, suitable for all HPC applications, running everywhere, and applicable to every application, be it commercial, free, open source, or developed in-house; a container that holds the user’s application workflow, the data, and the tools, all in one, re-usable at any time, and working in harmony with the underlying Scalable System Framework.

Only then will we see millions of novice HPC users joining our community, from areas such as 3D printing, big data, and IoT, and finally all those engineers and scientists in ‘our’ (IDC) classical vertical markets who have not touched HPC so far.