This is the third article in a series taken from The insideHPC Guide to The Industrialization of Deep Learning.

The recent introduction of new high-end processors from Intel, combined with accelerator technologies such as NVIDIA Tesla GPUs and Intel Xeon Phi, provides the raw ‘industry standard’ materials to cobble together a test platform suitable for small research and development projects. Combined with open source toolkits, such a platform can achieve meaningful results, but wide-scale enterprise deployment in production environments raises the infrastructure, software and support requirements to a completely different level.

Consider a deep learning focused rack mount system designed for production use, such as the HPE Apollo 6500: its density and performance are extremely impressive. However, such capability brings other considerations to the forefront from an infrastructure perspective. With one or two Intel CPUs, memory, storage, I/O and up to eight state-of-the-art NVIDIA Tesla GPUs all packed into a 4U rack mount chassis, such a system will have a power envelope of 3 kW or more.

While it is entirely possible to physically mount several such systems in a single standard rack, supplying sufficient power and cooling, together with remote management capabilities, requires a full range of data center level capabilities. These are delivered by HPE’s Apollo 6000 family, which is fully proven and deployed in demanding enterprise environments such as HPC and technical computing, and is backed by a global advisory and support capability.
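To put the power figure above in perspective, here is a rough back-of-the-envelope sketch. It takes the 4U chassis and the 3 kW lower bound from the text, but assumes a standard 42U rack with every rack unit given to servers (no space reserved for switches, PDUs or cable management). Those rack assumptions are illustrative, not figures from the guide.

```python
# Rough lower-bound power estimate for a rack of dense 4U GPU servers.
# Assumption (not from the guide): a 42U rack filled entirely with servers.
RACK_UNITS = 42          # assumed full-height standard rack
CHASSIS_UNITS = 4        # 4U chassis, as described in the text
WATTS_PER_SYSTEM = 3000  # "3 kW or more" per system, as described in the text


def rack_power_kw(rack_units: int = RACK_UNITS,
                  chassis_units: int = CHASSIS_UNITS,
                  watts_per_system: int = WATTS_PER_SYSTEM) -> float:
    """Return the lower-bound power draw, in kW, of a fully populated rack."""
    systems = rack_units // chassis_units  # only whole chassis physically fit
    return systems * watts_per_system / 1000


print(rack_power_kw())  # 10 systems x 3 kW each
```

Under these assumptions, ten systems fit and the rack draws at least 30 kW, which illustrates why power delivery and cooling, rather than physical space, become the limiting factors.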

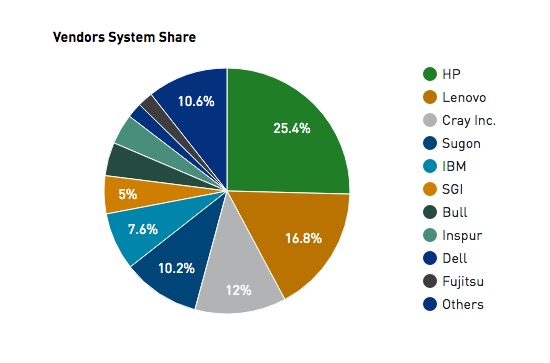

Looking at the HPC market, the June 2016 TOP500 list by vendor shows HPE as the clear leader with 25% of the installed systems, 85% of which are in the industry segment as opposed to academia, research or government. These statistics alone go a long way towards underscoring HPE’s credibility to deliver and support such systems for production deployment in global enterprises.

With robust, industrial-grade deep learning systems, organizations will be able to make dramatic improvements in productivity and production efficiency, combined with significantly lower operational costs and faster time to results.

Over the coming weeks, this series will include articles that explore:

- Deep Learning and Getting There

- Technologies for Deep Learning

- Components for Deep Learning (this article)

- Software Framework for Deep Learning

- Examples of Deep Learning

- HPE Solutions for Deep Learning / HPE Cognitive Computing Toolkit

If you prefer, you can download the complete insideHPC Guide to The Industrialization of Deep Learning, courtesy of Hewlett Packard Enterprise.