In this video from the GPU Technology Conference, Marc Hamilton from NVIDIA describes the new DGX-2 supercomputer with the NVSwitch interconnect.

In this video from the GPU Technology Conference, Marc Hamilton from NVIDIA describes the new DGX-2 supercomputer with the NVSwitch interconnect.

“The rapid growth in deep learning workloads has driven the need for a faster and more scalable interconnect, as PCIe bandwidth increasingly becomes the bottleneck at the multi-GPU system level. NVLink is a great advance to enable eight GPUs in a single server, and accelerate performance beyond PCIe. But taking deep learning performance to the next level will require a GPU fabric that enables more GPUs in a single server, and full-bandwidth connectivity between them.

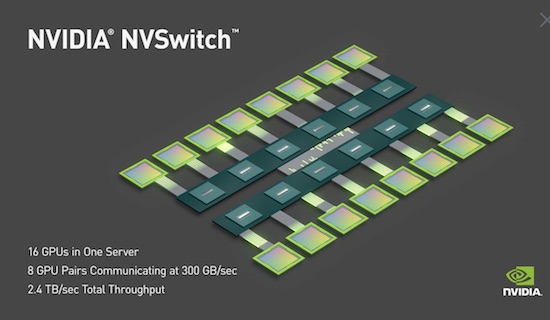

NVIDIA NVSwitch is the first on-node switch architecture to support 16 fully-connected GPUs in a single server node and drive simultaneous communication between all eight GPU pairs at an incredible 300 GB/s each. These 16 GPUs can be used as a single large-scale accelerator with 0.5 Terabytes of unified memory space and 2 petaFLOPS of deep learning compute power.”

Transcript:

insideHPC: Hi, I’m Rich with insideHPC. We’re here at the GPU Technology Conference in Silicon Valley. And I’m here with Marc Hamilton from NVIDIA. So Marc, thanks for coming today. It’s been a very exciting week, but I’m glad you had the time to share and kind of give us some more details on the exciting announcements. How is GTC from your perspective?

Marc Hamilton: GTC has been amazing as usual. Boy, Jensen’s keynote of I think two and a half hours didn’t stop for a second. I think I got about 12 hours sleep the last 4 days. Not per day, over all four days. But it’s a great event.

insideHPC: Well, good, good. What’s exciting for you in terms of our space, the HPC world?

Marc Hamilton: Yeah, I think from the HPC perspective, the real gem of the show it was definitely DGX-2. There’s so much news, I think, that maybe it didn’t sink into everyone. But at the heart of DGX-2 is this new NVSwitch. And I think for HPC people, this is just some amazing technology. Nothing else like it in the world.

insideHPC: Well, for those that might not have seen it, so DGX-2, it’s the follow-on to the DGX-1, which was like a small supercomputer, very tightly packed GPUs. You mentioned the switch, but what does DGX-2 by itself add to the picture?

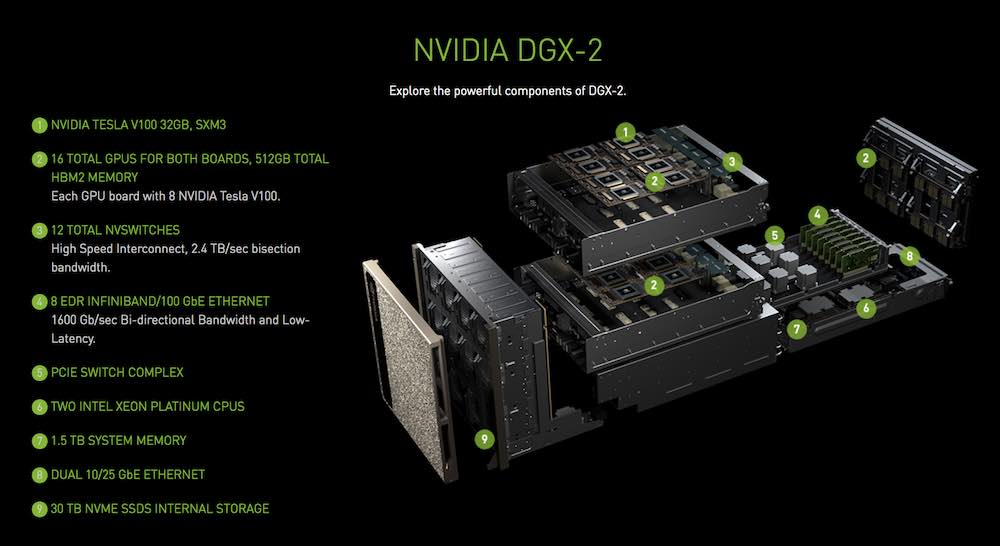

Marc Hamilton: Yeah, so it’s easy to think of maybe building blocks and think, “Well, DGX-2 is twice a DGX-1. It has 16 Volta GPUs instead of eight GPUs,” but it’s really so much more than that. So starting with the basics, so first of all, DGX-2 is our first server. It’s part of the DGX family, but the first server to usher in our new SXM3 form factor. So a new form factor for the GPU. In size, it’s sort of very similar. That 3×5″ sort of high-density form factor that we introduced with SXM2 back in the Pascal days, but now SXM3 will take us through Volta and beyond. Next, of course, it’s built with our new 32 gig HBM 2 memory parts. So each of those 16 GPUs has 32 gig of HBM2 memory. So that’s in one box, you have half a terabyte of HBM2 memory. So some amazing memory there. And so that’s just some of the details.

And then, of course, what’s most amazing is NVSwitch. Now, when we think of switching, I always think first of an Ethernet switch or a network switch, so it’s important to think NVSwitch is an internal, inside the box switch. And it’s not really– don’t think of it as a networking switch, think of it as a memory switch connecting those 16 GPUs. And what’s amazing about it is the bisection bandwidth, which HPC people will know as a familiar term. What’s the bandwidth between one half of the box to the other? And so if you took two DGX-1’s, you had four InfiniBand interfaces between them, and the bisection bandwidth then is 400 gigabytes a second with the 480R InfiniBands. With NVSwitch, we have 2.4 terabytes a second bisection bandwidth, 24 times what you would have with two DGX-1s.

insideHPC: So Marc, how would that compare to a big supercomputer like Titan at Oak Ridge, for example?

Marc Hamilton: If you look at the Titan specs, it gives you a little bit more than 10 terabytes a second across the whole supercomputer. All right? Which was about a $100 million a system. And so now in one 10 RU supercomputer, you’re getting one-quarter of that, 2.4 terabytes a second.

insideHPC: And that machine has something like 17,000 GPUs to share that bandwidth.

Marc Hamilton: 18,000, I think [laughter].

insideHPC: Wow. Wow. Okay. In HPC, that’s a big deal. What does the memory do for applications, this doubling? I mean, is this one big memory space? Is that the way it looks?

Marc Hamilton: Absolutely. And I think that Jensen talked about it as one big GPU. And again with NVSwitch being a memory interconnect, you can perform– it’s not only used for moving data back and forth between GPUs but you can actually perform atomic memory operations over NVLink. So you can read and write another GPU’s memory over NVLink at that full bandwidth without having to move the data.

insideHPC: Just for comparison Marc, the original NVLink was kind of a finite, what was it, eight nodes or something that it could scale to. Adding these switches, how big are we talking in terms of– how big does the fabric get?

Marc Hamilton: So with DGX-1, we talked about the hybrid mesh-cube topology. So remember initially NVLink was a point-to-point interconnect. That’s all we had on DGX-1 initial servers. So each Volta V100 has six individual NVLinks exposed, and each of those links is 50 gigabytes a second of bidirectional bandwidth. And so if you think about it, what we do with DGX-2– let’s sort of do a visual with my hands here. You divide up the box into two right, right, and you put 8 GPUs on one side, 8 GPUs on another side. You then line up– next to the GPUs, you line up 6 switches because out of each GPU you have 6 individual NVLinks, one of them goes to each switch. So that gives you a total of 8 times 6 or 48 links going into the switch. The other half of the switch, then you take those 6 NVSwitches, you connect them to another six NVSwitches, and again at full bandwidth. So you have 48 links connecting the two complexes of switches, and then you just repeat. Then that fans out to another 8 Voltas with 6 connections each. So each Volta has 300 gigabytes of second of bandwidth to any other Volta simultaneously all at the same time or 2.4 terabytes a second.

Marc Hamilton: So with DGX-1, we talked about the hybrid mesh-cube topology. So remember initially NVLink was a point-to-point interconnect. That’s all we had on DGX-1 initial servers. So each Volta V100 has six individual NVLinks exposed, and each of those links is 50 gigabytes a second of bidirectional bandwidth. And so if you think about it, what we do with DGX-2– let’s sort of do a visual with my hands here. You divide up the box into two right, right, and you put 8 GPUs on one side, 8 GPUs on another side. You then line up– next to the GPUs, you line up 6 switches because out of each GPU you have 6 individual NVLinks, one of them goes to each switch. So that gives you a total of 8 times 6 or 48 links going into the switch. The other half of the switch, then you take those 6 NVSwitches, you connect them to another six NVSwitches, and again at full bandwidth. So you have 48 links connecting the two complexes of switches, and then you just repeat. Then that fans out to another 8 Voltas with 6 connections each. So each Volta has 300 gigabytes of second of bandwidth to any other Volta simultaneously all at the same time or 2.4 terabytes a second.

insideHPC: So to use the old Slashdot joke, can you build a Beowulf cluster our of these [laughter]?

Marc Hamilton: We think that no one’s going to want to buy just one, right? I mean, again, our customers use hundreds, thousands of GPUs or more in their systems. If you look at our Saturn V Supercomputer add-in video which we use for our internal training, that has 5,280 GPUs, so that would be 330 DGX-2s if we were to upgrade it all to DGX-2. So we have eight EDR InfiniBand interfaces from Mellanox. So Mellanox really loves this box, right? And so with those eight interconnects we’re not only supporting InfiniBand, but now we’re supporting a 100 gig RoCE running in InfiniBand mode. That’s, of course, the RDMA over Converged Ethernet.

insideHPC: Exciting stuff. So Marc, specs are nice and everything, but do you have any real performance numbers on any kind of benchmarks?

Marc Hamilton: Absolutely. So first of all, you need to think about what sort of things is NVSwitch really good for. It’s good for the traditional strong-scaling problems, right? And what is the attribute of a strong-scaling problem is when you have a lot of all-to-all communications between it. Because every GPU is now fully connected with the full speed of all six individual NVLinks, it’s perfect for all-to-all. So a couple things, one of the benchmarks we talked about was (FAIR) seek. (FAIR) seek is a Facebook natural language processing algorithm. Very, very complex. It takes days to train the network on it on a DGX-1. Not going back even to the original DGX-1 with Pascal, we took a DGX-1 with Volta V100, and we trained on there, and then we trained on DGX-2. We were able to train 10 times faster. So that’s in less than a year a 10x performance.

Also, if you look at– the world of deep neural networks is just exploding. 6,000 research papers written last year in just the top 5 or 6 conferences. And you’ve got more and more complicated deep neural networks. So a great example is Google’s has– I have to check even my notes here, but Google has a deep neural network called Transformer Mixture Of Experts. And this is really a collection of many deep neural networks all sort of controlled by another deep neural network at the top. So think about it, very, very complex set of deep neural networks that all has to train at the same time. So that’s an example of the type of deep neural network that scales very well on this. And then for HPC customers, codes like 3D FFTs, things where you really need to have all that– you need to have all of your data for a large FFT in memory and be able to communicate those speeds. This effect for a 3D FFT looks like one huge GPU.