GTC Digital begins this week as a virtual event with free registration. While the annual GPU Technology Conference typically packs hundreds of hours of talks, presentations and conversations into a five-day event in San Jose, NVIDIA’s goal with GTC Digital is to bring some of the best aspects of this event to a global audience, and make it accessible for months. “Apart from the instructor-led, hands-on workshops and training sessions, which require a nominal fee, we’re delighted to bring this content to the global community at no cost. And we’ve incorporated new platforms to facilitate interaction and engagement.”

NVIDIA Cancels GTC 2020 Live Event due to Coronavirus

Due to the coronavirus, today NVIDIA announced that GTC 2020 is turning into a digital conference rather than a live event. “As the coronavirus situation is not improving, we’re turning GTC San Jose into a digital conference rather than a live event. Jensen will still give a keynote. We will still share our announcements. And we’ll work to ensure our speakers can share their talks. But we’ll do this all online. We will provide updates here soon about when you can tune in, and will be in touch with those who purchased a conference pass about a full refund.”

NVIDIA to host Full-Day HPC Summit at GPU Technology Conference

NVIDIA will host a full-day HPC Summit this year at the GPU Technology Conference 2020. With a full day of plenary sessions and a developer track on high performance computing, the HPC Summit takes place Thursday, March 26 in San Jose, California. “The HPC Summit at GTC brings together HPC leaders, IT professionals, researchers, and developers to advance the state of the art of HPC. Explore content from different HPC communities, engage with experts, and learn about new trends and innovations.”

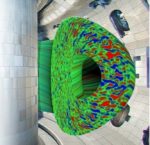

Advancing Fusion Science with CGYRO using GPU-based Leadership Systems

Jeff Candy and Igor Sfiligoi from General Atomics gave this talk at the GPU Technology Conference. “Gyrokinetic simulations are one of the most useful tools for understanding fusion science. We’ll explain how we designed and implemented CGYRO to make good use of the tens of thousands of GPUs on such systems, which provide simulations that bring us closer to fusion as an abundant clean energy source. We’ll also share benchmarking results of both CPU- and GPU-based systems.”

Video: Multi-GPU FFT Performance on Different Hardware Configurations

Kevin Roe from the Maui High Performance Computing Center gave this talk at the GPU Technology Conference. “We will characterize the performance of multi-GPU systems in an effort to determine their viability for running physics-based applications using Fast Fourier Transforms (FFTs). Additionally, we’ll discuss how multi-GPU FFTs allow available memory to exceed the limits of a single GPU and how they can reduce computational time for larger problem sizes.”
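
To make the memory argument concrete, here is a rough back-of-the-envelope sketch, not taken from the talk. It assumes a cubic 3D grid of double-precision complex values (16 bytes each), a hypothetical 16 GiB of memory per GPU, and it ignores FFT workspace and communication buffers, so real requirements would be higher.

```python
BYTES_PER_COMPLEX128 = 16  # one double-precision complex value


def fft_grid_bytes(n: int) -> int:
    """Bytes needed to store one n x n x n complex128 grid (data only)."""
    return n ** 3 * BYTES_PER_COMPLEX128


def min_gpus(n: int, gpu_mem_bytes: int) -> int:
    """Smallest GPU count whose combined memory can hold the grid."""
    # Integer ceiling division avoids floating-point rounding.
    return -(-fft_grid_bytes(n) // gpu_mem_bytes)


GPU_MEM = 16 * 2**30  # assumption: 16 GiB per GPU

for n in (512, 1024, 2048):
    gib = fft_grid_bytes(n) / 2**30
    print(f"n={n}: {gib:.0f} GiB of data, at least {min_gpus(n, GPU_MEM)} GPU(s)")
```

Under these assumptions, a 2048-cubed grid already needs the combined memory of several GPUs just to store the input, which is the regime where a multi-GPU FFT becomes necessary rather than optional.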

Video: The Kokkos C++ Performance Portability EcoSystem for Exascale

Christian Trott from Sandia gave this talk at the GPU Technology Conference. “The Kokkos C++ Performance Portability EcoSystem is a production-level solution for writing modern C++ applications in a hardware-agnostic way. We’ll provide success stories for Kokkos adoption in large production applications on the leading supercomputing platforms in the U.S. We’ll focus particularly on early results from two of the world’s most powerful supercomputers, Summit and Sierra, both powered by NVIDIA Tesla V100 GPUs.”

Video: Exascale Deep Learning for Climate Analytics

Thorsten Kurth and Josh Romero gave this talk at the GPU Technology Conference. “We’ll discuss how we scaled the training of a single deep learning model to 27,360 V100 GPUs (4,560 nodes) on the OLCF Summit HPC System using the high-productivity TensorFlow framework. This talk is targeted at deep learning practitioners who are interested in learning what optimizations are necessary for training their models efficiently at massive scale.”
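
The two figures in the abstract are consistent with Summit's node design of six V100 GPUs per node. A quick check, with variable names chosen only for illustration:

```python
GPUS_PER_NODE = 6   # each Summit node carries six V100 GPUs
NODES_USED = 4_560  # node count quoted in the abstract

total_gpus = NODES_USED * GPUS_PER_NODE
print(total_gpus)
```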

Video: Can FPGAs compete with GPUs?

John Romein from ASTRON gave this talk at the GPU Technology Conference. “We’ll discuss how FPGAs are changing as a result of new technology such as the OpenCL high-level programming language, hard floating-point units, and tight integration with CPU cores. Traditionally, energy-efficient FPGAs were considered notoriously difficult to program and unsuitable for complex HPC applications. We’ll compare the latest FPGAs to GPUs, examining the architecture, programming models, programming effort, performance, and energy efficiency by considering some real applications.”

Video: How to Scale from Workstation through Cloud to HPC in Cryo-EM Processing

Lance Wilson from Monash University gave this talk at the GPU Technology Conference. “We’ll review the last two years of development in single-particle cryo-electron microscopy processing, with a focus on accelerated software, and discuss benchmarks and best practices for common software packages in this domain. Our talk will include videos and images of atomic resolution molecules and viruses that demonstrate our success in high-resolution imaging.”

Get Your Head in the Cloud: Lessons in GPU Computing with Schlumberger

Wyatt Gorman from Google and Abhishek Gupta from Schlumberger gave this talk at the GPU Technology Conference. “Demand for GPUs in High Performance Computing is only growing, and it is costly and difficult to keep pace in an entirely on-premise environment. We will hear from Schlumberger on why and how they are utilizing cloud-based GPU-enabled computing resources from Google Cloud to supply their users with the computing power they need, from exploration and modeling to visualization.”