This feature story describes how the computational power of Frontera will be a game changer for research. Late last year, the Texas Advanced Computing Center announced plans to deploy Frontera, the world’s fastest supercomputer in academia. To prepare for launch, TACC just published the inaugural edition of Texascale, an annual magazine with stories that highlight the people, science, systems, and programs that make TACC one of the leading academic computing centers in the world.

In an inconspicuous-looking data center on The University of Texas at Austin’s J. J. Pickle Research Campus, construction is underway on one of the world’s most powerful supercomputers.

The Frontera system (Spanish for “frontier”) will allow the nation’s academic scientists and engineers to probe questions both cosmic and commonplace — What is the universe composed of? How can we produce enough food to feed the Earth’s growing population? — that cannot be addressed in a lab or in the field; that require the number-crunching power equivalent to a small city’s worth of computers to solve; and that may be critical to the survival of our species.

The name Frontera pays homage to the “endless frontier” of science envisioned by Vannevar Bush and presented in a report to President Harry Truman calling for a national strategy for scientific progress. The report led to the founding of the National Science Foundation (NSF) — the federal agency that funds fundamental research and education in science and engineering. It paved the way for investments in basic and applied research that laid the groundwork for our modern world, and inspired the vision for Frontera.

“Whenever a new technological instrument emerges that can solve previously intractable problems, it has the potential to transform science and society,” said Dan Stanzione, executive director of TACC and one of the designers behind the new machine. “We believe that Frontera will have that kind of impact.”

The Quest for Computing Greatness

The pursuit of Frontera formally began in May 2017 when NSF issued an invitation for proposals for a new leadership-class computing facility, the top tier of high performance computing systems funded by the agency. The program would award $60 million to construct a supercomputer that could satisfy the needs of a scientific and engineering community that increasingly relies on computation.

“For over three decades, NSF has been a leader in providing the computing resources our nation’s researchers need to accelerate innovation,” explained NSF Director France Córdova. “Keeping the U.S. at the forefront of advanced computing capabilities and providing researchers across the country access to those resources are key elements in maintaining our status as a global leader in research and education.”

“The Frontera project is not just about the system; our proposal is anchored by an experienced team of partners and vendors with a community-leading track record of performance.” (Dan Stanzione, TACC)

Meet the Architects

When TACC proposed Frontera, it didn’t simply offer to build a fastest-in-its-class supercomputer. It put together an exceptional team of supercomputer experts and power users who together have internationally recognized expertise in designing, deploying, configuring, and operating HPC systems at the largest scale. Learn more about principal investigators who led the charge.

NSF’s invitation for proposals indicated that the initial system would only be the beginning. In addition to enabling cutting-edge computations, the supercomputer would serve as a platform for designing a future leadership-class facility to be deployed five years later that would be 10 times faster still — more powerful than anything that exists in the world today.

TACC has deployed major supercomputers several times in the past with support from NSF. Since 2006, TACC has operated three supercomputers that debuted among the top 15 most powerful in the world: Ranger (2008-2013; #4), Stampede1 (2012-2017; #7), and Stampede2 (2017-present; #12). Three more systems rose to the top 25. These systems established TACC, which was founded in 2001, as one of the world leaders in advanced computing.

TACC solidified its reputation when, on August 28, 2018, NSF announced that the center had won the competition to design, build, deploy, and run the most capable system the agency had ever commissioned.

“This award is an investment in the entire U.S. research ecosystem that will enable leap-ahead discoveries,” NSF Director Córdova said at the time.

Frontera represents a further step for TACC into the upper echelons of supercomputing — the Formula One race cars of the scientific computing world. When Frontera launches in 2019, it will be the fastest supercomputer at any U.S. university and one of the fastest in the world — a powerful, all-purpose tool for science and engineering.

“Many of the frontiers of research today can be advanced only by computing,” Stanzione said. “Frontera will be an important tool to solve Grand Challenges that will improve our nation’s health, well-being, competitiveness, and security.”

Supercomputers Expand the Mission

Supercomputers have historically had very specific uses in the world of research, performing virtual experiments and analyses of problems that can’t easily be studied through physical experiments or solved with smaller computers.

Since 1945, when the ENIAC (Electronic Numerical Integrator and Computer) at the University of Pennsylvania first calculated artillery firing tables for the United States Army’s Ballistic Research Laboratory, the uses of large-scale computing have grown dramatically.

Today, every discipline has problems that require advanced computing. Whether it’s cellular modeling in biology, the design of new catalysts in chemistry, black hole simulations in astrophysics, or Internet-scale text analyses in the social sciences, the details change, but the need remains the same.

“Computation is arguably the most critical tool we possess to reach more deeply into the endless frontier of science,” Stanzione says. “While specific subfields of science need equipment like radio telescopes, MRI machines, and electron microscopes, large computers span multiple fields. Computing is the universal instrument.”

In the past decade, the uses of high performance computing have expanded further. Massive amounts of data from sensors, wireless devices, and the Internet opened up an era of big data, for which supercomputers are well suited. More recently, machine and deep learning have provided a new way of not just analyzing massive datasets, but of using them to derive new hypotheses and make predictions about the future.

As the problems that can be solved by supercomputers expanded, NSF’s vision for cyberinfrastructure — the catch-all term for the set of information technologies and people needed to provide advanced computing to the nation — evolved as well. Frontera represents the latest iteration of that vision.

Data-Driven Design

TACC’s leadership knew they had to design something innovative from the ground up to win the competition for Frontera. Taking a data-driven approach to the planning process, they investigated the usage patterns of researchers on Stampede1, as well as on Blue Waters — the previous NSF-funded leadership-class system — and in the Department of Energy (DOE)’s large-scale scientific computing program, INCITE, and analyzed the types of problems that scientists need supercomputers to solve.

They found that Stampede1 usage was dominated by 15 commonly used applications. Together these accounted for 63 percent of Stampede1’s computing hours in 2016. Some 2,285 additional applications utilized the remaining 37 percent of the compute cycles. (These trends were consistent on Blue Waters and DOE systems as well.) Digging deeper, they determined that, of the top 15 applications, 97 percent of the usage solved equations that describe motions of bodies in the universe, the interactions of atoms and molecules, or electrons and fluids in motion.
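The kind of usage breakdown described above can be illustrated with a short sketch. This is not TACC’s actual analysis: the application names and hour counts are invented placeholders, and `top_n_share` is a hypothetical helper that simply reports what fraction of total compute hours the busiest applications consume.

```python
from collections import Counter


def top_n_share(hours_by_app, n):
    """Return the fraction of total compute hours used by the n busiest applications."""
    total = sum(hours_by_app.values())
    if total == 0:
        return 0.0
    # most_common sorts applications by hours, largest first
    top = Counter(hours_by_app).most_common(n)
    return sum(hours for _, hours in top) / total


# Placeholder accounting data (hypothetical, not real Stampede1 figures).
usage = {"app_a": 500.0, "app_b": 300.0, "app_c": 150.0, "app_d": 50.0}
share = top_n_share(usage, 2)
print(f"Top 2 applications used {share:.0%} of hours")
```

Run against real accounting records with `n=15`, the same calculation would yield a figure comparable to the 63 percent cited for Stampede1.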

Frontera will be the fastest supercomputer at a U.S. university and likely Top 5 in the world when it launches in 2019. It will support simulation, data analysis and AI on the largest scales.

“We did a careful analysis to understand the questions our community was using our supercomputers to solve and the codes and equations they used to solve them,” said TACC’s director of High Performance Computing, Bill Barth. “This narrowed the pool of problems that Frontera would need to excel in solving.”

But past use wasn’t the only factor they considered. “It was also important to consider emerging uses of advanced computing resources for which Frontera will be critical,” Stanzione said. “Prominent among these are data-driven and data-intensive applications, as well as machine and deep learning.”

Though still a small share of overall use on Stampede2 and other current systems, these areas are growing quickly and offer new ways to solve enduring problems.

Whereas researchers traditionally wrote HPC codes in programming languages like C++ and Fortran, data-intensive problems often require non-traditional software or frameworks, such as R, Python, or TensorFlow.

“The coming decade will see significant efforts to integrate physics-driven and data-driven approaches to learning,” said Tommy Minyard, TACC director of Advanced Computing Systems. “We designed Frontera with the capability to address very large problems in these emerging communities of computation and serve a wide range of both simulation-based and data-driven science.”

The Right Chips for the Right Jobs

Anyone following computer hardware trends in recent years has noticed the blossoming of options in terms of computer processors. Today’s landscape includes a range of chip architectures, from low-energy ARM processors common in cell phones, to adaptable FPGAs (field-programmable gate arrays), to many varieties of CPUs, GPUs, and AI-accelerating chips.

The team considered a wide range of system options for Frontera before concluding that a CPU-based primary system with powerful Intel Xeon x86 nodes and a fast network would be the most useful platform for most applications.

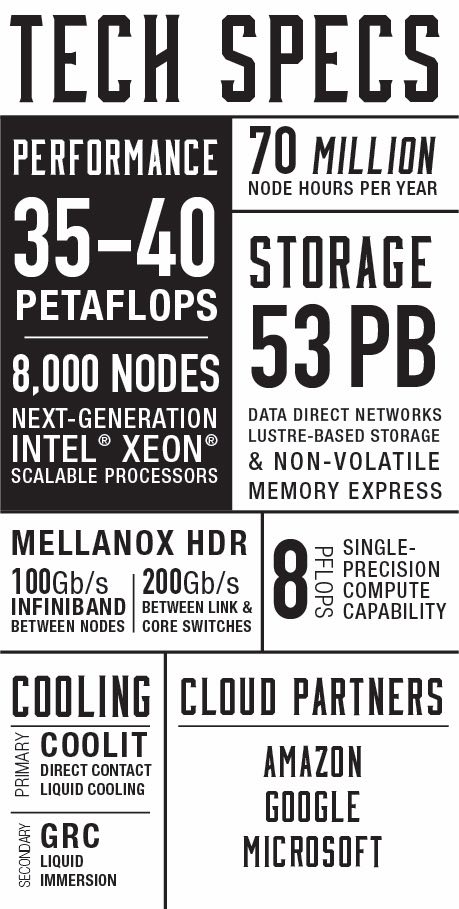

TACC expects that, once built, the main compute system will achieve 35 to 40 petaflops of peak performance. For comparison, Frontera will be twice as powerful as Stampede2 (currently the fastest university supercomputer) and 70 times as fast as Ranger, which operated at TACC until 2013.

To match what Frontera will compute in just one second, a person would have to perform one calculation every second for one billion years.
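The comparison above can be checked with a few lines of arithmetic. The sketch below assumes that one “calculation” corresponds to one floating-point operation and uses the projected peak range of 35 to 40 petaflops; `years_by_hand` is a hypothetical helper name.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # Julian year, about 3.16e7 seconds


def years_by_hand(flops_per_second):
    """Years needed to match one second of machine work at one calculation per second."""
    return flops_per_second / SECONDS_PER_YEAR


# Projected peak range quoted for the main compute system.
for petaflops in (35, 40):
    years = years_by_hand(petaflops * 1e15)
    print(f"{petaflops} petaflops -> about {years:.2e} years")
```

Both ends of the range come out slightly above one billion years, consistent with the figure quoted in the text.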

In addition to its main system, Frontera will also include a subsystem made up of graphics processing units (GPUs) that have proven particularly effective for deep learning and molecular dynamics problems.

“For certain application classes that can make effective use of GPUs, the subsystem will provide a cost-efficient path to high performance for those in the community that can fully exploit it,” Stanzione said.

Designing a Complete Ecosystem

The effectiveness of a supercomputer depends on more than just its processors. Storage, networking, power, and cooling are all critical as well.

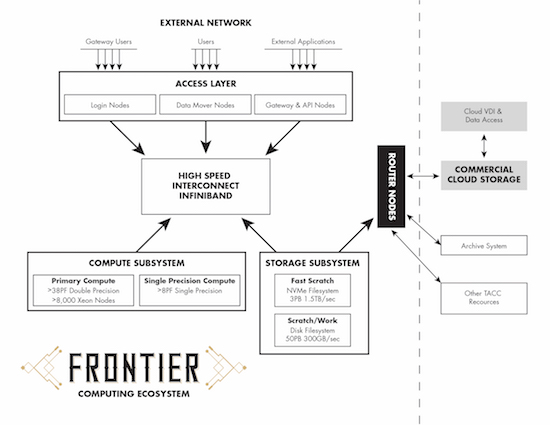

Frontera will include a storage subsystem from DataDirect Networks with almost 53 petabytes of capacity and nearly 2 terabytes per second of aggregate bandwidth. Of this, 50 petabytes will use disk-based, distributed storage, while 3 petabytes will employ a new type of very fast storage known as Non-Volatile Memory Express (NVMe) storage, broadening the system’s usefulness for the data science community.
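To put those storage figures in perspective, the following sketch estimates how long it would take to stream the entire capacity once at the quoted aggregate bandwidth. It is an idealized calculation that assumes decimal units (1 petabyte = 1,000 terabytes), treats the rounded figures of 53 petabytes and 2 terabytes per second as exact, and ignores real-world overheads; `full_scan_hours` is a hypothetical helper.

```python
def full_scan_hours(capacity_pb, bandwidth_tb_per_s):
    """Idealized hours to stream the full capacity once at the aggregate bandwidth."""
    capacity_tb = capacity_pb * 1000  # decimal units: 1 PB = 1,000 TB (assumption)
    return capacity_tb / bandwidth_tb_per_s / 3600


disk_pb, nvme_pb = 50, 3  # disk-based and NVMe tiers from the text
total_pb = disk_pb + nvme_pb
print(f"Total capacity: {total_pb} PB")
print(f"Idealized full scan: {full_scan_hours(total_pb, 2.0):.1f} hours")
```

Under these assumptions the whole file system could be read in well under a day, which gives a sense of the balance between capacity and bandwidth.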

Supercomputing applications often employ many compute nodes, or devices, at once, which requires passing data and instructions from one part of the system to another. Mellanox InfiniBand interconnects will provide 100 gigabits per second (Gbps) connectivity to each node, and 200 Gbps between the central switches.

These components will be integrated via servers from Dell EMC, which has partnered with TACC since 2003 on massive systems, including Stampede1 and Stampede2.

“The new Frontera system represents the next phase in the long-term relationship between TACC and Dell EMC, focused on applying the latest technical innovation to truly enable human potential,” said Thierry Pellegrino, vice president of Dell EMC High Performance Computing.

Though a top system in its own right, Frontera won’t operate as an island. Users will have access to TACC’s other supercomputers — Stampede2, Lonestar, Wrangler, and many more, each with a unique architecture — and storage resources, including Stockyard, TACC’s global file system; Corral, TACC’s data collection repository; and Ranch, a tape-based long-term archival system.

Together, they compose an ecosystem for scientific computing that is arguably unmatched in the world.

New Models of Access & Use

Researchers traditionally interact with supercomputers through the command line — a text-only program that takes instructions and passes them on to the computer’s operating system to run.

The bulk of a supercomputer’s time (roughly 90 percent of the cycles on Stampede2) is consumed by researchers using the system in this way. But as computing becomes more complex, having a lower barrier to entry and offering an end-to-end solution to access data, software, and computing services has grown in importance.

Science gateways offer streamlined, user-friendly interfaces to cyberinfrastructure services. In recent years, TACC has become a leader in building these accessible interfaces for science.

“Visual interfaces can remove much of the complexity of traditional HPC, and lower this entry barrier,” Stanzione said. “We’ve deployed more than 20 web-based gateways, including several of the most widely used in the world. On Frontera, we’ll allow any community to build their own portals, applications, and workflows, using the system as the engine for computations.”

Though they use a minority of computing cycles, a majority of researchers actually access supercomputers through portals and gateways. To serve this group, Frontera will support high-level languages like Python, R, and Julia, and offer a set of RESTful APIs (application programming interfaces) that will make the process of building community-wide tools easier.

“We’re committed to delivering the transformative power of computing to a wide variety of domains from science and engineering to the humanities,” said Maytal Dahan, TACC’s director of Advanced Computing Interfaces. “Expanding into disciplines unaccustomed to computing from the command line means providing access in a way that abstracts the complexity and technology and lets researchers focus on their scientific impact and discoveries.”

The Cloud

For some years, there has been a debate in the advanced computing community about whether supercomputers or “the cloud” are more useful for science. The TACC team believes it’s not about which is better, but how they might work together. By design, Frontera takes a bold step towards bridging this divide by partnering with the nation’s largest cloud providers — Microsoft, Amazon, and Google — to provide cloud services that complement TACC’s existing offerings and have unique advantages.

For some years, there has been a debate in the advanced computing community about whether supercomputers or “the cloud” are more useful for science. The TACC team believes it’s not about which is better, but how they might work together. By design, Frontera takes a bold step towards bridging this divide by partnering with the nation’s largest cloud providers — Microsoft, Amazon, and Google — to provide cloud services that complement TACC’s existing offerings and have unique advantages.

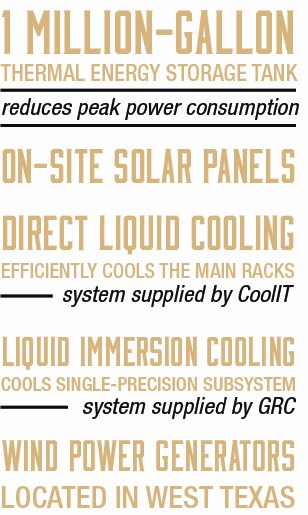

It’s no secret that supercomputers use a lot of power. Frontera will require more than 5.5 megawatts to operate — the equivalent of powering more than 3,500 homes. To limit the expense and environmental impact of running Frontera, TACC will employ a number of energy-saving measures with the new system. Some were put in place years ago; others will be deployed at TACC for the first time. All told, TACC expects one-third of the power for Frontera to come from renewable sources.

These include long-term storage for sharing datasets with collaborators; access to additional types of computing processors and architectures that will appear after Frontera launches; cloud-based services like image classification; and Virtual Desktop Interfaces that allow a cloud-based filesystem to look like one’s home computer.

The modern scientific computing landscape is changing rapidly,” Stanzione said. “Frontera’s computing ecosystem will be enhanced by playing to the unique strengths of the cloud, rather than competing with them.”

Software & Containers

When the applications that researchers rely on are not available on HPC systems, it creates a barrier to large-scale science. For that reason, Frontera will support the widest catalog of applications of any large-scale scientific computing system in history.

TACC will work with application teams to support highly tuned versions of several dozen of the most widely used applications and libraries. Moreover, Frontera will provide support for container-based virtualization, which sidesteps the challenges of adapting tools to a new system while enabling entirely new types of computation.

With containers, user communities develop and test their programs on laptops or in the cloud, and then transfer those same workflows to HPC systems using programs like Singularity. This facilitates the development of event-driven workflows, which automate computations in response to external events like natural disasters, or for the collection of data from large-scale instruments and experiments.
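As a rough illustration of that portability, the sketch below builds the same `singularity exec` invocation that could be issued on a laptop, in the cloud, or on an HPC system. The image name `analysis.sif` and the script `run_model.py` are hypothetical placeholders, and nothing here reflects Frontera’s actual configuration; the command is only run if the Singularity command-line tool and the image happen to be present.

```python
import os
import shutil
import subprocess


def singularity_exec_command(image, command):
    """Build the argument list for running `command` inside a container image."""
    return ["singularity", "exec", image, *command]


# Hypothetical image and script names, used only for illustration.
cmd = singularity_exec_command("analysis.sif", ["python3", "run_model.py"])
print(" ".join(cmd))

# Attempt the run only when both the CLI and the image file exist locally.
if shutil.which("singularity") and os.path.exists("analysis.sif"):
    subprocess.run(cmd, check=False)
else:
    print("Singularity or the image is not available here; command not run.")
```

Because the command line is identical wherever the image is available, the workflow developed on one machine can be transferred unchanged to another.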

“Frontera will be a more modern supercomputer, not just in the technologies it uses, but in the way people will access it,” Stanzione said.

A Frontier System to Solve Frontier Challenges

Talking about a supercomputer in terms of its chips and access modes is a bit like talking about a telescope in terms of its lenses and mounts. The technology is important, but the ultimate question is: what can it do that other systems can’t?

Entirely new problems and classes of research will be enabled by Frontera. Examples of projects Frontera will tackle in its first year include efforts to explore models of the Universe beyond the Standard Model in collaboration with researchers from the Large Hadron Collider; research that uses deep learning and simulation to predict in advance when a major disruption may occur within a fusion reactor to prevent damaging these incredibly expensive systems; and data-driven genomics studies to identify the right species of crops to plant in the right place at the right time to maximize production and feed the planet. [See more about each project in the box below.]

The LHC modeling effort, fusion disruption predictions, and genomic analyses represent the types of ‘frontier,’ Grand Challenge research problems Frontera will help address.

“Many phenomena that were previously too complex to model with the hardware of just a few years ago are within reach for systems with tens of petaflops,” said Stanzione.

A review committee made up of computational and domain experts will ultimately select the projects that will run on Frontera, with a small percentage of time reserved for emergencies (as in the case of hurricane forecasting), industry collaborations, or discretionary use.

It’s impossible to say what the exact impact of Frontera will be, but for comparison, Stampede1, which was one quarter as powerful as Frontera, enabled research that led to nearly 4,000 journal articles. These include confirmations of gravitational wave detections by LIGO that contributed to the 2017 Nobel Prize in Physics; discoveries of FDA-approved drugs that have been successful in treating cancer; and a greater understanding of DNA interactions enabling the design of faster and cheaper gene sequencers.

From new machine learning techniques to diagnose and treat diseases to fundamental mathematical and computer science research that will be the basis for the next generation of scientists’ discoveries, Frontera will have an outsized impact on science nationwide.

The NSF program that funds Frontera is titled, “Towards a Leadership-Class Computing Facility.” This phrasing is important because, as powerful as Frontera is, NSF sees it as a step toward even greater support for the nation’s scientists and engineers. In fact, the program not only funds the construction and operation of Frontera — the fastest system NSF has ever deployed — it also supports the planning, experimentation, and design required to build a system in five years that will be 10 times more capable than Frontera.

“We’ll be planning for the next generation of computational science and what that means in terms of hardware, architecture, and applications,” Stanzione said. “We’ll start with science drivers (the applications, workflows, and codes that will be used) and use those factors to determine the architecture and the balance between storage, networks, and compute needed in the future.”

Much like the data-driven design process that influenced the blueprint for Frontera, the TACC team will employ a “design — operate — evaluate” cycle on Frontera to plan Phase 2.

TACC has assembled a Frontera Science Engagement Team, consisting of more than a dozen leading computational scientists from a range of disciplines and universities, to help determine “the workload of the future,” meaning the science drivers and requirements for the next generation of systems. The team will also act as liaisons to the broader community in their respective fields, presenting at major conferences, convening discussions, and recruiting colleagues to participate in the planning.

Fusion physicist William Tang joined the Frontera Science Engagement Team in part because he believed in TACC’s vision for cyberinfrastructure. “AI and deep learning are huge areas of growth. TACC definitely saw that and encouraged that a lot more. That played a significant part in the winning proposal, and I’m excited to join the activities going forward,” Tang said.

A separate technology assessment team will use a similar strategy to identify critical emerging technologies, evaluate them, and ultimately develop some as testbed systems.

TACC will upgrade and make available its FPGA testbed, which investigates new ways of using interconnected FPGAs as computational accelerators. The center also hopes to add an ARM testbed and other emerging technologies.

Other testbeds will be built offsite in collaboration with partners. TACC will work with Stanford University and Atos to deploy a quantum simulator that will allow them to study quantum systems. Partnerships with the cloud providers Microsoft, Google, and Amazon will allow TACC to track AMD (Advanced Micro Devices) solutions, neuromorphic prototypes, and tensor processing units.

Finally, TACC will work closely with Argonne National Laboratory to assess the technologies that will be deployed in the Aurora21 system, which will enter production in 2021. TACC will have early access to the same compute and storage technologies that will be deployed in Aurora21, as well as Argonne’s simulators, prototypes, software tools, and application porting efforts, which TACC will evaluate for the academic research community.

“The primary compute elements of Frontera represent a relatively conservative approach to scientific computing,” Minyard said. “While this may remain the best path forward through the mid-2020s and beyond, a serious evaluation of a Phase 2 system will require not only projections and comparisons, but hands-on access to future technologies. TACC will provide the testbed systems not only for our team and Phase 2 partners, but to our full user community as well.”

Using the “design — operate — evaluate” process, TACC will develop a quantitative understanding of present and future application performance. It will build performance models for the processors, interconnects, storage, software, and modes of computing that will be relevant in the Phase 2 timeframe.

“It’s a push/pull process,” Stanzione said. “Users must have an environment in which they can be productive today, but that also incentivizes them to continuously modernize their applications to take advantage of emerging computational technologies.”

The deployment of two to three small-scale systems at TACC will allow the assessment team to evaluate the performance of the system against their model and gather specific feedback from the NSF science user community on usability. From this process, the design of the Phase 2 leadership-class system will emerge.

With Great Power Comes Great Responsibility

The design process will culminate some years in the future. Meanwhile, in the coming months, Frontera’s server racks will begin to roll into TACC’s data center. From January to March 2019, TACC will integrate the system with hundreds of miles of networking cables and install the software stack. In the spring, TACC will host an early user period where experienced researchers will test the system and work out any bugs. Full production will begin in the summer of 2019.

“We want it to be one of the most useable and accessible systems in the world,” Stanzione said. “Our design is not uniquely brilliant by us. It’s the logical next step — smart engineering choices by experienced operators.”

It won’t be TACC’s first rodeo. Over 17 years, the team has developed and deployed more than two dozen HPC systems totaling more than $150 million in federal investment. The center has grown to nearly 150 professionals, including more than 75 PhD computational scientists and engineers, and earned a stellar reputation for providing reliable resources and superb user service. Frontera will provide a unique resource for science and engineering, capable of scaling to the very largest capability jobs, running the widest array of jobs, and supporting science in all forms.

The project represents the achievement of TACC’s mission of “Powering Discoveries to Change the World.”

“Computation is a key element to scientific progress, to engineering new products, to improving human health, and to our economic competitiveness. This system will be the NSF’s largest investment in computing in the next several years. For that reason, we have an enormous responsibility to our colleagues all around the U.S. to deliver a system that will enable them to be successful,” Stanzione said. “And if we succeed, we can change the world.”