In this special guest feature, Bob Fletcher from Verne Global reflects on how liquid cooling technologies on display at SC19 represent more than just a wave.

Perhaps it is because I returned from my last business trip of 2019 to a flooded house, but more likely it is all the wicked cool water-cooled equipment I encountered at SC19 that has put me in a watery mood!

Many of the hardware vendors at SC19 were pushing their exascale-ready devices, and about 15% of the devices on a typical computer manufacturer’s booth were water-cooled. Adding rack-level water cooling is theoretically straightforward, so I spent a few minutes checking out the various options.

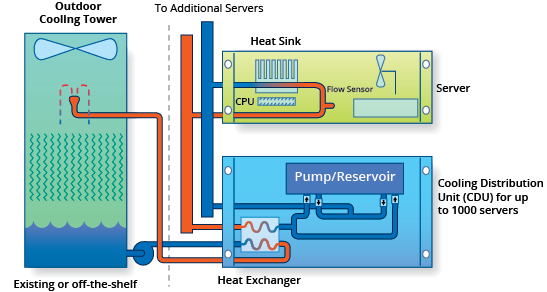

The first thing I noticed is that the chilled water from outside terminates in a Cooling Distribution Unit (CDU), which then has its own water-cooling loop connected to the computing equipment. Here is a great cooling system schematic from Chilldyne:

Traditionally the large supercomputer/mainframe manufacturers like IBM, Cray, Fujitsu and NEC provided water-cooling as a component of their solution. Over the last 20 years, or so, independent cooling solution products have become available from companies like CoolIT, Asetek, and others. More recently different technologies have been added to the mix from companies like Chilldyne and CloudandHeat.

CDUs have become technologically diverse, with some companies like CoolIT and Nortek selling high-pressure (15-20psi, 1-1.4bar) CDUs with the ability to drive up to, and sometimes beyond, 1MW of equipment per CDU, and a couple of companies selling a dual-vacuum low-pressure (8-10psi, 0.6-0.7bar vacuum differential) solution designed by Chilldyne. I enjoyed determining the pros and cons of each one. The high-pressure CDUs are traditional and almost all the demo units on the show floor were configured for them.
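To put the pressure and power figures above in perspective, here is a minimal Python sketch. It converts the quoted psi values to bar and estimates how much water a CDU would need to circulate to carry 1MW of heat, using the standard relation Q = m_dot * c_p * delta_T. The 10 K temperature rise across the loop is purely an assumption for illustration, not a figure from any vendor, and the function names are hypothetical.

```python
# Illustrative constants. The temperature rise used below is an assumption,
# not a vendor specification.
PSI_TO_BAR = 0.0689476          # 1 psi expressed in bar
WATER_SPECIFIC_HEAT = 4186.0    # J/(kg*K), liquid water near room temperature
WATER_DENSITY = 1.0             # kg per litre, approximate


def psi_to_bar(psi: float) -> float:
    """Convert a pressure from psi to bar."""
    return psi * PSI_TO_BAR


def flow_litres_per_minute(heat_watts: float, delta_t_kelvin: float) -> float:
    """Water flow needed to carry heat_watts at a given temperature rise.

    Uses Q = m_dot * c_p * delta_T, solved for the mass flow m_dot.
    """
    mass_flow_kg_per_s = heat_watts / (WATER_SPECIFIC_HEAT * delta_t_kelvin)
    return mass_flow_kg_per_s / WATER_DENSITY * 60.0


if __name__ == "__main__":
    # Pressure values quoted in the article
    for psi in (8, 10, 15, 20):
        print(f"{psi} psi = {psi_to_bar(psi):.2f} bar")
    # 1 MW per CDU, with an assumed 10 K rise across the loop
    print(f"{flow_litres_per_minute(1_000_000, 10.0):.0f} L/min")
```

Under that assumed 10 K rise, 1MW works out to roughly 1,400 litres of water per minute through the loop, which hints at why piping and connector quality matter so much. The converted pressures are consistent with the rounded bar figures quoted above.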

Their pros are:

- Industry familiarity

- Simple lower-cost CDU design

- Proven designs

And their cons are:

- More expensive high-quality pressure piping

- More expensive quality no-drip connectors

- Servers are pre-primed with glycol for shipping

- Careful cluster water-charging installation necessary

Chilldyne has an innovative alternative using a more sophisticated CDU but simpler piping and connectors, much like you would see in a fish tank. Designed by Dr. Steve Harrington, a former rocket scientist, the system supplies both inlet and outlet water under vacuum, so any break in the piping results in no drips or water damage, without the expensive connectors. The concept is simple, but understanding the hardware took a few minutes. The system is controlled by a very large vacuum pump capable of collecting all the water in the CDU continuously. This pump is connected to an air/water separator which deals with any leak- or priming-related bubbles. The water under vacuum is pumped to the computer equipment using a 3-chamber water pump with flapper valves between the chambers.

On their booth I cut a pipe between 2 CPUs inside a server and the water from both sides of the cut flowed back to the CDU with not a drip in sight – wicked cool!

The demo server showing inexpensive piping which I cut without a drop

Their pros are:

- No leak technology

- Inexpensive piping

- Inexpensive connectors

- No water-charging necessary

And their cons are:

- Slightly more expensive CDUs (likely offset by the piping and connector costs)

- Slightly lower MTTF due to its complexity

- New technology just starting to gain market acceptance

In 2018 about 3% of outages were attributed to “Colocation provider failure (not power related)” by the Uptime Institute Global Survey of Data Center Operators and Managers, well below the “On-premise data center power failure”, “Network failure”, and “Software – IT systems error” levels of about 30% each. Perhaps 1% of outages are attributed to water issues, although it’s likely that many water issues don’t cause a direct outage but do reduce the long-term reliability of the affected computing hardware. Nevertheless, water issues are serious and will ruin your work or vacation day.

My intuition suggests that the larger established water-cooling users will mostly stick with their familiar high-pressure solution, but the newer green field deployments will be fertile ground for the new vacuum CDUs. This applies in particular to the AI community, as their compute accelerators become increasingly water-cooled while maintaining their short 2-year product life.

Inspur were showcasing new HPC systems with Natural Circulation Evaporative Cooling technology, which pairs high-density computing servers with a cooling approach that Inspur describes as more reliable, more energy-saving, and easier to deploy than other liquid cooling solutions.

Initially I struggled to differentiate this from the original scientific loop heat pipes used for cooling in spacecraft and similar applications, and from more recent technology applying them to data centers. A great article from serverthehome.com added some clarity. It appears that the Natural Circulation system uses gravity and density changes, rather than capillary action, to move the evaporated cooling liquid, now vapor at 60°C, to the condenser at the top of the rack, where fans re-condense it and allow gravity to return it to the server.

This technology is great for distributing the energy from large heat sources to outside of the rack, a tricky problem at high density altitudes like Denver but not so much in Iceland, but thereafter the conventional data center cooling infrastructure takes over.

As AI drives an insatiable desire for an ever-increasing amount of computing power, water cooling will become more widespread away from the supercomputing and research facilities. It will perhaps start with small cooling loops to keep select CPUs and GPUs cool and reliable, followed by wholesale water cooling of every compute element in the server clusters, facilitating dense exaflop machines.

Irrespective of your future HPC/AI plans, with or without water, cloud or colocation, Verne Global has your bases covered with green free-air cooling and low-cost power.

Bob Fletcher is VP of Artificial Intelligence, Verne Global