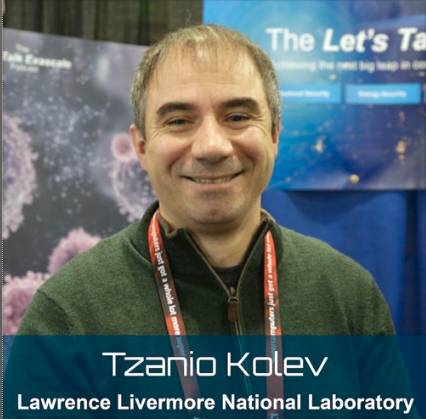

In this Let’s Talk Exascale podcast, Tzanio Kolev from LLNL describes the work at the Center for Efficient Exascale Discretizations (CEED), one of six co-design centers within the Exascale Computing Project.

CEED is a research partnership involving more than thirty computational scientists from two DOE national laboratories and five universities. The co-design centers target crosscutting algorithmic methods that capture the most common patterns of computation and communication (known as motifs) in the ECP applications. The goal of the co-design activity is to integrate the rapidly developing software stack with emerging hardware technologies while developing software components that embody the most common application motifs.

Discretization methods divide a large simulation into smaller components in preparation for computer analysis. CEED is ECP’s hub for partial differential equation discretizations on unstructured grids, providing user-friendly software, mathematical expertise, community standards, benchmarks, and miniapps as well as coordination between the applications, hardware vendors, and Software Technology (ST) efforts in ECP.

Kolev said that CEED helps applications leverage future architectures by providing them with state-of-the-art discretization libraries that will exploit the hardware on upcoming exascale computing systems and deliver a significant performance gain over what are referred to as conventional low-order methods.

He said the center is delivering efficient, user-friendly high-order partial differential equation discretization components for the entire simulation pipeline, from meshing to discretizations, solvers, adaptive mesh refinement, dense tensor contractions, visualizations, and more.

The CEED team is not just porting previously known algorithms to new architectures, Kolev said; they are inventing new algorithms and revisiting high-order methods that were used in the past but whose applicability was limited by the hardware.

“With the new data-intensive hardware that we have now, where performance is governed by memory transfers, high-order methods are becoming really important and offer a lot of benefits from the perspective of HPC [high-performance computing] utilization,” Kolev said.

The focus of CEED’s research is on harnessing the mathematical power of high-order discretizations, which can provide both increased accuracy and better HPC performance. “High-order methods are powerful, but you have to control that power; so, with great power, great responsibility,” Kolev said. “That is one of the themes with high-order methods research.”
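The accuracy claim can be illustrated with a small sketch. The code below is not CEED, MFEM, or libCEED code; it is a hypothetical, standard-library-only example that uses plain piecewise polynomial interpolation of sin(x) as a stand-in for a real PDE discretization. Both runs use the same 13 nodes on [0, π], so the only difference is the polynomial order per element. The function names and parameters are assumptions made for illustration.

```python
import math


def lagrange_interpolate(nodes, values, x):
    """Evaluate the Lagrange polynomial through (nodes, values) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(nodes, values)):
        basis = 1.0
        for j, xj in enumerate(nodes):
            if j != i:
                basis *= (x - xj) / (xi - xj)
        total += yi * basis
    return total


def max_interpolation_error(f, a, b, n_elements, order, samples_per_element=50):
    """Largest sampled error of piecewise degree-`order` interpolation of f on [a, b]."""
    width = (b - a) / n_elements
    worst = 0.0
    for e in range(n_elements):
        left = a + e * width
        # Equispaced nodes inside one element (an illustrative choice).
        nodes = [left + width * k / order for k in range(order + 1)]
        values = [f(x) for x in nodes]
        for s in range(samples_per_element + 1):
            x = left + width * s / samples_per_element
            approx = lagrange_interpolate(nodes, values, x)
            worst = max(worst, abs(f(x) - approx))
    return worst


# Same 13 distinct nodes in both cases: 12 linear elements vs. 3 quartic elements.
low = max_interpolation_error(math.sin, 0.0, math.pi, n_elements=12, order=1)
high = max_interpolation_error(math.sin, 0.0, math.pi, n_elements=3, order=4)
print(f"order 1, 12 elements: max error = {low:.2e}")
print(f"order 4,  3 elements: max error = {high:.2e}")
```

For a smooth function like this one, the higher-order run should report a much smaller error for the same number of nodes. The sketch says nothing about the performance side of the claim, which depends on how the operators are implemented on a given machine.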

CEED also deals with low-level algebraic operations. “To extract maximum performance from our algorithms, we really need to co-design all of these pieces together,” Kolev said. “Our benchmarks are focused on optimizing that and working with vendors to understand how best we can fuse operations to get maximum performance.”

Coordination and collaboration are key to the success of CEED. “It is one of the most exciting things about working on this project,” Kolev said. “Our users get the benefit of not just the expertise of one group but also from some of the best researchers in the country. I really enjoy the team that we have, as well as the cooperation and coordination with other data computational scientists in ECP. That’s been very rewarding.”

At the top of CEED’s list of successes is becoming the high-order hub for the HPC community, especially because such a resource had not existed before. “We’ve done a lot of things around standardization and proposing new benchmarks,” Kolev said, adding that the team’s creation and dissemination of benchmark problems has elicited constructive feedback both internally within ECP and externally from the broader HPC community.

CEED is also particularly proud of the impact of its Laghos and Nekbone miniapps, which have been used extensively in procurement, vendor interactions, and ST interactions. “They have been very successful, and Laghos is something new that we developed under CEED,” Kolev said.

The center has delivered to users several discretization libraries, including libCEED, MFEM, and Nek5000. “MFEM is probably the first general finite-element library that has full support for GPUs with really, really cutting-edge performance,” Kolev said.

Currently, the CEED team is helping applications with their specific problems and with optimizations for the pre-exascale Summit and Sierra machines. They’re also looking ahead and working on performance and software for AMD GPUs as well as the Intel GPUs that will be part of Aurora, the exascale machine set for 2021.

CEED’s enduring legacy will be its role as a hub for the computational motif of high-order methods that will enable a wide range of applications to run efficiently on future hardware. However, to leave this level of value for the future, the center’s contribution will need to go beyond helping applications with discretization methods.

“For applications to really use high-order technologies, they need much more,” Kolev said. “They need a high-order ecosystem, and that ecosystem starts with high-order meshing, high-order solvers, and high-order visualization. Discretization is just a part of that, and so we’re trying to play an active role in creating that ecosystem. . . . I hope our legacy will be that we help create a lot of the components that will go into the high-order software ecosystem. This will be really, really important for the future.”

Source: Scott Gibson at the Exascale Computing Project