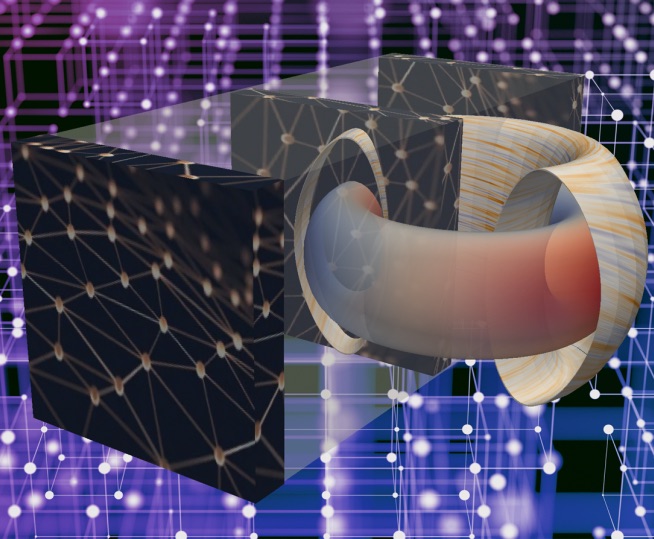

Princeton’s Fusion Recurrent Neural Network (FRNN) code uses convolutional and recurrent neural network components to integrate both spatial and temporal information for predicting disruptions in tokamak plasmas with unprecedented accuracy and speed on top supercomputers. (Image: Eliot Feibush, Princeton Plasma Physics Laboratory)

Scientists from Princeton Plasma Physics Laboratory are leading an Aurora Early Science Program (ESP) project that will use AI, deep learning, and exascale computing power to advance fusion energy research.

Argonne National Laboratory will be home to one of the nation’s first exascale systems when Aurora arrives in 2021. To prepare codes for the architecture and scale of the supercomputer, 15 research teams are taking part in the Aurora Early Science Program through the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science User Facility. With access to pre-production time on the system, these researchers will be among the first in the world to use an exascale machine for science. This is one of their stories.

If you’re going to make predictions designed to control ultra-hot, magnetically confined plasma, those predictions had better be pretty accurate to be useful. Engineers working with the potential energy source have estimated a window of only 30 milliseconds to control instabilities that can disrupt the energy production process and damage the plasma confinement device.

With a suite of the world’s most powerful path-to-exascale supercomputing resources at their disposal, William Tang and colleagues are developing models of disruption mitigation systems (DMS) to increase warning times and work toward eliminating major interruptions of fusion reactions in the production of sustainable clean energy.

Magnetically confined fusion plasma is the topic of much research and the focus of a major initiative called ITER. Over the last decade, researchers from across the country have used the computational resources at Argonne and other DOE labs to study the properties and dynamics of fusion plasma and the means of confining and harnessing its energy, with much of that work focused on ITER.

Among them is Tang, professor of astrophysical sciences at Princeton University and principal research physicist at DOE’s Princeton Plasma Physics Laboratory (PPPL). He is leading a project in the ALCF’s Aurora Early Science Program that uses artificial intelligence (AI), deep learning, and exascale computing power to advance our understanding of disruptions in magnetic confinement devices known as tokamaks.

Using some of the most powerful high-performance computers in the world, including Argonne’s Theta system, Tang and colleagues from Princeton and PPPL aim to predict, quickly and accurately, the onset of disruptions that can rapidly release the plasma from its magnetic confinement and cause structural damage to the reactor.

“To make an economical fusion reactor viable, you really have to be able to predict and then control these disturbances,” notes Tang. “In order to train the predictor to achieve the high accuracy needed, you have to use the more powerful path-to-exascale supercomputers, and that’s what we’re doing right now.”

So far, the software has run successfully on GPU-enabled pre-exascale systems such as Summit at DOE’s Oak Ridge National Laboratory, as well as on Japan’s top machines, Tsubame 3.0 and the brand-new ABCI, a dedicated AI supercomputer.

“There’s a voracious appetite for more powerful and faster supercomputing capabilities, approaching exascale and beyond,” says Tang. “The reason that Argonne is interested in our software, for this very important project to deliver clean energy, is that it has been well vetted and runs very efficiently on these advanced systems.”

Intel will deliver the Aurora supercomputer, the United States’ first exascale system, to Argonne National Laboratory in 2021. Aurora will incorporate a future Intel Xeon Scalable processor, Intel Optane DC persistent memory, Intel’s Xe compute architecture, and the Intel oneAPI programming framework, all anchored to Intel’s six key pillars of innovation. (Credit: Argonne National Laboratory)

Aurora’s exascale capability combined with artificial intelligence techniques like deep neural networks will enable researchers to better train predictive models using the massive amounts of data derived from actual fusion plasma laboratory experiments. Even with these resources, it remains a huge undertaking given the complexities associated with a wide range of spatio-temporal scales, multi-physics considerations, and the variety of events that lead to and cause disruptions.

Once the predictors are trained, the associated software must be made compatible with the plasma control system’s (PCS) software to enable real-time deployment on an active machine (i.e., a tokamak like ITER) designed to carry out the fusion experiment. Actuators in the PCS control real-time characteristics of the plasma, or the plasma state, such as its shape and pressure profile.

In advance of the planned “first plasma” on ITER around 2026, Tang’s team can test their pre-exascale predictors at existing experimental facilities, including the DIII-D tokamak at General Atomics in San Diego, the Joint European Torus in the UK, and the long-pulse superconducting KSTAR tokamak in Korea.

“That’s a real advantage if you’re trying to do a complicated prediction on a control problem, let’s say in robotics, where direct access allows you to see how well you’re controlling the way the robot moves,” says Tang.

In this case, the experimental tokamak facilities allow researchers to observe the real-time reaction of plasma to the predictor-guided actuator mechanisms meant to relax the plasma to a state much less susceptible to disruptions.

According to a Nature article the group published in April 2019, initial results of their most recent work “illustrate the potential for deep learning to accelerate progress in fusion-energy science and, more generally, in the understanding and prediction of complex physical systems.”

The team hopes to provide the best predictors of disruptive events within that 30-millisecond window and identify earlier warning signals in the data. The ability to deliver significantly longer lead times would allow engineers to control the hot plasma or at least slowly “ramp down” the process without completely terminating it.
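The 30-millisecond requirement can be made concrete with a small sketch of how a predictor's output might be turned into an alarm and judged against that window. It assumes, as a simplification not taken from the FRNN code, that a trained predictor emits one disruption score between 0 and 1 per time step, that an alarm is raised the first time the score crosses a fixed threshold, and that an alarm counts as useful only if it precedes the disruption by at least 30 ms. The function names, outcome labels, and sample scores are all hypothetical.

```python
"""Hypothetical sketch: judging a disruption alarm against a 30 ms window.

Assumptions (illustrative only, not from the FRNN code): one score in [0, 1]
per time step, an alarm on the first threshold crossing, and a minimum
useful warning time of 30 ms.
"""

from typing import Optional, Sequence

MIN_WARNING_MS = 30.0  # control window cited in the article


def first_alarm_time(times_ms: Sequence[float], scores: Sequence[float],
                     threshold: float) -> Optional[float]:
    """Return the first time at which the score reaches the threshold, or None."""
    for t, s in zip(times_ms, scores):
        if s >= threshold:
            return t
    return None


def classify_shot(times_ms: Sequence[float], scores: Sequence[float],
                  threshold: float,
                  disruption_time_ms: Optional[float]) -> str:
    """Classify one plasma shot by when (or whether) the alarm fires.

    disruption_time_ms is None for a shot that did not disrupt.
    """
    alarm = first_alarm_time(times_ms, scores, threshold)
    if disruption_time_ms is None:
        # Any alarm on a quiet shot is a false alarm.
        return "false_alarm" if alarm is not None else "true_negative"
    if alarm is None:
        return "missed"
    warning_ms = disruption_time_ms - alarm
    # An alarm with less than 30 ms of lead time is too late to act on.
    return "true_positive" if warning_ms >= MIN_WARNING_MS else "late_alarm"


if __name__ == "__main__":
    # Hypothetical shot sampled every 10 ms, disrupting at t = 100 ms.
    times = list(range(0, 101, 10))
    scores = [0.05, 0.05, 0.1, 0.1, 0.2, 0.3, 0.6, 0.8, 0.9, 0.95, 0.99]
    print(classify_shot(times, scores, 0.5, 100))   # alarm at 60 ms -> true_positive
    print(classify_shot(times, scores, 0.85, 100))  # alarm at 80 ms -> late_alarm
```

In this toy setup, raising the threshold suppresses false alarms on quiet shots but can turn an early warning into a late one, so the threshold trades false alarms against warning time.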

“It’s complicated, but the payoff is huge,” says Tang. “Our predictor models trained on Aurora will be critical to the experimental research carried out at ITER and take us many steps forward toward the ultimate goal of a clean, carbon-free source of energy on a huge scale – an achievement that would really revolutionize the way we live.”

A further description of this research can be found in the following article: “Predicting disruptive instabilities in controlled fusion plasmas through deep learning,” Julian Kates-Harbeck, Alexey Svyatkovskiy, and William Tang, Nature, Vol. 568, April 25, 2019.

Source: Argonne