Gilad Shainer

Weather and climate models are both compute- and data-intensive. Forecast quality scales with modeling complexity and resolution. Resolution depends on the performance of supercomputers. And supercomputer performance depends on the underlying interconnect technology: to get higher performance, the interconnect must be able to move data quickly, effectively and in a scalable manner across compute resources.

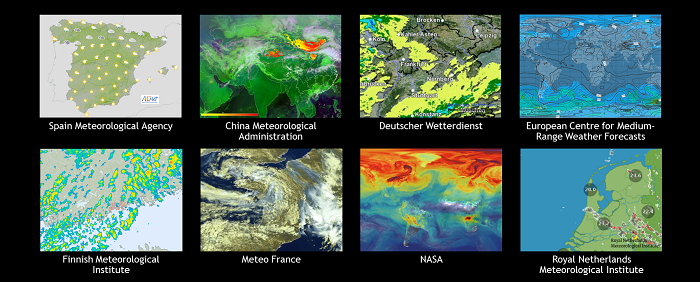

That’s why many of the world’s leading meteorological services have chosen NVIDIA Mellanox InfiniBand networking to accelerate their supercomputing platforms, including the Spanish Meteorological Agency (AEmet), Beijing Meteorological Service, China Meteorological Administration (CMA), German Climate Computing Centre (DKRZ), German National Meteorological Service (Deutscher Wetterdienst or DWD), European Centre for Medium-Range Weather Forecasts (ECMWF), Finnish Meteorological Institute (FMI), Royal Netherlands Meteorological Institute (KNMI), Meteo France, National Aeronautics and Space Administration (NASA), and many more.

The technological advantages of InfiniBand have made it the de facto standard for climate research and weather forecasting applications, delivering higher performance, scalability and resiliency than all other interconnect technologies.

InfiniBand technology is based on four main fundamentals:

- The first fundamental is the design of a very smart endpoint, one that can execute and manage all of the network functions. Since the endpoint is located near CPU/GPU memory, it can manage memory operations very efficiently, for example via RDMA or GPUDirect RDMA.

- The second fundamental is a switch network that is designed for scale. It is a pure software-defined network (SDN). For example, InfiniBand switches do not require an embedded server within every switch appliance to manage the switch and run its operating system, as Ethernet switches do. This makes InfiniBand a leading cost-performance network fabric compared to Ethernet or any proprietary network. It also enables technology innovations such as In-Network Computing, which performs calculations on data as it is transferred through the network. An important example is the Scalable Hierarchical Aggregation and Reduction Protocol (SHARP)™ technology, which improves MPI performance by offloading collective operations from the CPU to the switch network, eliminating the need to send data multiple times between endpoints.

- Centralized management is the third fundamental. One can manage, control and operate the InfiniBand network from a single place. One can design and build any sort of network topology, and customize and optimize the data center network for its target applications.

- Last but not least, InfiniBand is a standard technology with open-source software and open APIs, ensuring backward and forward compatibility. Unlike proprietary networks, InfiniBand lets IT managers protect their data center investments and avoid redesigning their software over and over again.
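To make the In-Network Computing idea from the second fundamental more concrete, here is a minimal toy model in Python. It is only a sketch under stated assumptions: it does not use or reproduce the SHARP protocol or any NVIDIA API, and the function names and the `radix` parameter are hypothetical. It compares a host-based allreduce (recursive doubling, where endpoints exchange partial sums over several rounds) with a switch-tree reduction in which each endpoint sends its data once and the "switches" do the summing.

```python
"""Toy model of a sum-allreduce; NOT the SHARP protocol or any real API."""
from typing import List, Tuple


def recursive_doubling_allreduce(values: List[int]) -> Tuple[List[int], int]:
    """Host-based allreduce: endpoints swap partial sums in log2(N) rounds.

    Returns (per-endpoint results, number of sends made by each endpoint).
    Assumption: the endpoint count is a power of two.
    """
    n = len(values)
    assert n > 0 and n & (n - 1) == 0, "toy model needs a power-of-two count"
    current = list(values)
    sends_per_endpoint = 0
    distance = 1
    while distance < n:
        # Endpoint i exchanges its partial sum with partner i XOR distance.
        current = [current[i] + current[i ^ distance] for i in range(n)]
        sends_per_endpoint += 1
        distance *= 2
    return current, sends_per_endpoint


def switch_tree_allreduce(values: List[int], radix: int = 4) -> Tuple[List[int], int]:
    """In-network style allreduce: data is summed on its way up a switch tree.

    Each endpoint sends its value once. Every (hypothetical) switch combines
    up to `radix` inputs into one value and forwards it upward; the root's
    result is then distributed back to all endpoints.
    """
    assert values and radix >= 2
    level = list(values)
    while len(level) > 1:
        # One switch per group of `radix` children reduces their inputs.
        level = [sum(level[i:i + radix]) for i in range(0, len(level), radix)]
    total = level[0]
    return [total] * len(values), 1  # each endpoint sent exactly once


if __name__ == "__main__":
    data = list(range(16))
    host_result, host_sends = recursive_doubling_allreduce(data)
    tree_result, tree_sends = switch_tree_allreduce(data, radix=4)
    print(host_result[0], host_sends)  # 120 4
    print(tree_result[0], tree_sends)  # 120 1
```

Both approaches produce the same sum on every endpoint, but in this simplified model the host-based version has each endpoint send four times for 16 endpoints, while the tree version has each endpoint send once. Real fabrics involve many details this sketch ignores, such as message sizes, link bandwidth, and how results are distributed.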

These fundamentals make InfiniBand the connectivity of choice for compute- and data-demanding applications, with weather and climate simulations being one prominent example.

The NVIDIA Mellanox networking technology team has also been working with the German Climate Computing Centre (DKRZ) on optimizing the performance of the ICON application, as the first project in a multi-phase collaboration. ICON is a unified weather forecasting and climate model, jointly developed by the Max-Planck-Institut für Meteorologie and Deutscher Wetterdienst (DWD). By optimizing the application’s data exchange modules to take advantage of InfiniBand’s capabilities, the team has demonstrated a nearly 20 percent increase in overall application performance.

DKRZ has selected HDR InfiniBand to accelerate its next-generation supercomputer, due to be available in 2021, which will increase the center’s computing power fivefold compared with the system currently in operation. The new supercomputer will allow more detailed simulations and simulations of longer time periods, and thus deeper insights into climate events.

Beijing Meteorological Service has selected 200 Gigabit HDR InfiniBand interconnect technology to accelerate its new supercomputing platform, which will be used for enhancing weather forecasting, improving climate and environmental research, and serving the weather forecasting information needs of the 2022 Winter Olympics in Beijing.

Meteo France, the French national meteorological service, has selected HDR InfiniBand to accelerate its two new large-scale supercomputers. The agency provides weather forecasting services for companies in transport, agriculture, energy and many other industries, as well as for a large number of media channels and worldwide sporting and cultural events. One of the systems debuted on the TOP500 list released in June 2020.

HDR InfiniBand has also been selected to accelerate the new supercomputer of the European Centre for Medium-Range Weather Forecasts (ECMWF). Slated for deployment in 2020, the system will support weather forecasting and prediction researchers from over 30 countries across Europe. It will increase the center’s weather and climate research compute power by 5X, making it one of the world’s most powerful meteorological supercomputers. The new platform will enable running nearly twice as many higher-resolution probabilistic weather forecasts in less than an hour, improving the ability to monitor and predict increasingly severe weather phenomena and enabling European countries to better protect lives and property.

HDR 200Gb/s InfiniBand delivers extremely low latency and high data throughput. It also includes high-value features such as smart In-Network Computing acceleration engines via Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) technology, high network resiliency through NVIDIA Mellanox SHIELD’s self-healing network capabilities, MPI offloads, enhanced congestion control and adaptive routing.

These capabilities deliver leading performance and scalability for compute- and data-intensive applications, along with a dramatic boost in throughput and cost savings, paving the way to more scientific discovery.