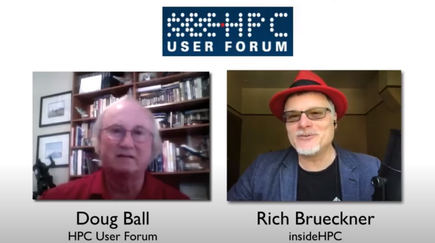

Doug Ball is a leading expert in computational fluid dynamics and aerodynamic engineering, disciplines he became involved with more than 40 years ago. In this interview with the late Rich Brueckner of insideHPC, Ball discusses the increased scale and model complexity that HPC technology has come to handle and, looking to the future, his anticipation of reduction in package sizing enabling HPC to be embedded in cars, planes and other autonomous mobility devices.

In This Update… from the HPC User Forum Steering Committee

By Steve Conway and Thomas Gerard

After the global pandemic forced Hyperion Research to cancel the April 2020 HPC User Forum planned for Princeton, New Jersey, we decided to reach out to the HPC community in another way — by publishing a series of interviews with members of the HPC User Forum Steering Committee. Our hope is that these seasoned leaders’ perspectives on HPC’s past, present and future will be interesting and beneficial to others. To conduct the interviews, Hyperion Research engaged Rich Brueckner (1962-2020), president of insideHPC Media. We welcome comments and questions addressed to Steve Conway, sconway@hyperionres.com or Earl Joseph, ejoseph@hyperionres.com.

This interview is with Doug Ball, who served as enterprise director for computational fluid dynamics within Boeing’s Enterprise Technology Strategy, providing strategic guidance and managing investments in CFD technologies across Boeing’s businesses. Earlier, he was chief engineer for all of aerodynamics within Boeing Commercial Airplanes. Throughout his career, he served as a consultant to NASA, the National Research Council, the U.S. Air Force and The Ohio State University.

Before joining Boeing, Ball worked for General Dynamics Corporation as an aerodynamicist. Ball earned a bachelor of science degree in aeronautical and astronautical engineering and a master of science degree, both from The Ohio State University. In 2006, he received a distinguished alumni award from the university’s College of Engineering, and the Garvin L. Von Eschen award for leadership in aerospace engineering from the mechanical and aerospace engineering department in 2013.

The HPC User Forum was established in 1999 to promote the health of the global HPC industry and address issues of common concern to users. More than 75 HPC User Forum meetings have been held in the Americas, Europe and the Asia-Pacific region since the organization’s founding.

Brueckner: Today my guest is Doug Ball. Doug, welcome.

Ball: Thank you, glad to be here.

Brueckner: Well, Doug, I know you’ve been retired for six years now, right? Can you tell me how you got started in HPC?

Ball: That’s an easy one. When I left General Dynamics and went to Boeing in early 1977, my first job was actually developing a CFD tool, a 2D code to be able to analyze the flows over multi-element airfoils. In airplane terms, that’s the flaps for takeoff and landing, and it was modelling it with separated flows, which had never been done before. I’d had one class in computer programming. I’d never done anything in CFD before, so it was trial by fire.

Brueckner: Well, great. So, can you tell us about your HPC background. What happened after that?

Ball: As I said, I really didn’t have any formal training, but I was hired into aero research at Boeing and fortunately there were two classes of people that were all very friendly and very willing to serve as mentors: I had good computer scientists and great mathematicians. So, whenever I came up against something I didn’t understand, all I had to do was turn around and ask somebody and I had all the help I could get. So, that’s pretty much how I got into it.

I’ve either used CFD when I was an engineer or I’ve managed CFD developers or I’ve managed the users of CFD practically throughout my entire career. It was at a time when airplane development costs were becoming an issue and people were looking at, “how can we reduce the amount of wind tunnel testing?” It was either a case of being at the right place at the right time or being at the wrong place at the wrong time, I haven’t figured that one out yet.

Brueckner: So, Doug, you must’ve seen a lot of changes in HPC over the years. What were the biggest changes?

Ball: This was the fun question to contemplate because it took up probably 90 percent of the time that I spent putting my answers together. When I started, Seymour Cray was just starting to come on the scene. That 2D code that I talked about, we were cramming that into a CDC 6600. That’s where I learned about overlays, and also learned about how little memory there was available to do anything. And, of course, the 6600, while it was a good machine, it clearly wasn’t as fast as the first Cray machine that came on. And, of course, Cray had a distinct advantage over everybody else in that, for a user, the matrix arithmetic was as easy as the scalar arithmetic [on the Cray system]. They had all the libraries and everything, so you could say A times B, and A was a huge matrix and B was a huge matrix and, hey, C had the answer! Fabulous from a user standpoint.

But we still had the issue of memory. So, the speed went up. I should probably back up and say that at Boeing, as HPC capability increased, we always kept expanding the size of the problem, the magnitude of the problem, the level of fidelity of the problem to fill up whatever capability came along.

The next big improvement I would have to say that we experienced was the advent of SSD, solid state disk. Because before that, doing reads and writes with overlays and that kind of thing, writing to magnetic media is not the fastest process in the world, so having solid state disk was a huge boon to job runtimes, clock-times, and that’s what the users care about. They don’t care how much CPU time it takes. The only thing they care about is, “if I put my job in at four o’clock this evening, will it be available for me at seven o’clock tomorrow morning?” So that was the next big step. And, of course, the Cray improvements came along, and for the longest time we rode the coattails of NASA Ames. We actually modeled our first couple of data centers directly after the Ames facility, the NAS [NASA Advanced Supercomputing facility]. It was great because we could prototype our software, get everything up and running, then we would have our hardware delivered and, literally, by the time Cray said it’s ready to go, so were we, because we knew the software already ran: we’d been running it in Mountain View [NASA Ames center].

Brueckner: Any other big changes you’ve seen?

Ball: Obviously, I think the next step was the advent of parallelization. Parallelization came along at a time when it wasn’t obvious that Moore’s Law was going to keep working anymore for a single chip, but it also helped address the memory issue because now you could hang memory off of 50 processors, then 500 processors, then 5,000 processors and, of course, the challenges there were that the conductor has a bigger and bigger orchestra that he or she has to keep in sync. But it helped address the memory issue, so we could run larger and larger jobs and, obviously, the more processors you have, the more it reduces the cycle time in terms of getting those jobs done. That, to me, is where I’ve seen the speed thing come along, the big improvements.

On top of all of this, when you are an industrial user, the overarching thing is cost. So, you are always looking at how much is this going to cost? Can I get cost benefits? And believe it or not, we had one Cray purchase that we weren’t going to do because we couldn’t make the cost benefit analysis work until one of my really good engineers, John Bussoletti, went, “Wait a minute, have we looked at the cooling costs?” And when we looked at the whole data center, if we put this new processor in our analysis and took the old one out, the air conditioning requirements dropped dramatically and then it became a no-brainer to buy the new machine. Cost is always sitting there as a forcing function. And, of course, sometimes you can justify spending more on computing as long as you are confident that you would do less physical testing, and Boeing has been pretty successful with that.

Brueckner: Well, great. So, where do you think HPC is headed?

Ball: Wow, you know that is a really good question. Because I’m a user as opposed to someone who is heavily involved on the hardware side, I may not be the best person to answer that. But since you asked, I honestly think when you back up and look at what drove HPC to begin with, CFD and oil and gas, those people were really the big drivers early on. Then, we kind of became, I’m going to call it, two-bit players, and the gaming industry is what started really driving what was going on, as well as people like PayPal and Google. For them, HPC is not one job needing a million processors, it’s like a million jobs that all have to be done in the wink of an eye and they each get their own processor.

I’m not going to argue whether those are both HPC or not. That’s kind of a moot point. I looked at that and went, those were totally unrelated events and I’m going to speculate that autonomous mobility may be the driver for where HPC goes in the future. I’m leaving it open to whether it’s cars, boats, airplanes, helicopters, UAVs. All of these things are going to have to be able to analyze their situation, predict what outcomes are possible, and then they have to decide and act. They won’t necessarily be able to do that just by themselves.

So, I think we’re liable to see a drive towards how we can drive the package size of HPC down so that we can put it in all of these devices that are going to need it in the future, because they’re all going to have an overriding risk that a lot of these others don’t — the risk to human life. Gamers and CFDers and stuff? No, because we’re going to go off and do other things. Obviously, gaming doesn’t risk anybody’s life, but when I’m talking about two cars approaching an intersection, they have to know exactly what each other is going to do. That’s my two cents worth.

Brueckner: Are there any HPC trends that make you particularly excited or concerned?

Ball: Well, yes, I’m excited from the standpoint of the previous question. I admitted that I wasn’t maybe the best person to ask, but you know what, that’s what makes it exciting, because you don’t really know what it is. It’s that part of the journey that’s going to be exciting. Something is liable to pop up that nobody ever thought about.

One of the things that rarely gets talked about: a friend of mine who worked at NASA, her husband was a dentist. He had some health issues and he could no longer really practice dentistry. So, he took CFD and he took the modelling and the visualization, and he started processing X-rays and that kind of stuff and figuring out how to use the HPC software, and he applied it to the dental industry, and that was 35 years ago. We rarely, if ever, talk about that kind of thing.

So, autonomous mobility is obviously coming, and I don’t think it’s that big a stretch to say that that’s going to be a driver. Whether it’s going to be the 500-pound gorilla in the room, who knows? But again, the thing about that one is that there is also the big risk question.

Brueckner: Anything else you want to bring up here that we didn’t touch on yet today?

Ball: No, other than when you look back 45 years ago at what we could do then versus what we can do now, it’s really quite amazing. The one disappointment that I do have is that there is still an awful lot in the area of CFD to do. I realize that HPC is more than CFD, I get that, but in the area of CFD, where we have really plateaued because of the physical models, we’re going to have to wait for some other quantum leap before we can bring in direct numerical simulation and that kind of thing, where the models are enormous compared to even today’s really big ones, which are enormous compared to what they were 20 years before. As to whether qubits are going to play a role in the future, I don’t know enough about that one to speculate, but there are certainly a lot of people spending a lot of effort on it.

Brueckner: Thanks. Doug, I really appreciate you coming on. Stay safe down there in Arizona.

About Hyperion Research, LLC

Hyperion Research provides data-driven research, analysis and recommendations for technologies, applications, and markets in high performance computing and emerging technology areas to help organizations worldwide make effective decisions and seize growth opportunities. Research includes market sizing and forecasting, share tracking, segmentation, technology and related trend analysis, and both user & vendor analysis for multi-user technical server technology used for HPC and HPDA (high performance data analysis). We provide thought leadership and practical guidance for users, vendors and other members of the HPC community by focusing on key market and technology trends across government, industry, commerce, and academia.