Navin Shenoy, EVP, Intel Data Platforms Group, with Intel 3rd Gen Xeon chip

In its cage-match struggle with AMD for data center (and HPC) CPU market superiority, Intel responded this morning to its rival’s vaunted EPYC 7003 Series CPUs, announced two weeks ago, with its 3rd Gen Intel Xeon Scalable data center chips, code named “Ice Lake.” And the early response from a small sampling of industry analysts is that Ice Lake is more competitive than might have been expected.

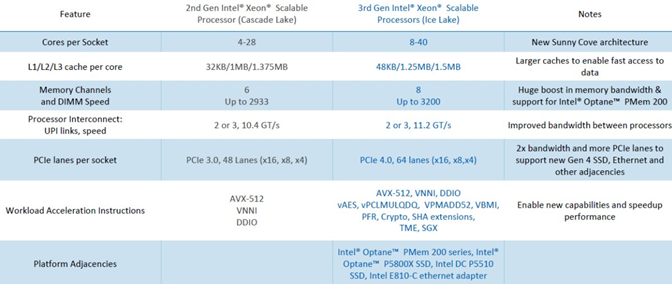

Built on a 10nm process, Ice Lake delivers a 46 percent performance increase “on popular data center workloads” compared with 2nd Gen Xeon, according to Intel. It offers up to 40 cores per processor and up to 2.65 times higher average performance compared with test results from 2017, the company said. The platform supports up to 6 terabytes of system memory per socket, up to eight channels of DDR4-3200 memory per socket and up to 64 lanes of PCIe Gen4 per socket.

The processors offer features such as Intel SGX for data security, Intel Crypto Acceleration, and Intel DL Boost for AI inference acceleration. Combined with Intel Select and Market Ready Solutions, Ice Lake is designed to “enable customers to accelerate deployments across cloud, AI, enterprise, HPC, networking, security and edge applications,” the company said. The 3rd Gen Xeon platform also includes Intel Optane persistent memory 200 series, the Intel Optane SSD P5800X and the Intel SSD D5-P5316 NAND SSD, as well as Intel Ethernet 800 Series Network Adapters and the latest version of Intel Agilex FPGAs.

On the AI front, Intel claimed its latest hardware and software optimizations deliver 74 percent faster AI performance than the prior Xeon generation, up to 1.5 times higher performance on 20 popular AI workloads versus the 3rd Gen AMD EPYC 7763, and up to 1.3 times higher performance on a broad mix of 20 popular AI workloads versus the Nvidia A100 GPU.

It’s the totality of Intel’s 3rd Gen offering that favorably impresses industry watcher Karl Freund, even if AMD has seized the price/performance lead in data center CPUs.

“Intel has demonstrated the company’s broad spectrum of technology prowess and leadership in this announcement, from CPUs to memory to encryption and networking,” said Freund, founder and principal analyst at Cambrian AI. “AMD still enjoys hard-earned leadership in many CPU metrics, including scalar performance per core and per socket. But on other features, such as AI performance, Intel clearly has the lead. In particular, AMD still must catch up on vector performance and AI inference, where AVX-512 and DL Boost overcome AMD’s leadership in manufacturing at TSMC.”

On Intel’s head-to-head battle with AMD, Freund added, “I think Intel has done an excellent job of reminding us all that comparing CPUs is a complex undertaking, that specific features rise to importance for specific workloads. So, yes, taken as a whole, Ice Lake is surprisingly strong.”

Steve Conway, senior adviser, HPC Market Dynamics at Hyperion Research, said he is impressed with how Ice Lake extends the data center CPU’s reach from its traditional compute-centric stronghold to also become more data-centric.

“I think the most important isolated benefit [of Ice Lake] is that it’s designed to support both established and emerging HPC markets,” Conway said. “That really had to happen, and that’s a general direction for all processor makers, as HPC has gotten over the decades extremely compute-centric and relatively data unfriendly. [Ice Lake] is another advance in the direction of supporting data better with on-chip memory and data movement. That’s going to be very important for markets like AI, cloud, enterprise and edge computing.”

This relates to what Conway sees as an Ice Lake AI strength: the processor’s inferencing capabilities across both simulation and data analytics workloads.

“People often associate AI in kind of a knee-jerk way only with analytics,” he said. “But simulation is incredibly important for AI. There’s data-intensive simulation just as there is data-intensive analytics. Processors like the Ice Lake family are clearly aimed at supporting both data-intensive simulation and data-intensive [analytics], and most of the really economically important AI use cases that need HPC need concurrent simulation and analytics in the same workflow. So that’s where Ice Lake fits: it’s developed not only for where HPC is but for where HPC is going.”

Dan Olds, chief research officer at analyst firm Intersect360 Research, had a similar response. Because Intel’s latest desktop chips were generally regarded as falling short of expectations, he had anticipated something of a letdown from Ice Lake. Instead, he pointed to the nearly 50 percent throughput increase over 2nd Gen Xeons (released in 2019) and cited Intel’s claimed Ice Lake speedups for HPC workloads over that generation.

“Intel claiming that they have 1.57x greater performance [over 2nd Gen Xeon] on typical HPC applications puts them, if true, right back into contention with AMD Milan to win the hearts and minds of HPC users.”

He also cited Ice Lake platform features that compare favorably with Milan.

“For memory per socket to have 6 terabytes with this processor versus 4 terabytes for EPYC, that might make a difference to some customers,” he said. “And the read latency for remote sockets in nanoseconds is 191 for AMD and 139 for Ice Lake, and that might make a difference on read-intensive type workloads. I’m impressed.”