This is a round-up of some of NVIDIA’s announcements today at the opening of the GTC conference.

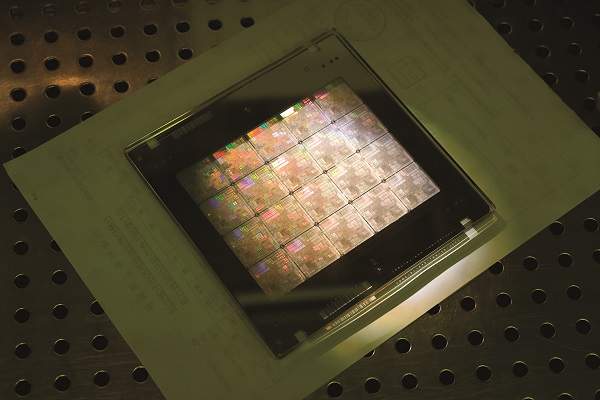

NVIDIA Lithography Software Adopted by ASML, TSMC and Synopsys

NVIDIA cuLitho

NVIDIA today announced what it said is a breakthrough that brings accelerated computing to the field of computational lithography that will set the foundation for 2nm chips “just as current production processes are nearing the limits of what physics makes possible,” NVIDIA said.

Running on GPUs, cuLitho delivers a performance boost of up to 40x beyond current lithography — the process of creating patterns on a silicon wafer — accelerating the massive computational workloads that currently consume tens of billions of CPU hours every year. NVIDIA said it enables 500 NVIDIA DGX H100 systems to achieve the work of 40,000 CPU systems, running all parts of the computational lithography process in parallel, helping reduce power needs and potential environmental impact.

The NVIDIA cuLitho software library for computational lithography is being integrated by chip foundry TSMC and electronic design automation company Synopsys into their software, manufacturing processes and systems for the latest-generation NVIDIA Hopper architecture GPUs. Equipment maker ASML is working with NVIDIA on GPUs and cuLitho, and is planning to integrate support for GPUs into its computational lithography software products.

The advance will enable chips with tinier transistors and wires than is now achievable, while accelerating time to market and boosting energy efficiency of data centers to drive the manufacturing process, NVIDIA said.

“The chip industry is the foundation of nearly every other industry in the world,” said Jensen Huang, founder and CEO of NVIDIA. “With lithography at the limits of physics, NVIDIA’s introduction of cuLitho and collaboration with our partners TSMC, ASML and Synopsys allows fabs to increase throughput, reduce their carbon footprint and set the foundation for 2nm and beyond.”

NVIDIA said fabs using cuLitho could help produce each day 3-5x more photomasks — the templates for a chip’s design — using 9x less power than current configurations. A photomask that required two weeks can now be processed overnight.

Longer term, cuLitho will enable better design rules, higher density, higher yields and AI-powered lithography, the company said.

The full announcement can be found here.

DGX Cloud

![]() NVIDIA announced NVIDIA DGX Cloud, an AI supercomputing service designed to give enterprises access to the infrastructure and software needed to train advanced models for generative AI and other applications.

NVIDIA announced NVIDIA DGX Cloud, an AI supercomputing service designed to give enterprises access to the infrastructure and software needed to train advanced models for generative AI and other applications.

The offering provides dedicated clusters of DGX AI supercomputing paired with NVIDIA AI software. The service is accessed using a web browser and is designed to avoid the complexity of deploying an on-premises infrastructure. Enterprises rent DGX Cloud clusters on a monthly basis, enabling rapid deployment scaling, NVIDIA said.

“We are at the iPhone moment of AI. Startups are racing to build disruptive products and business models, and incumbents are looking to respond,” said Huang. “DGX Cloud gives customers instant access to NVIDIA AI supercomputing in global-scale clouds.”

NVIDIA said it is partnering with cloud service providers to host DGX Cloud infrastructures, starting with Oracle Cloud Infrastructure. The OCI Supercluster provides a RDMA network, bare-metal compute and high-performance local and block storage that can scale to over 32,000 GPUs.

Microsoft Azure is expected to begin hosting DGX Cloud next quarter, followed by Google Cloud and others.

More information can be found here.

NVIDIA H100s on Public Cloud Platforms

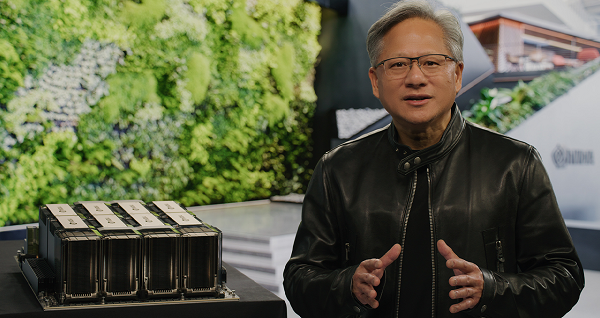

Jensen Huang with DGX H100 at GTC 2023

NVIDIA and partners today announced the availability of new products and services featuring the NVIDIA H100 Tensor Core GPU for generative AI training and inference.

Oracle Cloud Infrastructure (OCI) announced the limited availability of new OCI Compute bare-metal GPU instances featuring H100 GPUs. Additionally, Amazon Web Services announced its forthcoming EC2 UltraClusters of Amazon EC2 P5 instances which can scale in size up to 20,000 interconnected H100 GPUs. This follows Microsoft Azure’s private preview announcement last week for its H100 virtual machine, ND H100 v5.

Additionally, Meta has now deployed its H100-powered Grand Teton AI supercomputer internally for its AI production and research teams.

“Generative AI’s incredible potential is inspiring virtually every industry to reimagine its business strategies and the technology required to achieve them,” said Huang. “NVIDIA and our partners are moving fast to provide the world’s most powerful AI computing platform to those building applications that will fundamentally transform how we live, work and play.”

The H100, based on the NVIDIA Hopper GPU computing architecture with its built-in Transformer Engine, is optimized for developing, training and deploying generative AI, large language models (LLMs) and recommender systems. This technology makes use of the H100’s FP8 precision and offers 9x faster AI training and up to 30x faster AI inference on LLMs versus the prior-generation A100, NVIDIA said. The H100 began shipping in the fall in individual and select board units from global manufacturers.

Several generative AI companies are adopting H100 GPUs, including ChatGPT creator OpenAI, Meta in the development of its Grand Teton AI supercomputer and Stability.ai, the generative AI company.

More information can be found here.

NVIDIA and AWS for Generative AI

Amazon Web Services and NVIDIA will collaborate on a scalable AI infrastructure for training large language models (LLMs) and developing generative AI applications.

Amazon Web Services and NVIDIA will collaborate on a scalable AI infrastructure for training large language models (LLMs) and developing generative AI applications.

The project involves Amazon EC2 P5 instances powered by NVIDIA H100 Tensor Core GPUs and AWS networking and scalability that the companies say will deliver up to 20 exaFLOPS of compute performance. They said P5 instances will be the first GPU-based instance to be combined with AWS’s second-generation Elastic Fabric Adapter networking, which provides 3,200 Gbps of low-latency, high bandwidth networking throughput, enabling customers to scale up to 20,000 H100 GPUs in EC2 UltraClusters.

P5 instances feature eight NVIDIA H100 GPUs capable of 16 petaFLOPs of mixed-precision performance, 640 GB of high-bandwidth memory, and 3,200 Gbps networking connectivity (8x more than the previous generation) in a single EC2 instance. P5 instances accelerates the time-to-train machine learning (ML) models by up to 6x, and the additional GPU memory helps customers train larger, more complex models, the companies said, adding that P5 instances are expected to lower the cost to train ML models by up to 40 percent over the previous generation, according to NVIDIA.

BioNeMo Generative AI Services for Life Sciences

NVIDIA announced a set of generative AI cloud services designed for customizing AI foundation models to accelerate creation of new proteins and therapeutics, as well as research in genomics, chemistry, biology and molecular dynamics.

NVIDIA announced a set of generative AI cloud services designed for customizing AI foundation models to accelerate creation of new proteins and therapeutics, as well as research in genomics, chemistry, biology and molecular dynamics.

Part of NVIDIA AI Foundations, the BioNeMo Cloud service offering — for both AI model training and inference — accelerates the time-consuming and costly stages of drug discovery, NVIDIA said. It enables researchers to fine-tune generative AI applications on their proprietary data, and to run AI model inference directly in a web browser or through new cloud APIs that integrate into existing applications.

“The transformative power of generative AI holds enormous promise for the life science and pharmaceutical industries,” said Kimberly Powell, vice president of healthcare at NVIDIA. “NVIDIA’s long collaboration with pioneers in the field has led to the development of BioNeMo Cloud Service, which is already serving as an AI drug discovery laboratory. It provides pretrained models and allows customization of models with proprietary data that serve every stage of the drug-discovery pipeline, helping researchers identify the right target, design molecules and proteins, and predict their interactions in the body to develop the best drug candidate.”

BioNeMo now has six open-source models, in addition to its previously announced MegaMolBART generative chemistry model, ESM1nv protein language model and OpenFold protein structure prediction model. The pretrained AI models are designed to help researchers build AI pipelines for drug development.

Generative AI models can identify potential drug molecules — in some cases designing compounds or protein-based therapeutics from scratch. Trained on large-scale datasets of small molecules, proteins, DNA and RNA sequences, these models can predict the 3D structure of a protein and how well a molecule will dock with a target protein.

BioNeMo has been adopted by drug-discovery companies including Evozyne and Insilico Medicine, along with biotechnology company Amgen.

“BioNeMo is dramatically accelerating our approach to biologics discovery,” said Peter Grandsard, executive director of Biologics Therapeutic Discovery, Center for Research Acceleration by Digital Innovation at Amgen. “With it, we can pretrain large language models for molecular biology on Amgen’s proprietary data, enabling us to explore and develop therapeutic proteins for the next generation of medicine that will help patients.”

More information can be found here.

culitho and omniverse were the most exciting topics in the keynote