Sept. 26, 2023 — Nuclear energy is responsible for approximately one-fifth of total electricity used in the U.S., and nearly half of the country’s carbon-free electricity. Most of the reactors quietly, safely and efficiently generating this electricity were built decades ago. The construction of new nuclear reactors that use advanced technologies and processes could help grow the amount of carbon-free electricity the nuclear power industry produces and help the U.S. progress toward a net-zero economy.

Building a new nuclear reactor, however, takes time and begins with rigorous computer simulations.

Researchers at Argonne National Laboratory are preparing to use Aurora, the laboratory’s upcoming exascale supercomputer, to delve into the inner mechanics of a variety of nuclear reactor models. These simulations promise an unprecedented level of detail, offering insights that could revolutionize reactor design by improving understanding of the intricate heat flows within nuclear fuel rods. This could lead to substantial cost savings while still generating electricity safely.

“What’s really new with Aurora are both the scale of the simulations we’re going to be able to do as well as the number of simulations,” said Argonne nuclear engineer Dillon Shaver. “In working on Polaris — Argonne’s current pre-exascale supercomputer — and Aurora, these very large-scale simulations are becoming commonplace for us, which is quite exciting.”

The simulations that Shaver and his colleagues are working on look at large sections of the nuclear reactor core with what he terms “high fidelity.” In practice, a single simulation contains tens of millions of discrete elements and billions of unknowns, and the physics in each element must be calculated and resolved directly rather than approximated. That’s where the supercomputers at the Argonne Leadership Computing Facility come into play.
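To get a feel for how tens of millions of elements turn into billions of unknowns, the following back-of-the-envelope sketch counts grid values for a spectral element mesh, the kind of discretization NekRS uses. The element count, polynomial order and number of fields are illustrative assumptions, not figures from Argonne's simulations, and the count ignores the fact that neighboring elements share points on their faces.

```python
def count_unknowns(num_elements: int, poly_order: int, fields_per_point: int) -> int:
    """Rough count of unknowns in a hexahedral spectral element mesh.

    Each element of polynomial order p carries (p + 1)**3 grid points,
    and every point stores one value per solved field.
    Shared face points are counted once per element, so this overestimates.
    """
    points_per_element = (poly_order + 1) ** 3
    return num_elements * points_per_element * fields_per_point


# Hypothetical case: 20 million elements, order 7,
# five fields (three velocity components, pressure, temperature).
total = count_unknowns(num_elements=20_000_000, poly_order=7, fields_per_point=5)
print(f"{total / 1e9:.1f} billion unknowns")
```

Under these assumptions the count comes to about 51 billion values that must be updated at every time step, which is why the work is spread across thousands of GPUs.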

“Fidelity means the amount of detail you can capture,” Shaver said. “We can simulate an entire core, but to do so at high fidelity really requires an exascale machine to compute all the physics on the finest length scales.”

The ultimate goal of these simulations is to give companies that seek to build commercial reactors a greater ability to validate and license their designs, Shaver explained. “Reactor vendors are looking to us to do these high-fidelity simulations in lieu of expensive experiments, with the hope that as we move to a new era in computing, they will be able to have data that strongly backs up their proposals,” he said.

According to Shaver, existing models have been well-benchmarked for the majority of reactors currently operating in the U.S. As new reactor concepts undergo further development and testing, the general physics should remain similar, but scientists still need to simulate them in many different environments. “From the realms of mechanical engineering, chemical science, and fluid dynamics, everything tells us that these reactors should behave in some predictable ways,” Shaver said. “But we still have to make sure.”

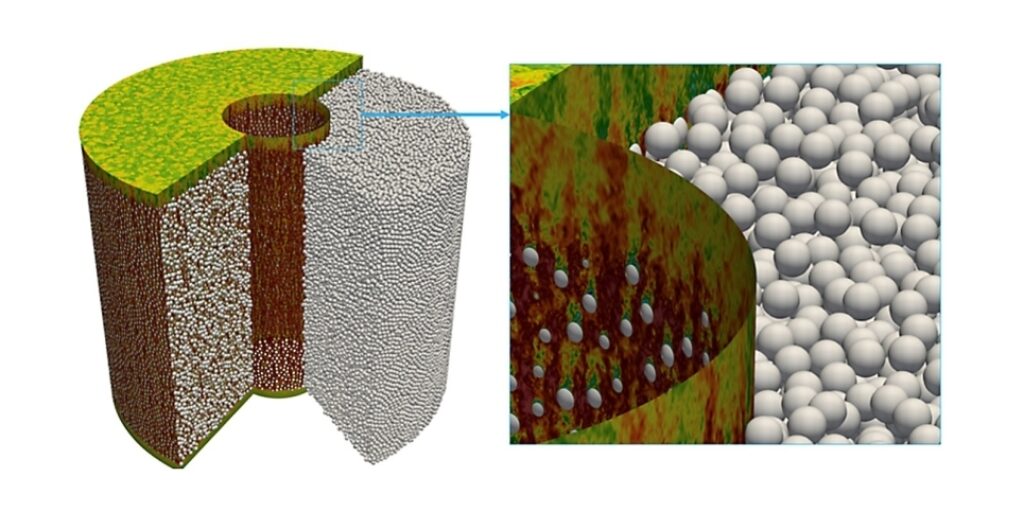

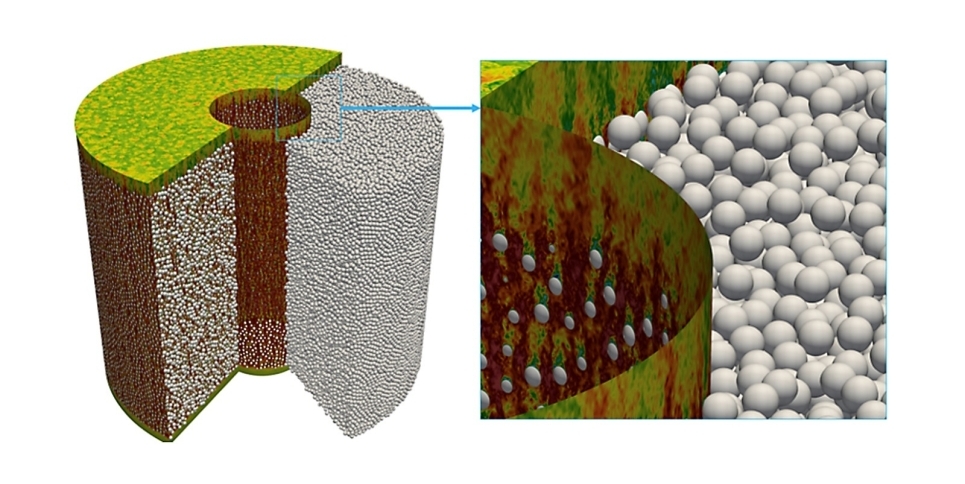

A near-exascale simulation of fluid velocity in a bed of 352,000 randomly packed pebbles in a nuclear reactor. Credit: Argonne National Laboratory

By simulating the turbulence in the reactor — the whirls and eddies of heat that circulate around the fuel pins — researchers like Shaver can effectively model the reactor’s heat transfer properties. “Generally, and to an extent, the more turbulence you have, the more heat transfer you have,” Shaver said. “However, to get more turbulence, you have to pump your reactor harder and faster, which requires more energy.”
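The trade-off Shaver describes can be illustrated with two textbook correlations for turbulent flow in a smooth pipe. This is only a sketch: the Dittus-Boelter and Blasius correlations used here are stand-ins chosen for simplicity, they do not describe rod bundles or liquid-metal coolants well, and they are not the models used in the Argonne simulations.

```python
def heat_transfer_gain(speedup: float) -> float:
    """Relative heat transfer coefficient when coolant velocity is scaled.

    Dittus-Boelter: Nu = 0.023 * Re**0.8 * Pr**n, so with fixed fluid
    properties the heat transfer coefficient scales as velocity**0.8.
    """
    return speedup ** 0.8


def pumping_power_gain(speedup: float) -> float:
    """Relative pumping power when coolant velocity is scaled.

    Blasius: friction factor f ~ Re**-0.25, and pressure drop ~ f * v**2,
    so pressure drop scales as v**1.75. Pumping power is pressure drop
    times volumetric flow rate (~ v), giving velocity**2.75.
    """
    return speedup ** 2.75


for speedup in (1.5, 2.0):
    print(f"velocity x{speedup}: "
          f"heat transfer x{heat_transfer_gain(speedup):.2f}, "
          f"pumping power x{pumping_power_gain(speedup):.2f}")
```

Under these assumptions, doubling the coolant velocity raises heat transfer by roughly 1.7 times but raises pumping power by roughly 6.7 times. High-fidelity simulation is what replaces such rough correlations for geometries where they do not hold.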

For sodium-cooled fast reactors, a type of advanced reactor that Argonne has been investigating for decades, the turbulence can cause the formation of vortices, which are essentially little heat whirlpools that can compound on one another. Too many of these vortices in the flow can cause a “flow-induced vibration” of the reactor pins themselves. “You have to make sure that your reactor core and your materials are going to stand up to any vibrations that are present, and that’s what we call a multiphysics problem, because you need to couple the fluid dynamics of the flow to the structural mechanics of the reactor,” Shaver said.
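A toy version of this fluid-structure coupling is sketched below. A single pin is modeled as a mass on a spring, and a stand-in "fluid solver" returns a periodic vortex load that also depends on how fast the pin is moving. Every name and parameter here is hypothetical, and a real coupled run would exchange full pressure and displacement fields between solvers rather than one number per step.

```python
import math


def fluid_force(t, pin_velocity, forcing_hz, f0=1.0, fluid_damping=0.5):
    """Toy fluid solver: periodic vortex load minus drag on the moving pin."""
    return f0 * math.sin(2.0 * math.pi * forcing_hz * t) - fluid_damping * pin_velocity


def peak_displacement(forcing_hz, natural_hz=10.0, mass=1.0, zeta=0.02,
                      dt=5e-4, t_end=5.0):
    """Advance the coupled system and return the largest pin deflection."""
    omega_n = 2.0 * math.pi * natural_hz
    stiffness = mass * omega_n ** 2
    damping = 2.0 * zeta * mass * omega_n
    x, v, peak = 0.0, 0.0, 0.0
    for step in range(int(t_end / dt)):
        t = step * dt
        # Fluid step: the load depends on the current structural state.
        force = fluid_force(t, v, forcing_hz)
        # Structure step: semi-implicit Euler update of the pin.
        accel = (force - damping * v - stiffness * x) / mass
        v += accel * dt
        x += v * dt
        peak = max(peak, abs(x))
    return peak


off_resonance = peak_displacement(forcing_hz=5.0)
at_resonance = peak_displacement(forcing_hz=10.0)
print(f"amplification near resonance: {at_resonance / off_resonance:.1f}x")
```

When the loading frequency matches the pin's natural frequency, the deflection grows many times larger than it does off resonance, which is the kind of behavior designers need to rule out.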

Multiphysics is pretty much the keyword when it comes to exascale simulations, Shaver explained. “Heat transfer, fuel performance, fluid dynamics, structural mechanics — we’re working more and more toward coupling all of our tools together as tightly as we can to figure out these multiphysics questions.”

The basic code underpinning the multiphysics simulations is the Multiphysics Object-Oriented Simulation Environment, better known as MOOSE, which makes modeling and simulation more accessible to a wide range of scientists. Developed by the U.S. Department of Energy’s (DOE) Idaho National Laboratory, MOOSE enables simulations to be developed in a fraction of the time previously required. The tool has revolutionized predictive modeling in nuclear engineering, where it allows nuclear fuels and materials scientists to develop numerous applications that predict the behavior of fuels and materials.

According to Shaver, the design of Aurora will allow scientists to run MOOSE and NekRS, a computational fluid dynamics solver, simultaneously, taking advantage of the computer’s mixed CPU/GPU nodes.

Being able to resolve all the little details in the flow in a reactor can make an enormous difference when it comes to designing either next-generation light water reactors or new reactor designs, such as those cooled by sodium or molten salt. “These small-scale dynamics are really important to tease out because they compound together to give you the large-scale behavior of the heat transport in the reactor,” Shaver said. “We have to get as fundamental as we can to make sure we get the best answers that we can.”

Source: Jared Sagoff, Argonne