In this special guest feature, John Kirkley writes that Argonne is already preparing code for its future Theta and Aurora supercomputers, which are based on Intel Xeon Phi processors (code-named Knights Landing and Knights Hill, respectively).

Last year, when Intel and the U.S. Department of Energy (DOE) announced the $200 million supercomputing investment at Argonne National Laboratory, preparations were already underway at the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science User Facility located at Argonne.

One of the ALCF’s primary tasks is to help prepare key applications for two advanced supercomputers. The first is the 8.5-petaflops Theta system, which is based on the upcoming Intel® Xeon Phi™ processor code-named Knights Landing (KNL) and is due for deployment this year. The second is the larger 180-petaflops Aurora supercomputer, scheduled for 2018 and built on Intel Xeon Phi processors code-named Knights Hill. A key goal is to solidify libraries and other essential elements, such as compilers and debuggers, that support the systems’ current and future production applications.

This is a complex undertaking, but the scientists and engineers at the ALCF have been down this road before. The template was established in 2010 under the Early Science Program (ESP), a joint effort of ALCF staff, IBM and user community scientists to adapt application software to take advantage of the Mira Blue Gene/Q architecture. The applications of some 16 ESP science projects, representing a diverse range of scientific fields, numerical methods, programming models and computational approaches, were modified to run on Mira in its pre-production mode.

“The ESPs involving Theta and Aurora are using the same protocols as the Mira effort,” says Tim Williams, ALCF computational scientist and the manager of the Theta ESP. “We have six science projects that were accepted for the Theta ESP on the basis of computational readiness and leading-edge science, and the program has been ongoing for about a year. The projects are using whatever tools are available – such as other systems, early versions of the Theta hardware and simulators – to prepare their applications for the new, advanced architecture that features nodes based on KNL. Our Theta system vendors play vital roles in these efforts, for example by instructing the ESP project teams on Intel hardware details and software development tools, and by participating in hands-on deep dives to port and tune application codes.”

“Not only did our ESP for Mira help the system hit the ground running on day one,” says Williams, “it also produced invaluable lessons learned and some very interesting new science, including the first accurately computed values for the bulk properties of solid argon, a noble gas element. We’re looking forward to seeing what the new ESP projects can do with Theta’s leading-edge architecture.”

Williams adds that once the Theta system has been accepted later this year, there will be a special pre-production window called the Early Science period, lasting about three months, during which the system will be dedicated to the ESP. The scientists will have their parallelized and vectorized codes ready so they can begin running their scientific calculations during this window.

“A pre-production allocation of compute time on the new system to conduct leadership-scale computational research – that’s the carrot for a scientist to participate in the program,” he says. “ALCF benefits in a number of ways, including preparing applications to be strong candidates for production projects on the system. In addition, because a good number of developers will be working with the Theta system software and libraries, we will be able to prep the system for the rest of the production users. This includes finding and fixing bugs and learning the ins and outs of the system. One of our major challenges will be to understand and make the best use of the new memory hierarchy that will be incorporated in both Theta and Aurora. We’ll also be investigating how to use node-local storage, another new feature of Theta. And we will take advantage of this period to train our own staff and future computational scientists through our postdoc program.”

One of the deliverables will be technical reports on the computational aspects of each ESP project. The reports will cover such topics as how the team refactored the application to run on the new architectures, the issues encountered during the process and how they were solved. A workshop including presentations from each of the ESP projects will be held to discuss what they learned and contribute to the education of the ALCF’s larger user community.

Williams says the basic challenge facing everyone working on these next-generation systems is dealing with a high degree of parallelism. For example, Knights Hill processors will provide more than 60 cores per node, as compared to the 16 cores the ALCF user community is used to working with on the current Blue Gene/Q system.

Pushing the scaling out to 60 cores with four threads each is a major jump. At the very least, this will call for tuning application code to take full advantage of the fine-grained parallelism provided by these next-generation machines. Williams notes that although a number of ALCF applications will have a straightforward transition path to the new systems, some applications running on Mira may have to undergo extensive modification to scale up to the new, higher levels of on-node parallelism.

For the ALCF, one of the major benefits of ESP is to establish a structured program that allows the lab’s computational scientists to explicitly apply their expertise in code porting and code modification to prepare for these new architectures.

“Many of the scientific and engineering applications in the ESP are used not just at ALCF, but on many computer systems by large communities of users,” says Williams. “Having community applications optimized for the latest system architectures benefits that wider community.”

Each project has an ALCF postdoc assigned on a full-time basis. Both the postdocs and the users in the universities and research labs where these projects originated will be receiving intensive on-the-job training in using these next-generation systems. Postdocs in particular can look forward to enhanced career opportunities as a result of their involvement in the program and their intimate association with leading-edge technology.

Williams adds, “Many of the ESP users are also current users of ALCF production systems. We are trying to get a reasonable sample of applications from our current production workload into the ESP, as well as allowing some new applications.”

“ESP provides us with a rigorous mechanism to shake down these systems to flush out problems and prepare software, such as libraries and compilers that will be used for a wide range of scientific applications long after the ESP is completed,” Williams concludes (see sidebar: Building a Solid Foundation). “We will be able to harden the software to allow next generations of users to investigate scientific problems that were beyond their reach until now.”

Building a Solid Foundation

Kalyan Kumaran and his application performance team at the ALCF are playing a key role supporting the latest ESP projects. They work closely with ALCF projects to optimize and scale applications on current and emerging systems.

The team also collaborates with the vendors and communities behind performance tools, math libraries, programming models and debuggers to ensure that these are available, and perform well, on ALCF systems, including Theta and Aurora.

“Once the system has been accepted,” Kumaran explains, “we don’t immediately open it for production. Instead, over a period of four to six months, the early science projects will be running on the machines at scale.”

The feedback from the ESP projects during the pre-production time is beneficial to the ALCF as it provides an opportunity to optimize the machine based on real science runs. It is also during this time that a large portion of user documentation is developed and lessons learned are captured.

“During that period, we make sure that the researchers have access to all the software libraries they need, ported and ready to run,” Kumaran adds.

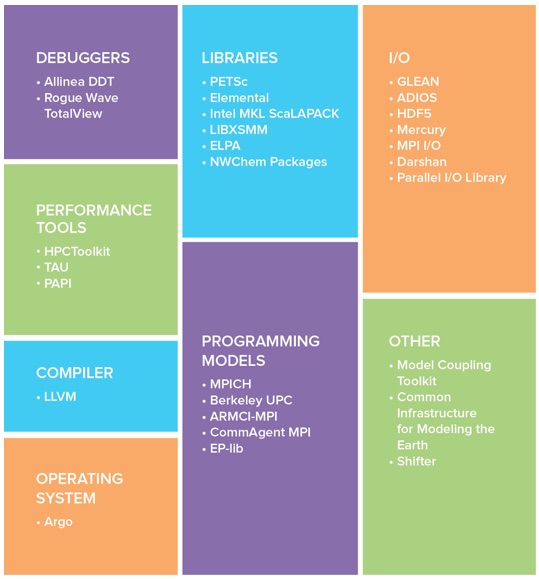

This includes other tools such as performance analysis, and debuggers (see the chart below).

Porting for Performance: The software & programming environment project to enable high-performance computational science

“With the new system comes new technology that can help scientists who may have run into restrictions on the previous platform,” Kumaran says. “At the same time, we want to make sure that the software from our previous platform that our users depended upon is still available on the new system.”

The goal of all this intensive work is to provide unprecedented computing power and optimized software to users from academia, research and industry so they can address grand challenges.