In this special guest feature, Nick Nystrom from the Pittsburgh Supercomputing Center writes that the new Bridges supercomputer at PSC is tailor-made for High Performance Data Analytics.

Nick Nystrom, Senior Director of Research at PSC

The Pittsburgh Supercomputing Center (PSC) recently added Bridges to its lineup of world-class supercomputers. Bridges is designed for uniquely flexible, interoperating capabilities to empower research communities that previously have not used HPC and enable new data-driven insights. It also provides exceptional performance to traditional HPC users. It converges the best of High Performance Computing (HPC), High Performance Data Analytics (HPDA), machine learning, visualization, Web services, and community gateways in a single architecture.

The acquisition was funded by a $9.65 million National Science Foundation (NSF) award, with the design target of enabling analyses of vast data sets and allowing researchers to easily take advantage of advanced computing resources, among other goals. Bridges was built by Hewlett Packard Enterprise (HPE) with Intel® Xeon® processors, and it incorporates the first installation of Intel’s new HPC fabric, Intel® Omni-Path Architecture (Intel® OPA). Both the processors and the fabric are key components of Intel® Scalable System Framework.

Building Bridges to the Future

Bridges began with initial hardware in December 2015, followed by installation of the full Phase 1 system in February of this year. Both Intel and HPE engineers worked on bringing up the machine. It took them less than three days to have an operational system ready to begin acceptance testing.

Bridges came up just before the MIDAS MISSION Public Health Hackathon that took place at PSC on February 25 and 26. The Hackathon gathered teams of expert programmers who ran and benchmarked novel visualization applications they had created for public health uses, based on a large set of publicly available health databases. The Hackathon was Bridges’ first run of real-world applications. It was also the first chance to watch how Intel OPA performed with these workloads.

My colleague, Shawn Brown, Director of Public Health Applications at PSC, felt it was “important for the organizers of the MIDAS MISSION Public Health Hackathon to make available Intel Omni-Path to our participants so that they had the best technology on the market at their disposal to create top notch visualization applications.”

Jay DePasse, a Hackathon participant and member of Team vzt, said, “The MIDAS MISSION Public Health Hackathon was a great experience, and having access to resources like Bridges and its extremely high-speed Intel Omni-Path network was very helpful in producing data driven visualizations.”

First Place Entry: SPEW VIEW, created by Shannon Gallagher and Lee Richardson at Carnegie Mellon University. Full application available at http://stat.cmu.edu:3838/sgallagh/hackathon/

A new effort for Bridges begins now, with several groups being allocated time on the machine. Soon after, Bridges will be opened to all researchers who have allocations, allowing them to explore everything that Bridges was designed to do, from machine learning, large-scale data analytics, and gateways to traditional HPC science and engineering applications. One of the early groups will be running a machine learning framework that delivers much higher performance than Spark or Hadoop. For their framework and other applications, the Intel OPA interconnect in Bridges is expected to improve performance dramatically. We’ll be seeing a lot of exciting work and proving out the performance, usability, and reliability of Bridges and the fabric. For Bridges, this is only the beginning of a very interesting and exciting future designed for a wide range of workloads.

Converged Computing Enabled by Advanced Technologies

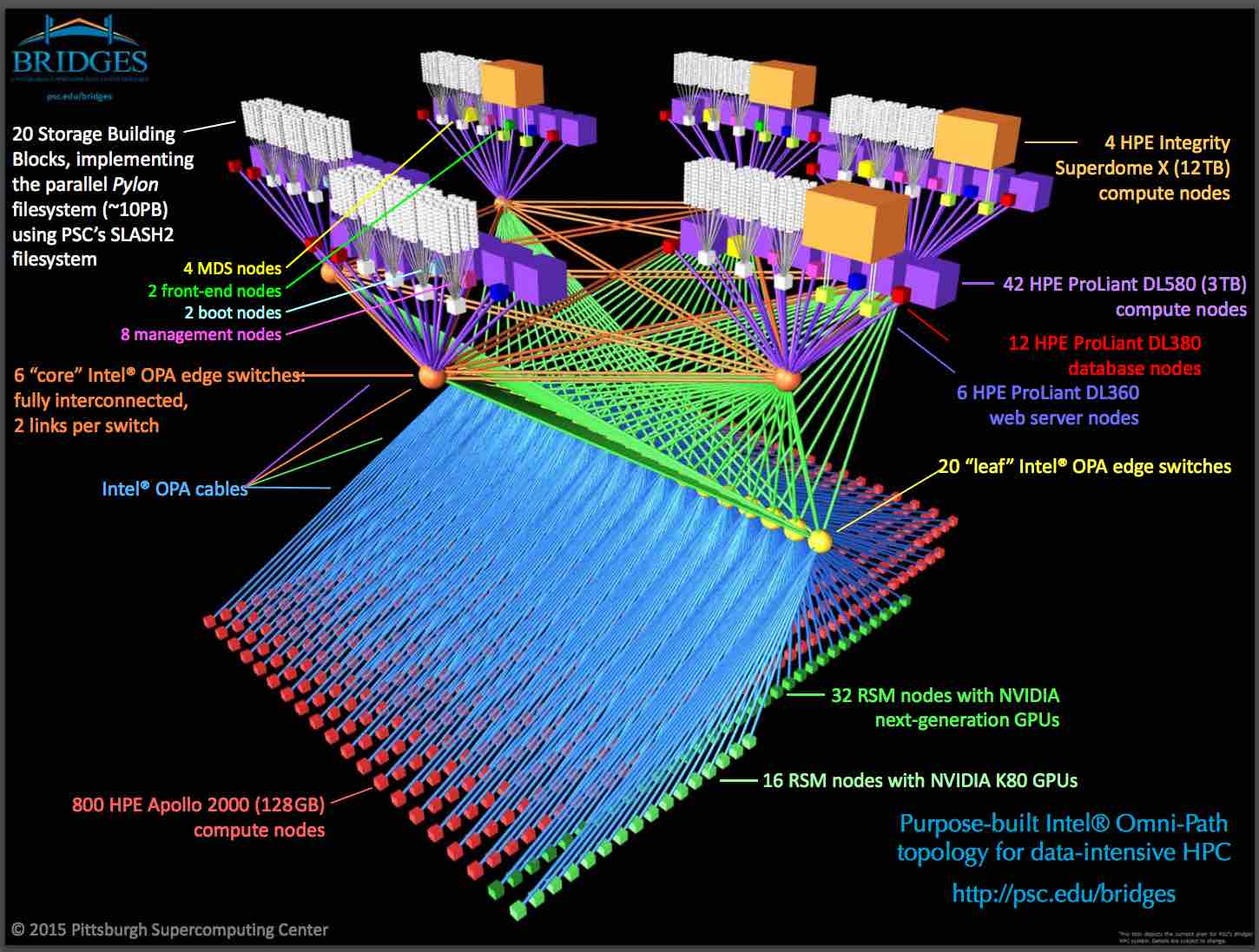

The multi-functional architecture of Bridges leverages a completely new fabric from Intel. The Intel OPA switches form an enhanced leaf-spine network layout that interconnects 846 advanced compute nodes: four large-memory HPE Integrity Superdome X servers with 12 TB of RAM each, 42 HPE ProLiant DL580 servers with 3 TB of RAM each, and 800 HPE Apollo 2000 servers with 128 GB of RAM each. PSC offers a virtual tour of Bridges to show how its heterogeneous architecture supports different kinds of applications.
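The node counts quoted above can be checked with a few lines of arithmetic. The sketch below is only an illustration: the dictionary layout and variable names are hypothetical, and the aggregate RAM figure assumes 1 TB = 1024 GB, a unit convention the article does not state.

```python
# Node inventory as quoted in the article: (node count, RAM per node in GB).
# Assumption: 1 TB = 1024 GB for the unit conversion.
GB_PER_TB = 1024

node_types = {
    "HPE Integrity Superdome X": (4, 12 * GB_PER_TB),
    "HPE ProLiant DL580": (42, 3 * GB_PER_TB),
    "HPE Apollo 2000": (800, 128),
}

# Sum the node counts and the RAM across all three node types.
total_nodes = sum(count for count, _ in node_types.values())
total_ram_gb = sum(count * ram_gb for count, ram_gb in node_types.values())
total_ram_tb = total_ram_gb / GB_PER_TB

print(f"nodes: {total_nodes}")
print(f"aggregate RAM: {total_ram_tb:.0f} TB")
```

Under that unit assumption, the three node types add up to the 846 nodes cited and roughly 274 TB of aggregate RAM.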

Performance, flexibility, and ease of use are core to Bridges’ design mandate. We have found that in many of the new research communities, the way to reach people and provide them computational facilities is to make resources available without requiring users to become HPC programmers. Many are comfortable with their desktop environments rather than Linux, and they’re using widespread tools such as R and Python rather than coding in C++ and MPI. Some are using Hadoop or Spark, and others are gaining benefit from containers. Some need high-performance resources for organizing information, maintaining provenance, driving gateways, and analytics. Additionally, others would like to couple applications expressed in different paradigms, leveraging some components developed for HPC and others for Hadoop or Spark, with optimized sharing of data between the components. We therefore designed Bridges to be able to simultaneously service a wide variety of computing needs by partitioning groups of nodes for different workloads, which entails providing very high IO bandwidth. Intel OPA was the best solution available to meet these converged computing and IO requirements.

A Performance Enabler

Intel OPA gives Bridges the performance it needs for the workloads that the system will run. The fabric delivers high bandwidth, extremely low latency, and very high injection rates. Our initial acceptance testing on the first set of nodes revealed an impressive 12.37 GB/s with latency at 930 nanoseconds, which is beyond our target levels. We very much appreciate the advanced features of the fabric architecture, such as Quality of Service (QoS), and we’re looking forward to exploring other aspects of the technology, including prioritization of messaging versus IO traffic.
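To put the measured bandwidth in context, it can be compared with the 100 Gbps rate cited for the fabric elsewhere in this article. The sketch below is a rough calculation, not part of PSC's acceptance tests. It assumes the 12.37 GB/s figure is a one-directional, per-link measurement and that 1 GB = 10^9 bytes; neither is stated in the article, and the variable names are illustrative.

```python
# Figures quoted in the article.
LINK_RATE_GBPS = 100        # Intel OPA rate, in gigabits per second
measured_gb_per_s = 12.37   # acceptance-test bandwidth, in gigabytes per second
latency_ns = 930            # acceptance-test latency, in nanoseconds

# Assumption: decimal units (1 GB = 10**9 bytes), 8 bits per byte.
line_rate_gb_per_s = LINK_RATE_GBPS / 8

# Fraction of the nominal link rate reached by the measurement.
efficiency = measured_gb_per_s / line_rate_gb_per_s
latency_us = latency_ns / 1000

print(f"nominal link rate: {line_rate_gb_per_s} GB/s")
print(f"fraction of nominal rate: {efficiency:.1%}")
print(f"latency: {latency_us} microseconds")
```

Under those assumptions, the measured bandwidth is within about one percent of the nominal link rate, and the latency is just under one microsecond.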

We’re already taking advantage of the graphical management interface that’s part of Intel OPA. The members of PSC’s system administration team are experienced “power users” for whom command-line tools are often the most powerful, but for the complicated fabric of Bridges, using command lines and text files would be inefficient and time consuming. OPA’s graphical management interface offers significant advantages and is working out very well for us.

Three Intel Omni-Path switches in a Bridges rack.

Intel OPA offers a 48-port switch for the fabric, which turned out to be a perfect fit for our goal of running tightly coupled applications on approximately 1,000 cores, a “sweet spot” for nontraditional communities and also for much of traditional HPC. The Intel OPA 48-port switch allowed us to interconnect islands of 42 nodes, each with 28 Intel Xeon processor cores, to stand up 1,176-core partitions, all tightly coupled and running at full bisection bandwidth across the 100 Gbps switch. Had there been only a 36-port switch, we would have had to build a much more complicated network to get full bisection bandwidth to 1,000 cores. We can also provide up to 22,400 cores (or around 27,000 cores, including Bridges’ large-memory nodes) for projects that require that level of computational resources and are not bisection-bandwidth bound. Intel OPA allowed us to achieve those complementary goals.
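The core counts in this section follow from simple arithmetic, sketched below as an illustration. The sketch assumes that each node occupies one switch port and that the 22,400-core figure refers to the 800 HPE Apollo 2000 nodes at 28 cores each. The article does not say how the ports left over on each switch are used, so the helper `spare_ports` (a hypothetical name) only counts them.

```python
import math

# Figures quoted in the article.
CORES_PER_NODE = 28
NODES_PER_ISLAND = 42
REGULAR_NODES = 800      # assumption: the Apollo 2000 nodes, 28 cores each
TARGET_CORES = 1000      # approximate "sweet spot" partition size

cores_per_island = NODES_PER_ISLAND * CORES_PER_NODE
regular_cores = REGULAR_NODES * CORES_PER_NODE

# Smallest number of 28-core nodes that reaches the 1,000-core target.
nodes_for_target = math.ceil(TARGET_CORES / CORES_PER_NODE)


def spare_ports(switch_ports: int, nodes: int) -> int:
    """Ports left on one switch after attaching `nodes` nodes, one port each."""
    return switch_ports - nodes


spare_on_48 = spare_ports(48, NODES_PER_ISLAND)
spare_on_36 = spare_ports(36, nodes_for_target)

print(f"cores per 42-node island: {cores_per_island}")
print(f"cores on regular nodes: {regular_cores}")
print(f"nodes needed for {TARGET_CORES} cores: {nodes_for_target}")
print(f"spare ports, 48-port switch: {spare_on_48}")
print(f"spare ports, 36-port switch: {spare_on_36}")
```

Under these assumptions, reaching 1,000 cores takes 36 nodes, which would fill every port of a 36-port switch. A 42-node island on a 48-port switch leaves six ports free.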

Bridges’ Analytics Focus

From our experience, the scarcest resource for advancing knowledge is often researchers’ time, so enhancing researchers’ productivity is extremely important. Two aspects of that are doing analytics with large, in-memory databases and supporting graph analytics for interactive workflows. Bridges includes four 12 TB nodes and 42 3 TB nodes for those purposes and others (for example, genome sequence assembly). These are connected very closely to persistent storage through Intel OPA edge switches to minimize latency between storage and compute nodes. Bridges’ topology creates a high-bandwidth, low-latency, cost-effective analytics engine.

Bridges provides users with unusually large amounts of RAM for in-memory database engines and analytics. This enables transparent acceleration of widely used, open-source databases such as MySQL, MongoDB, and Neo4j, and it gives true in-memory databases vast capacity without the overheads of distributed joins. Accessing those databases and incorporating them into workflows over a high-speed fabric yields complementary benefit. Hadoop users should also see speedups, especially in Hadoop’s time-consuming shuffle step, compared to what they’re used to seeing on lower-performing fabrics. Even more exciting is the opportunity to build applications that leverage existing components from both the HPC and Big Data software ecosystems, with all of those components efficiently accessing shared data.

With the ability to support high-performance data analytics (HPDA) and to bring together diverse, massive data collections, Bridges will enable new lines of research and interdisciplinary collaboration. For example, we expect projects that bring together high-variety data, such as text corpora, images, GIS, and socioeconomic data, and apply natural language processing, machine learning, and visualization to create intuitive analytical tools. By supporting the broad availability of such tools as “gateways” accessible through a web browser (effectively making them available, at no charge, through a software-as-a-service model), Bridges is a platform for democratizing access to HPDA and important datasets. Bridges’ fabric performance and topology, which couple its heterogeneous, large-memory HPC nodes and high-performance storage, are essential to realizing that vision. Community interest in Bridges is very high, and early results confirm Intel OPA’s value in making high-performance data analytics substantially more productive.

You can follow Bridges at http://psc.edu/bridges.