In this special guest feature, Sean Thielen writes that Intel is helping the European HPC community push forward with the DEEP project.

16 partners, 1 goal

To put it mildly, challenging a fundamental compute law is never going to be easy. Few European organizations or companies have all of the expertise and technology needed to tackle today’s big challenges. But well-orchestrated groups of specialists, research institutions and technology companies are achieving important breakthroughs. The Dynamical Exascale Entry Platform (DEEP) project, which was formed to test the limits of Amdahl’s generalized law, is a case in point.

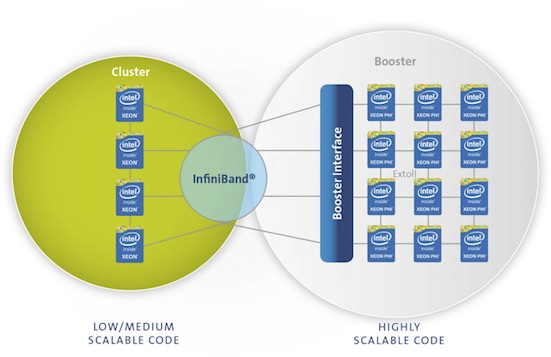

Funded by the European Commission in 2011, the DEEP project was the brainchild of scientists and researchers at the Jülich Supercomputing Centre (JSC) in Germany. The basic idea is to overcome the limitations of standard HPC systems by building a new type of heterogeneous architecture, one that could dynamically divide the less parallel and highly parallel parts of a workload between a general-purpose Cluster and a Booster, an autonomous cluster of Intel® Xeon Phi™ processors designed to dramatically improve the performance of highly parallel code.
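To make the motivation concrete, here is a minimal Python sketch of one common way to write a generalized form of Amdahl’s law for two kinds of hardware. It is an illustration only: the function name `two_part_speedup`, its parameters and the sample numbers are assumptions made for this example, not DEEP measurements or project code.

```python
def two_part_speedup(parallel_fraction: float,
                     serial_part_speed: float,
                     parallel_part_speed: float) -> float:
    """Speedup of a fixed workload split into two parts.

    parallel_fraction   -- share of the baseline runtime that is highly parallel
    serial_part_speed   -- relative speed of the hardware running the less
                           parallel part (1.0 = baseline)
    parallel_part_speed -- relative speed of the hardware running the highly
                           parallel part (1.0 = baseline)
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if serial_part_speed <= 0.0 or parallel_part_speed <= 0.0:
        raise ValueError("speeds must be positive")

    serial_fraction = 1.0 - parallel_fraction
    # Runtimes add, so the speeds combine harmonically, not arithmetically.
    new_runtime = (serial_fraction / serial_part_speed
                   + parallel_fraction / parallel_part_speed)
    return 1.0 / new_runtime


if __name__ == "__main__":
    # Hypothetical workload: 90% of the baseline runtime is highly parallel.
    print(two_part_speedup(0.9, 1.0, 10.0))  # only the parallel part is sped up
    print(two_part_speedup(0.9, 2.0, 10.0))  # the less parallel part is faster too
```

With these made-up numbers, speeding up only the parallel part tenfold gives about 5.3x overall, while also doubling the speed of the less parallel part gives about 7.1x. That is the general reason it can pay to match each part of a workload to the hardware that suits it.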

80 people from 15 leading European institutions and companies, as well as Intel, provided more than 3.5 years of input and support for the first phases of the DEEP project. Ultimately, the consortium proved the potential of the Cluster-Booster architecture to push the limits of Amdahl’s law while demonstrating that European teams are in the vanguard of supercomputing innovations.

From concept to funding

The Jülich Supercomputing Centre, which is headed by Prof. Thomas Lippert, has a long-standing interest in HPC architecture projects. In 2010 Prof. Lippert approached Intel with the idea for the Cluster-Booster architecture, looking for input on how to make the theoretical system a reality. After considering the idea, the Intel team proposed that JSC could build a viable system using Intel® Xeon® processors and Xeon Phi coprocessors. Intel also found the project interesting and committed to supporting JSC’s development of a Cluster-Booster architecture.

Figure 1: The DEEP Cluster-Booster architecture at a glance.

“Jülich Supercomputing Centre is focused on shaping and building supercomputing instruments to be at the forefront of research,” says Prof. Lippert. “When it comes to a project like DEEP, we are strongly dependent on partnerships for co-designing and optimizing our systems. In many cases, without the direct help of our partners, we could not achieve our goals.” In the case of the DEEP project, in addition to Intel, JSC turned to the HPC group of Eurotech for help building the system, along with a host of leading European companies and institutions with expertise across key software, hardware, application and networking areas. Given the promise of the DEEP project, the European Commission agreed to provide €8.3 million in funding in 2011. And with that, one of the first three Exascale research projects funded by the European Commission was off and running.

Extensive collaboration leads to success

Lippert notes that with respect to the DEEP project, the involvement of Intel was a tacit guarantee that the project could ultimately produce something and show that the Cluster-Booster concept could work. “Knowing that Intel would be collaborating on this project was a big relief because we simply could not have made as much progress without their support, especially when it comes to optimizing new processor technology,” Lippert explains.

The shared roles of all partners were equally important to the overall success of the complex project, starting with JSC, which shouldered all of the management coordination, in addition to developing much of the system software.

Intel contributed the time of five different specialists to the project, including Hans-Christian Hoppe, a Principal Engineer. Over the course of the project, Hoppe spent 50 percent of his time on site at JSC so he could work closely with other team members and help to more quickly find resolutions for issues that arose. “Early on, my colleagues from Intel and I did a lot of work to help define what the system should look like, considering things like the topology, integrating with a third-party network and how to control the Intel Xeon Phi processor Booster from the Xeon processor cluster,” says Hoppe. Intel also worked closely with the consortium to optimize applications for the system.

Figure 2: The DEEP system.

Eurotech worked with the Leibniz Supercomputing Centre (LRZ), Heidelberg University, JSC and Intel to design and build the Booster. It also provided its energy-efficient, water-cooled Aurora packaging and cooling technology, which is capable of high packing densities, for use in the Cluster-Booster system. LRZ was responsible for the system monitoring architecture and infrastructure, coming up with a novel approach for the new architecture. Heidelberg University provided key enabling technology for the Booster in the form of the highly scalable, direct-switched EXTOLL network, which proved well-suited to managing the scaling demands of the Cluster-Booster system.

Programming model work for the DEEP project was split amongst several consortium members. The Barcelona Supercomputing Center (BSC) extended their task-based OmpSs programming model to support the DEEP system. ParTec Cluster Competence Center GmbH also contributed key solutions for the system software stack, including highly efficient middleware for seamless communication between the physically autonomous parallel systems.

Six European partners provided workloads that were well-suited for the project, restructuring and optimizing them for the DEEP architecture. “The beauty of the DEEP approach is that you don’t have to completely rewrite applications and you can use an incremental approach to optimizing your application,” notes Hoppe. “You can also continue to run applications that have been adapted for DEEP on conventional architectures.”

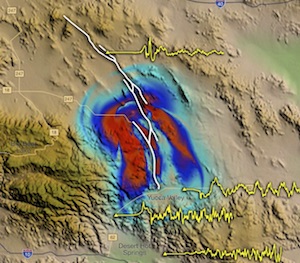

Figure 3: The DEEP team optimized six workloads for the project, including an earthquake simulation application.

It took about four years for the DEEP team to realize its vision and design and build the DEEP Booster, which includes 384 Intel Xeon Phi coprocessors. The prototype platform not only achieves up to 500 TFLOP/s in peak performance; it also provides a completely new and practical approach for breaking through the efficiency and performance barriers that are inherent in standard architectures.

Lippert notes that all of the key players remained engaged throughout the project, gaining invaluable experience in the process. “Our experience shows that if a project is the right size it can be managed so that all of the members of the team feel like a family and stay engaged and focused on achieving the goals of the project. Our key partners all stepped up to the challenges and we had the added benefit of Intel helping us with quality standards and working with upcoming European technology companies to achieve their project goals,” Lippert explains.

Breaking down compute barriers with teamwork

In any inherently complex project like DEEP there are considerable technical hurdles to overcome. In the case of the DEEP project, many of the partners had to set aside conventional approaches to come up with solutions to novel problems. For example, Intel dedicated time for direct collaboration with Eurotech to validate its systems. “Normally Intel provides reference designs and documentation to OEMs,” explains Hoppe. “In the case of the DEEP project we knew we would need to do more. For example, at a few critical junctures, we helped Eurotech debug their boards to quickly find the source of problems that had the potential to be a big holdup.”

Lippert is pleased with how the team used outside-the-box thinking for the sake of the project. “The interesting thing is that you might think a big company like Intel would be reluctant to engage on a project like this, but in fact they were helping hold the whole thing together. Hans-Christian Hoppe’s involvement at Jülich was extremely beneficial for a couple of reasons. On the one hand, he helped clarify the potential for this project to the rest of the team. On the other he helped us maintain the very high level of quality standards that are necessary to make something like this successful. So the Intel team provided a great role model for Jülich and the European partners for how certain things should be handled,” Lippert explains.

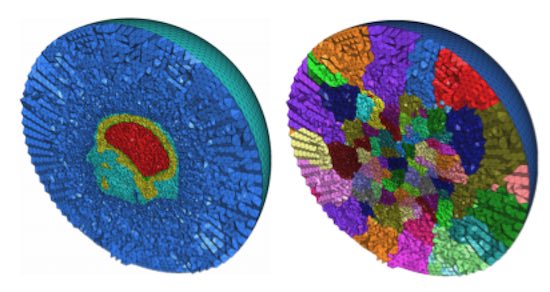

Figure 4: One of the real-world HPC applications that plays a key role in the DEEP-ER machine is MAXW-DGTD, a simulation software that models the propagation of electromagnetic fields in living tissues.

While the initial phase of the DEEP project is mostly complete, many of the original partners, and a few new ones, have moved on to phase two, known as DEEP-ER (Dynamical Exascale Entry Platform – Extended Reach). DEEP-ER is partly a refresh for DEEP using the next generation of the Xeon Phi processor, which will deliver an even higher level of performance and efficiency. It also adds fast local storage to the Booster using Intel non-volatile memory devices to improve system I/O and resiliency, among other improvements.

Overall, Prof. Lippert feels the DEEP and DEEP-ER approaches are very promising for creating a new, more efficient paradigm for HPC. “Our hope is to turn DEEP from a prototype into production systems and then to finally get the DEEP approach into our daily life… Over the longer term, I would be very happy if our DEEP-related work helps lead toward the goal of what we call modular supercomputing where we have top-level system components with different capabilities that in combination run applications with high efficiency and throughput. For instance, a Cluster-Booster system could be complemented by a memory and data analytics module, which provides a large shared-memory resource and performs post-processing of simulation data. I would like to work with Intel to help realize this because I believe this is what we need for the data-centric computing of the future,” concludes Lippert.

[1] The DEEP project is funded by the European Commission within the 7th Framework Programme under grant agreement 287530.

[2] The DEEP-ER project is funded by the European Commission within the 7th Framework Programme under grant agreement no. 610476.

Sean Thielen, the founder and owner of Sprocket Copy, is a freelance writer who specializes in high-tech subject matter.

Challenge Amdahl’s law? Didn’t someone do that back in 1988, rather successfully…?

Amdahl’s law is an algebraic truth about the way speeds add harmonically instead of arithmetically, assuming a fixed workload. The only way to challenge Amdahl’s math is to point out that the assumption was shaky; faster computers are used for bigger problems, so in measuring parallel speedup, it is the denominator that should be held constant, not the numerator. That removes the pessimistic predictions of Amdahl’s law, and explains why we have had several decades of successful use of massively parallel computers.

Thanks, John!
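The fixed-workload point made in the comment above can be sketched in a few lines of Python. The scaled-workload view is usually called Gustafson’s law. The function names and the sample numbers below are assumptions chosen for illustration, not figures from the DEEP project or from the comment.

```python
def fixed_size_speedup(serial_fraction: float, workers: int) -> float:
    """Amdahl's view: the workload (numerator) is held fixed.

    serial_fraction is the share of the single-worker runtime that cannot
    be parallelized.
    """
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / workers)


def scaled_speedup(serial_fraction: float, workers: int) -> float:
    """Scaled view: the parallel runtime (denominator) is held fixed.

    serial_fraction is the share of the runtime on the parallel machine that
    is serial. The parallel run is normalized to 1 time unit, so the same
    (larger) problem would need serial_fraction + parallel work * workers
    units on a single worker.
    """
    return serial_fraction + (1.0 - serial_fraction) * workers


if __name__ == "__main__":
    s, n = 0.05, 1024  # hypothetical: 5% serial, 1024 workers
    print(fixed_size_speedup(s, n))  # stays below 1 / s = 20
    print(scaled_speedup(s, n))      # grows almost linearly with n
```

Note that the two functions interpret `serial_fraction` against different baselines, so they are not directly comparable measurements of one program. For these made-up inputs the fixed-size speedup is about 19.6 while the scaled speedup is about 973, which illustrates why the choice of what to hold constant changes the prediction so much.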