Sponsored Post

Machine Learning is a hot topic for many industries and is showing tremendous promise to change how we use systems. From design and manufacturing to searching for cures for diseases, machine learning can be a great disrupter, when implemented to take advantage of the latest processors.

Many algorithms and data types are being developed all the time. The algorithms constantly evolve in order to tackle new problems. So how does a developer choose the hardware that will be the underlying infrastructure of a productive system ? One way is to create and run benchmarks that closely resemble the current algorithms and then understand which hardware platform performs best. While there are many algorithms, the building blocks tend to remain the same over time. While we can be sure that new algorithms will be developed in the future, if the building blocks can be categorized, understood and tuned, then hopefully future algorithms will run fast as well.

It turns out that there are 3 main building blocks that can be identified across a large number of machine learning algorithms.

- Dense Linear Algebra

- Sparse Linea Algebra

- Data dependent compute – where the next step depends on the data in the present step.

[clickToTweet tweet=”Intel has a number of products that assist greatly with Machine Learning. #intelxeon #intelxeonphi” quote=”Intel products greatly speed up machine learning applications.”]

While many may think that compute is the most important part of the system, the memory bandwidth is critical for many algorithms to run fast. Getting data in and out to the CPU is critical, and this should not be taken lightly.

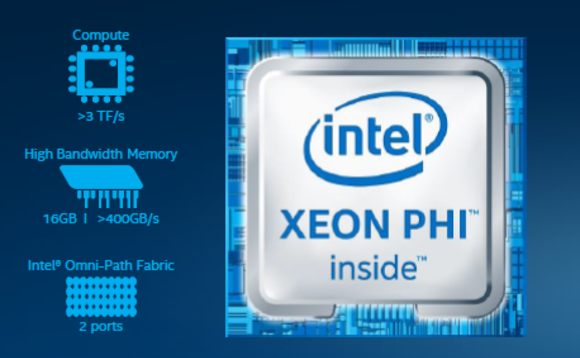

When looking at the underlying hardware for studying benchmark performance, the Intel Xeon can be compared to the Intel Xeon Phi processor. While the specifications in terms of peak performance may be higher with the Intel Xeon Phi processor, the maximum memory that can be installed is higher with the Intel Xeon, but the maximum memory bandwidth is higher with the Intel Xeon Phi processor. Thus, there are some tradeoffs that should be considered.

How do these properties affect data scientists ? Faster hardware is not critical, as the software must be able to take advantage of the hardware. The key is to optimize various applications to use the chip architecture and what it excels at.

At the lowest level, optimizing the Intel ® Math Kernel Library will lead to faster results for applications that rely on the math provided by the library. Hundreds of routines have been optimized for both the Intel Xeon and Intel Xeon Phi processor. Moving up the stack, the Intel Deep Learning SDK and deep learning framework are often built on top of the Intel MKL or similar libraries.

For a benchmark that is well understood, Intel has run Caffe/AlexNet on a variety of hardware systems, while also using the tuned libraries. From a baseline of 1.0, by using Intel MKL, the performance was 5.8 X faster. Further optimizations and running on the Intel Xeon Phi processor, showed an overall performance increase of 24X compared to the baseline. Other benchmarks show a performance gain of over 30X. This could be compared to what used to take a day to run, now might only take 1 hour.

While deep learning applications should be able to scale within a single server, including those that can benefit from utilizing the Intel Xeon Phi processor, some applications can scale across multiple servers in a cluster. Speedups on 128 node system have been demonstrated to be over 50X.

Machine learning consists of a diverse and fast evolving set of algorithms that generally rely on the same set of building blocks at a lower level. While the CPU performance is important, the memory bandwidth is also critical for high performance. The Intel Xeon Phi processor benefits applications that rely on dense linear algebra, especially for training purposes.

Download your free 30-day trial of Intel® Parallel Studio XE