In this special guest feature from Scientific Computing World, Michael Klemm, CEO, and Matthijs van Waveren, marketing coordinator for the OpenMP ARB, along with Jim Cownie, principal engineer, Intel Corporation (UK), consider the development of OpenMP over the last 20 years.

The first version of the OpenMP application programming interface (API) was published in October 1997. That was the year when Dolly the sheep was born, the first Harry Potter book was published, and the Mars Pathfinder landed. The number one machine in the Top500 list was ASCI Red, built from 9,152 Intel Pentium Pro 200MHz cores delivering 1.34 Tflops. What a year!

In the 20 years since then, the OpenMP API and the slightly older MPI have become the two stable programming models that high-performance parallel codes rely on. MPI handles the message passing aspects and allows code to scale out to significant numbers of nodes, while the OpenMP API allows programmers to write portable code to exploit the multiple cores and accelerators in modern machines.

In this article, we’ll run through the history of the OpenMP API and describe its evolution. From the very start, the OpenMP programming model has supported the changing architectures of modern machines while remaining stable and vendor-neutral. That matters more than ever in an age when the number one machine in the Top500 has over 10 million cores running at 1.45GHz and delivers 125,436 Tflops. If you live in Europe (or even if you don’t!) it’s likely your weather forecast is created with the help of OpenMP, since both the IFS code used at the European Centre for Medium-Range Weather Forecasts and the Unified Model at the UK Met Office use OpenMP directives.

What is the OpenMP API?

The OpenMP API is a directive-based high-level programming model for shared-memory parallelism. It enables programmers to write regular C/C++ or Fortran code and augment it with directives that tell the compiler how to transform the sequential code into a parallel version that smoothly executes in a multi-threaded environment.
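To make the directive idea concrete, here is a minimal sketch in C. It is an illustration only, not code from the authors: the function name `dot` and its parameters are assumptions chosen for the example. The loop is ordinary sequential C, and the single `parallel for` directive with a `reduction` clause asks the compiler to share the iterations among threads and combine their partial sums.

```c
/* Dot product of two vectors of length n (illustrative example).
   The loop body is plain C; the directive above it requests that the
   iterations be divided among threads and that each thread's partial
   sum be added into `sum` when the loop finishes. */
double dot(const double *x, const double *y, int n)
{
    double sum = 0.0;

    #pragma omp parallel for reduction(+:sum)
    for (int i = 0; i < n; i++) {
        sum += x[i] * y[i];
    }
    return sum;
}
```

If the compiler is invoked without OpenMP support, the pragma is ignored and the same source runs sequentially, which is a large part of what makes the directive approach portable.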

From its very beginning, the OpenMP API provided a high-level programming model that allows easy access to the parallel world. It also gave programmers who want the last bit of performance a path to get there, by exposing control over how the code is transformed. This vision has continued throughout the past 20 years, with added features such as tasking for irregular parallel applications, heterogeneous programming (sometimes referred to as “offloading”) for coprocessors, and support for single-instruction multiple-data machines.

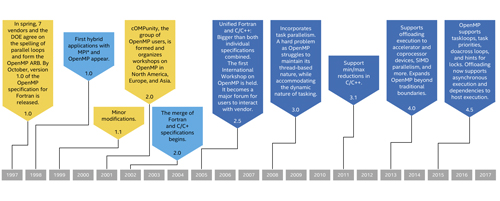

Evolution of the OpenMP Specification

The parallel programming world in 1997, when the OpenMP API was born, was different from what we experience today. Back then, systems had a central shared memory and two or more homogeneous CPUs that typically consisted of multiple chips. Each hardware vendor had their own way of programming these systems, which made the task of moving an HPC application from one system to another expensive. It was the OpenMP programming model that became the most widely accepted standard after it was published in 1997. Its success built on support from vendors and user communities, because it provided easy access to the performance of the multi-threaded world.

Summary of the Evolution of the OpenMP API Specification

The OpenMP Architecture Review Board (ARB), set up to maintain and promote the OpenMP API, published separate standards for Fortran and C, which were driven by Tim Mattson and Larry Meadows, respectively. In 2003, Mark Bull, from the Edinburgh Parallel Computing Centre (EPCC), became the new chair of the language committee and began an initiative to merge the Fortran and C specifications into a single, joint document.

With the rise of HPC as a major tool, application algorithms became more complex and moved away from simple loop-based execution. Recognising this, the OpenMP API added support for task-based programming to its core language. This made it possible to parallelise irregular applications that use recursive algorithms or traverse non-array data structures such as graphs.
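As a rough illustration of task-based programming on a non-array data structure, the sketch below sums the values in a binary tree. The `struct node` type and the function names `tree_sum` and `tree_sum_rec` are hypothetical, invented for this example. Each subtree is handed to the runtime as a task, and `taskwait` makes the parent wait for both children before combining their results.

```c
#include <stddef.h>

/* Hypothetical binary tree node used only for this illustration. */
struct node {
    long value;
    struct node *left;
    struct node *right;
};

/* Recursively sums a subtree. Each child subtree is packaged as a task
   that any thread in the team may execute. */
static long tree_sum_rec(const struct node *t)
{
    if (t == NULL) {
        return 0;
    }

    long l = 0, r = 0;

    #pragma omp task shared(l)
    l = tree_sum_rec(t->left);

    #pragma omp task shared(r)
    r = tree_sum_rec(t->right);

    /* Wait for both child tasks before reading l and r. */
    #pragma omp taskwait
    return t->value + l + r;
}

/* Entry point: one thread starts the recursion inside a parallel
   region, and the other threads pick up the generated tasks. */
long tree_sum(const struct node *root)
{
    long total = 0;

    #pragma omp parallel
    #pragma omp single
    total = tree_sum_rec(root);

    return total;
}
```

A production code would normally stop creating tasks below some subtree size, since a task per node carries overhead; that cutoff is left out here to keep the sketch short.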

A few years later, commodity hardware began to move in a new direction, adopting the vector-like features of 1970s supercomputers by introducing single-instruction multiple-data (SIMD) processing. This shift required the OpenMP language to provide features that account for this new form of parallelism. The OpenMP API was extended with new constructs that let the compiler use the SIMD instructions of these new processors.
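A short sketch of what such a construct looks like in C follows. The function name `saxpy` and its signature are assumptions made for illustration. The `simd` directive tells the compiler that the loop iterations may be executed together in SIMD lanes; the caller is assumed to pass arrays that do not overlap.

```c
/* Computes y[i] = a * x[i] + y[i] for i in [0, n) (illustrative example).
   The simd directive states that iterations are independent and may be
   executed several at a time with vector instructions.
   Assumption: x and y do not overlap in memory. */
void saxpy(int n, float a, const float *x, float *y)
{
    #pragma omp simd
    for (int i = 0; i < n; i++) {
        y[i] = a * x[i] + y[i];
    }
}
```

Whether vector instructions are actually generated still depends on the compiler and target processor; the directive removes the need for the compiler to prove independence on its own.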

Starting around 2009, HPC systems began to use specialised processors called accelerators or coprocessors. Graphics Processing Units (GPUs) were added alongside traditional processors so that their compute power could accelerate computations. Digital Signal Processors (DSPs) were used in embedded systems to handle the specialised functions needed in signal processing and filtering.

Supporting these new kinds of accelerators required new features in the OpenMP API to meet the requirements of the hardware and its programming environment. Bronis de Supinski, from Lawrence Livermore National Laboratory, then leader of the language committee, started an accelerator subgroup in the OpenMP ARB, which designed new language features (device constructs) for offloading calculations and data to accelerators.

The OpenMP API version 4.0 was released with device constructs in July 2013. About this time, Texas Instruments released an OpenMP toolkit for its DSPs and its SoCs, and Intel released its coprocessor based on the Many Integrated Core (MIC) architecture with OpenMP support. With these releases and other support in the ecosystem, the extension of the OpenMP API from pure shared-memory systems to accelerators and embedded systems was a reality. Since then, multiple vendors of accelerator and embedded systems have released products based on the OpenMP API for offloading data and calculations.
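The device constructs can be sketched with a small vector addition in C. The function name `vec_add` and its parameters are illustrative assumptions, not code from any of the products mentioned. The `target` construct requests execution on an attached device, and the `map` clauses describe which arrays are copied to the device before the loop and which are copied back afterwards.

```c
/* Adds two vectors element by element (illustrative example).
   map(to: ...)   copies the inputs to the device before the loop runs;
   map(from: ...) copies the result back to the host afterwards. */
void vec_add(int n, const double *a, const double *b, double *c)
{
    #pragma omp target teams distribute parallel for \
        map(to: a[0:n], b[0:n]) map(from: c[0:n])
    for (int i = 0; i < n; i++) {
        c[i] = a[i] + b[i];
    }
}
```

When no accelerator is available, OpenMP implementations typically fall back to running the region on the host, so the same source remains usable on a plain multicore machine.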

Applications that use the OpenMP programming model for parallelisation are countless and can be found in many scientific and non-scientific domains. The list includes, but is certainly not limited to, commercial applications such as the Altair RADIOSS, OptiStruct and FEKO products, and the DATADVANCE MACROS product; and open-source applications such as NWChem for quantum chemistry, Amber for molecular dynamics, WRF for weather forecasting, eFindSite for bioinformatics, and StingRay for radio frequency ray tracing.

Members

The OpenMP ARB is the body that oversees the OpenMP language and promotes it world-wide. Since its inauguration, the OpenMP ARB has been a vendor-neutral organisation (formally a US non-profit), with many different hardware and software vendors, academics and important HPC sites participating in defining the OpenMP API. A full list of current members can be found on the OpenMP website (http://openmp.org; the source for all things related to OpenMP). The number of members has increased from the original eight to 29 members, and the list is growing.

The ARB membership has prominent representation, with members including AMD, ARM, Cavium, Cray, Fujitsu, IBM, Intel, Micron, NEC, NVIDIA, Oracle, Red Hat, and Texas Instruments. Given that list, there are only two machines in the November 2017 Top500 list which use processors from a vendor who is not an OpenMP ARB member!

Similarly, major HPC sites such as the US national labs, Barcelona Supercomputing Center, INRIA and EPCC are involved along with leading technical universities.

Any organisation interested in helping to drive the future of the OpenMP API, or wanting to know what will be in the next version of the standard as it is being worked on, is invited to join the OpenMP ARB.

Workshops

Over the years, several conferences and workshops have been established to provide the community a forum for knowledge exchange.

The main research workshop is the yearly International Workshop on OpenMP (IWOMP) that emerged from the EWOMP, WOMPAT, and WOMPEI workshops. IWOMP alternates between the US, Europe, and the Asia Pacific region.

The workshop attracts many researchers focusing on OpenMP development, and its proceedings appear in Springer's renowned Lecture Notes in Computer Science series. Of course, other HPC-themed conferences also welcome research based on the OpenMP programming model.

OpenMPCon is a newer conference which gathers the OpenMP community to present their use of the OpenMP API in their applications and to provide a platform for knowledge exchange between OpenMP developers and the OpenMP ARB. OpenMPCon is co-located with IWOMP.

The inaugural UK OpenMP Users’ Conference will be in Oxford in May. Similar to OpenMPCon, this conference is intended to be a forum for OpenMP users to meet, discuss their achievements and findings, and to engage with the OpenMP ARB.

For user queries and discussions, there is an active OpenMP group on StackOverflow, as well as support through a forum on the OpenMP website (http://forum.openmp.org/forum/). There are also many OpenMP courses.

The Next 20 Years

Over the last 20 years, the OpenMP language has demonstrated it can adapt to changes in the hardware and software environment while maintaining its utmost relevance in HPC.

Since the beginning, it has been a key programming model that enables the use of modern hardware without requiring vendor-specific languages, and its many supporters intend to ensure that remains true for the next 20 years.

This story appears here as part of a cross-publishing agreement with Scientific Computing World.