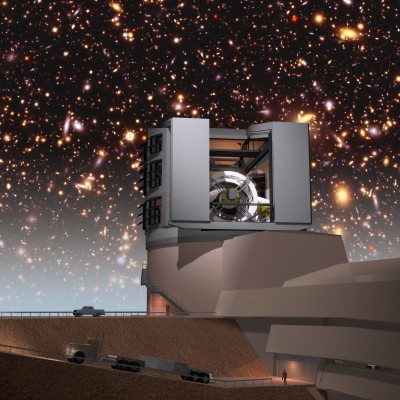

The first research area for ExaLearn’s surrogate models will be in cosmology to support projects such a the LSST (Large Synoptic Survey Telescope) now under construction in Chile and shown here in an artist’s rendering. (Todd Mason, Mason Productions Inc. / LSST Corporation)

As supercomputers become ever more capable in their march toward exascale levels of performance, scientists can run increasingly detailed and accurate simulations to study problems ranging from cleaner combustion to the nature of the universe. Enter ExaLearn, a new machine learning project supported by DOE’s Exascale Computing Project (ECP), aims to develop new tools to help scientists overcome this challenge by applying machine learning to very large experimental datasets and simulations.

The challenge is that these powerful simulations require lots of computer time. That is, they are “computationally expensive,” consuming 10 to 50 million CPU hours for a single simulation. For example, running a 50-million-hour simulation on all 658,784 compute cores on the Cori supercomputer NERSC would take more than three days. Running thousands of these simulations, which are needed to explore wide ranges in parameter space, would be intractable.

One of the areas ExaLearn is focusing on is surrogate models. Surrogate models, often known as emulators, are built to provide rapid approximations of more expensive simulations. This allows a scientist to generate additional simulations more cheaply – running much faster on many fewer processors. To do this, the team will need to run thousands of computationally expensive simulations over a wide parameter space to train the computer to recognize patterns in the simulation data. This then allows the computer to create a computationally cheap model, easily interpolating between the parameters it was initially trained on to fill in the blanks between the results of the more expensive models.

Training can also take a long time, but then we expect these models to generate new simulations in just seconds,” said Peter Nugent, deputy director for science engagement in the Computational Research Division at LBNL.

From Cosmology to Combustion

Nugent is leading the effort to develop the so-called surrogate models as part of ExaLearn. The first research area will be cosmology, followed by combustion. But the team expects the tools to benefit a wide range of disciplines.

Many DOE simulation efforts could benefit from having realistic surrogate models in place of computationally expensive simulations,” ExaLearn Principal Investigator Frank Alexander of Brookhaven National Lab said at the recent ECP Annual Meeting. “These can be used to quickly flesh out parameter space, help with real-time decision making and experimental design, and determine the best areas to perform additional simulations.”

The surrogate models and related simulations will aid in cosmological analyses to reduce systematic uncertainties in observations by telescopes and satellites. Such observations generate massive datasets that are currently limited by systematic uncertainties. Since we only have a single universe to observe, the only way to address these uncertainties is through simulations, so creating cheap but realistic and unbiased simulations greatly speeds up the analysis of these observational datasets. A typical cosmology experiment now requires sub-percent level control of statistical and systematic uncertainties. This then requires the generation of thousands to hundreds of thousands of computationally expensive simulations to beat down the uncertainties.

These parameters are critical in light of two upcoming programs:

- The Dark Energy Spectroscopic Instrument, or DESI, is an advanced instrument on a telescope located in Arizona that is expected to begin surveying the universe this year. DESI seeks to map the large-scale structure of the universe over an enormous volume and a wide range of look-back times (based on “redshift,” or the shift in the light of distant objects toward redder wavelengths of light). Targeting about 30 million pre-selected galaxies across one-third of the night sky, scientists will use DESI’s redshifts data to construct 3D maps of the universe. There will be about 10 terabytes (TB) of raw data per year transferred from the observatory to NERSC. After running the data through the pipelines at NERSC (using millions of CPU hours), about 100 TB per year of data products will be made available as data releases approximately once a year throughout DESI’s five years of operations.

- The Large Synoptic Survey Telescope, or LSST, is currently being built on a mountaintop in Chile. When completed in 2021, the LSST will take more than 800 panoramic images each night with its 3.2 billion-pixel camera, recording the entire visible sky twice each week. Each patch of sky it images will be visited 1,000 times during the survey, and each of its 30-second observations will be able to detect objects 10 million times fainter than visible with the human eye. A powerful data system will compare new with previous images to detect changes in brightness and position of objects as big as far-distant galaxy clusters and as small as nearby asteroids.

For these programs, the ExaLearn team will first target large-scale structure simulations of the universe since the field is more developed than others and the scale of the problem size can easily be ramped up to an exascale machine learning challenge.

As an example of how ExaLearn will advance the field, Nugent said a researcher could run a suite of simulations with the parameters of the universe consisting of 30 percent dark energy and 70 percent dark matter, then a second simulation with 25 percent and 75 percent, respectively. Each of these simulations generates three-dimensional maps of tens of billions of galaxies in the universe and how the cluster and spread apart as time goes by. Using a surrogate model trained on these simulations, the researcher could then quickly run another surrogate model that would generate the output of a simulation in between these values, at 27.5 and 72.5 percent, without needing to run a new, costly simulation — that too would show the evolution of the galaxies in the universe as a function of time. The goal of the ExaLearn software suite is that such results, and their uncertainties and biases, would be a byproduct of the training so that one would know the generated models are consistent with a full simulation.

Toward this end, Nugent’s team will build on two projects already underway at Berkeley Lab: CosmoFlow and CosmoGAN. CosmoFlow is a deep learning 3D convolutional neural network that can predict cosmological parameters with unprecedented accuracy using the Cori supercomputer at NERSC. CosmoGAN is exploring the use of generative adversarial networks to create cosmological weak lensing convergence maps — maps of the matter density of the universe as would be observed from Earth — at lower computational costs.

Source: Berkeley Lab

Sign up for our insideHPC Newsletter