In this video from ISC 2018, Mark Seamans from HPE describes the HPE Data Management Framework.

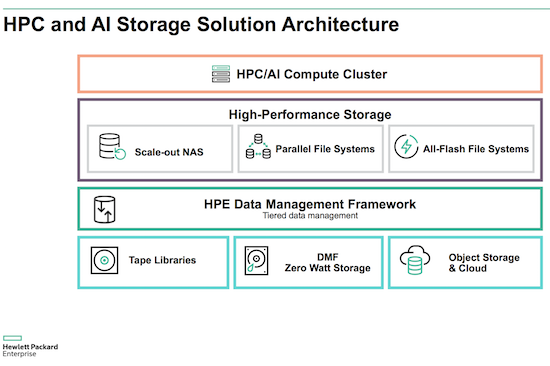

The HPE Data Management Framework (DMF) optimizes data accessibility and storage resource utilization by enabling a hierarchical, tiered storage management architecture. Data is allocated to tiers based on service level requirements defined by the administrator. For example, frequently accessed data can be placed on a high-performance flash tier, less frequently accessed data on hard drives in a capacity tier, and archive data on tape storage.

With an integrated policy engine, HPE DMF allows administrators to specify how data moves between tiers and to integrate with backup, archive, and disaster recovery mechanisms. Allocating data to the proper tier keeps it on the most cost-effective hardware that still meets its accessibility requirements. Additionally, data-intensive workflows can be streamlined through automatic staging of data, which reduces data gathering delays.
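To make the idea of policy-driven tiering concrete, here is a minimal sketch of how an age-based placement policy might be expressed. It is not HPE DMF's API or configuration format: the tier names, the idle-time thresholds, and the `TierRule`, `select_tier`, and `plan_moves` names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TierRule:
    """One policy rule: files idle for at most max_idle_days belong on this tier."""
    tier: str
    max_idle_days: float


# Hypothetical policy, ordered from fastest to cheapest tier.
# The thresholds are illustrative and do not come from HPE DMF.
RULES = [
    TierRule("flash", 7),
    TierRule("disk", 90),
    TierRule("tape", float("inf")),
]


def select_tier(idle_days: float, rules=RULES) -> str:
    """Return the first tier whose idle-time limit covers the file."""
    for rule in rules:
        if idle_days <= rule.max_idle_days:
            return rule.tier
    raise ValueError("no rule matched")


def plan_moves(files: dict[str, tuple[str, float]]) -> list[tuple[str, str, str]]:
    """Map path -> (current_tier, idle_days) to a list of (path, from, to) moves."""
    moves = []
    for path, (current, idle_days) in sorted(files.items()):
        target = select_tier(idle_days)
        if target != current:
            moves.append((path, current, target))
    return moves
```

With these assumed thresholds, a file that has sat untouched on flash for 200 days would be scheduled to move to tape, while a file on disk that was accessed 10 days ago would stay where it is. A real policy engine would also weigh factors such as file size, free space on each tier, and administrator-defined service levels.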

Features include:

- Data at the Right Time and Place. The HPE Data Management Framework enables a tiered storage architecture for HPC Linux storage environments, where tiers can be defined based upon access speed requirements. For example, data needed quickly can be placed on an SSD tier, while infrequently used data can be placed in a tape archive. Data appears online to the user or application regardless of which tier it’s on. A file can be partially recalled, with the recalled data immediately accessible even as the remainder of the file stays on a lower tier of storage. Job-specific data staging can streamline workflows by automating data gathering so that data is available when needed.

- Optimized Use of Data Storage Resources. The HPE Data Management Framework optimizes usage of storage resources. Storage capacity is used efficiently because data can be moved promptly to the most cost-effective storage mechanism based on defined access policies. ROI is improved as data resides on each tier only as long as necessary, so that data is always placed on the least expensive storage required per access policy, reducing the overall cost of storage.

- Reduced Administrative Overhead. The HPE Data Management Framework (DMF) provides automated policies that reduce manual data transfers.

- Future-proof Storage Architecture. The HPE Data Management Framework allows the seamless introduction of new storage technologies by automatically migrating, validating, and consolidating data onto new storage in the background. The migration is invisible to end users, who retain consistent access to their data throughout.

Mark Seamans is the Director, HPC and AI Data Management and Storage at Hewlett Packard Enterprise. In this role, Mark manages the strategy, engineering, and delivery teams focused on storage and data management solutions for HPE’s high-performance computing customer base, including Lustre file system solutions and the HPE Data Management Framework (DMF) tiered data platform. Prior to HPE, Mark was the Senior Director for HPC Storage Solutions at Silicon Graphics Inc. (SGI), which was acquired by HPE in November 2016. Mark joined SGI following its acquisition of FileTek, a leading provider of tiered data management software, where he was Chief Technology Officer.