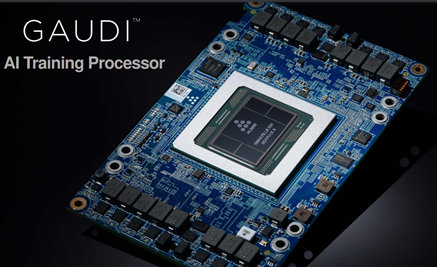

As customer validations go, it’s safe to say AWS is at the high end – *cough* – of any vendor’s wish list. So it’s understandable that Intel is crowing about a notable win for Gaudi AI co-processors from Habana Labs, an Intel company – particularly in light of Intel’s difficulties gaining a foothold in the AI accelerator market. At its virtual re:Invent conference today, AWS announced EC2 instances using up to eight Gaudi AI training chips and delivering up to 40 percent better price performance than current GPU-based EC2 instances for machine learning workloads, according to AWS.

AWS said Gaudi-based EC2 instances are designed for deep learning training workloads for such applications as natural language processing, object detection and classification, recommendation and personalization. The public cloud market leader said the new offering is intended to address the increasing complexity of ML models, which is driving up the time and cost of AI training. “Customers continue to look for ways to reduce the cost of training in order to iterate more frequently and improve the quality of their ML models,” AWS said. “Gaudi-based EC2 instances are designed to address these customer needs by delivering cost efficiency in training machine learning models.”

AWS, with a sizeable if somewhat reduced lead in the exploding global public cloud computing services market, is widely used among AI developers for training their artificial intelligence models. But the cost of training is rising as model complexity and data volumes increase. Intel said Gaudi-based EC2 instances deliver cost efficiency and high performance by integrating ten 100-GbE RoCE (RDMA over Converged Ethernet) ports into the processor chip while natively supporting common frameworks such as TensorFlow and PyTorch. Gaudi is also supported by Habana’s SynapseAI Software Suite for building training models or porting them from GPUs to Gaudi accelerators. Customers will be able to launch the EC2 instances using AWS Deep Learning AMIs, Amazon EKS and ECS for containerized applications, and Amazon SageMaker.

Intel acquired Habana in 2019 to bolster its portfolio of AI accelerators, a chip category dominated by Nvidia. Intel said the acquisition supports the company’s “XPU” strategy – offering a mix of CPUs, GPUs, FPGAs and other architectures.

“Intel’s under a transformation,” Intel’s Jeff McVeigh, VP/GM of data center XPU products and solutions, recently told us. “We’re going from what we call a CPU-centric world to an XPU-centric world… We really recognize that there’s just a diverse set of workloads and that one architecture is not appropriate for all of them. Oftentimes, they have workloads that have high portions of scalar code, matrix or vector code, or even spatial, and that’s really been driven a lot of our acquisition activities… And we’ve been doing a lot of what I would call organic development in the GPU space, to really provide a full portfolio of capabilities to our customers in the ecosystem so they can address their needs and allow them to mix and match whatever best suits their requirements.”

“Our portfolio reflects the fact that artificial intelligence is not a one-size-fits-all computing challenge,” said Remi El-Ouazzane, chief strategy officer of Intel’s Data Platforms Group. “Cloud providers today are broadly using the built-in AI performance of our Intel Xeon processors to tackle AI inference workloads. With Habana, we can now also help them reduce the cost of training AI models at scale, providing a compelling, competitive alternative in this high-growth market opportunity.”