The twice-annual TOP500 list of the world’s most powerful supercomputers is not universally loved, arguments persist whether the LINPACK benchmark is an optimal way to assess HPC system performance. But few would argue it serves a valuable purpose: for those installing leadership-class supercomputers, the TOP500 poses a challenge and a looming deadline that “concentrates the mind wonderfully.” *

This happened in 2022 when the team standing up the Frontier exascale supercomputer mounted a final-days effort to reach the exaflops milestone in time for the TOP500 list released during ISC in Germany. And it’s happening now at Argonne National Laboratory where the Aurora system, the second of three initial American exascale systems, is receiving TLC from Intel, HPE and Argonne technicians as the deadline approaches for the TOP500 list to be unveiled at the SC23 conference.

There is speculation in some circles that the Aurora project team may not attain the performance numbers it wants to participate in next month’s TOP500 list. But we sat down with project leaders from Argonne and Intel for an Aurora update, and the overall sense they conveyed was one of positive, if somewhat cautious, optimism. Both avoided providing details regarding numbers of Aurora nodes engaged or performance levels reached. But they both said multiple hundreds of project team members – the ones in “the dungeon” running benchmarks and tuning Aurora to increase LINPACK (HPL) results – are working long, intensive hours.

Aurora installation at the Argonne Leadership Computing Facility

They’ve got the mother of all supercomputers to wrestle with, a 600-ton behemoth comprised of 166 cabinets and 10,624 nodes with 300 miles of networking cables, situated in its own wing of the Argonne Leadership Computing Facility (ALCF).

When the HPE Cray EX supercomputer is fully accepted, it will likely have several “firsts” to its name. It’s expected to be the first system to deliver 2 exaflops (or close to it) of compute power, the first to integrate Intel CPUs (21,248 “Sapphire Rapids HBM” Xeon Max Series) and Intel GPUs (63,744 “Ponte Vecchio [PVC] GPU Max Series), the first to integrate Intel GPUs with the HPE Slingshot interconnect fabric, and the first world-class supercomputer in a long time for which Intel is the prime contractor.

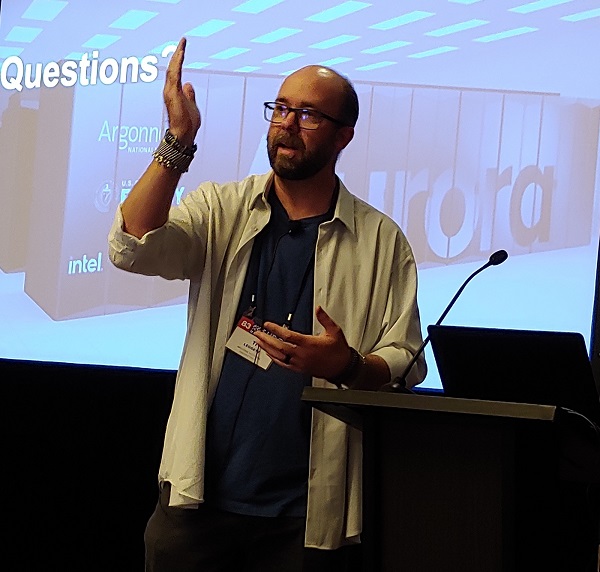

We spoke with Ti Leggett, ALCF deputy project director and deputy director of operations, and with Manoher Bommena, Intel’s vice president, super compute platforms. Within the normal range of challenges and issues involved in standing up a system as large and complex, they tended toward an optimistic outlook on the Aurora install.

Ti Leggett at last month’s HPC User Forum

“Progress is certainly being made on the bringing up and validation of the hardware,” Leggett said. “We’re still working through node-to-node connectivity issues, there’s not a whole lot of those left but there’s still some of that work to be done… as we’re bringing up and scaling up on the HPL front. So as those nodes are being moved in and we increase our HPL benchmark, those nodes are being more thoroughly validated.”

“We’ve been working to stabilize the system, make it performant running applications on it, too,” said Bommena. “And in some cases, we are actually doing well, in some cases, they are not so well, further optimization needs to happen.

He said the two chips powering the system have been vetted and tested, “…so all the ingredients and independent components have been worked on before and there’s confidence in those. But once you put them all together into one blade, and then you take that into a whole system of 10,000-plus nodes and make them all work together, I think that’s a totally different beast. And we are working on it right now. “

With all nodes and blades stood up, now the focus is on the fabric bringing Aurora aggregate compute resources to bear. “The fabric issues are being resolved,” Bommena said, “and … you start seeing where your bottlenecks are and you’re trying to fix them, those are the things that are being worked on.”

Leggett declined to share specific HPL results.

“We are at larger scale than we were a month ago, for sure,” he said. “And the performance we’re achieving is promising, I think that is how I can frame it. Things are going relatively well. That doesn’t mean that we’re out of the woods because you never are until you’re done with it.”

He described the work as an all-hands-on-deck effort from Argonne, Intel and HPE. “We are furiously working on scaling that up. You don’t do the hero run, especially on a system like this, you have to take it in steps. And sometimes it’s two steps forward one step back as you make progress. And so everybody’s fully engaged to try and get a sufficient run before the (TOP500) cut-off that looming in the next couple of weeks.”

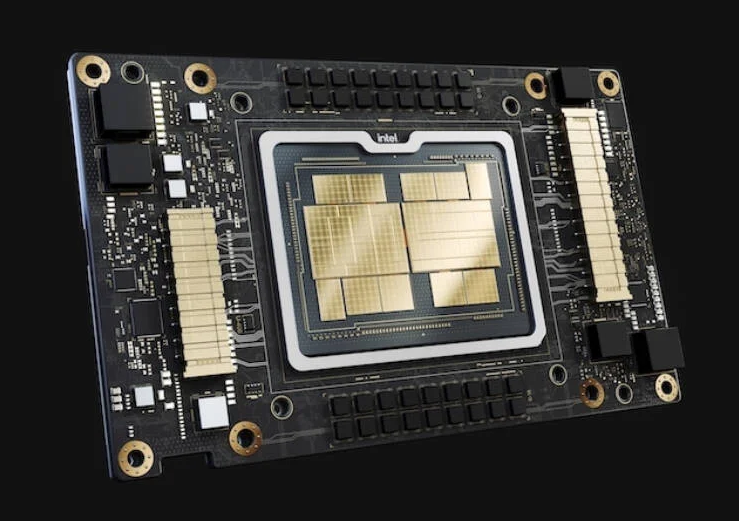

Intel’s “Ponte Vecchio” GPU Max Series

“Things are certainly looking positive,” he added. “The performance that we’re getting is in line with where we want to be.”

As has been exhaustively reported, Aurora’s GPU Max Series chip, Ponte Vecchio (PVC), has been a problem child as chip development projects go. Delayed many times over the last several years, it’s given Intel and Aurora project team members fits. But that it’s shipped and has been installed in Aurora blades, Leggett is upbeat on PVC.

“I think it’s been performing very well,” he said. “Initial observations from some of the early (Aurora) users are the same. The comparison was PVC compared to (NVIDIA) A100 and AMD MI250X GPUs. And in many cases, a lot of the codes already are on par with both of those, and in some cases are performing better on the PVC, and, obviously, there’s some that are lagging. But in general, they’re already on par and in some cases are doing better, which is good. That’s what we would love to see.”

Bommena also declined to provide specifics on Aurora’s LINPACK runs.

Manoher Bommena, Intel

“Ponte Vecchio has been exercised at scale,” Bommena said. “Previously, it was exercised at a node level itself, and a tremendous amount of validation it went through. And with respect to the lower micro benchmarks, etc., they’ve been able to get that to work on PVC and it performed to that mode. But that is only one part of the story. Once you put PVC together in the blade with the CPU, and you have the software … and you have several other components, we’ve got the switch from Broadcom, that needs to be there, and that needs to interact with each of these. So all those all those hardware components need to come together.”

Bommena said blade level validation on Aurora went well, “we were able to accomplish all the targets that we set ourselves for. But then the newer ones are really when you put it at scale. So those are the ones that we’re working on. So far, any setbacks that we’ve seen because of the scale validation … are able to get resolved through firmware and software upgrades. By the time we are done with installation, and complete the job, we should have performant software, firmware and hardware that are working together. It’s the standard process of making sure any hardware works the right way.”