The Exascale Computing Project (ECP) is working with a number of National Labs on the development of supercomputers with 50x better performance than today’s systems. In this special guest feature, ECP describes how Argonne is stepping up to the challenge. “Given the complexity and importance of exascale, it is not anything a single lab can do,” said Argonne’s Rick Stevens.

Archives for August 2017

Ace Computers Teams with BeeGFS for HPC

Ace Computers and BeeGFS have teamed to deliver a complete parallel file system that addresses the storage access bottleneck that slows down even the fastest supercomputers. Without fast storage, these clusters stall on disk access while reading input data or writing intermediate or final simulation results. BeeGFS is designed to close this gap between compute speed and storage access speed. “We are building clusters that are more and more powerful,” said Ace Computers CEO John Samborski. “So we recognized that storage access speed was becoming an issue. BeeGFS has proven to be an excellent, cost-effective solution for our clients and a valuable addition to our portfolio of partners.”

Bringing Your Kids to SC17 in Denver?

“As part of its ongoing inclusivity efforts, SC17 will once again offer on-site child care during the conference. Available to registered attendees and exhibitors for a small fee, SC17 day care is open to children between the ages of 6 months and 12 years.”

TensorFlow Deep Learning Optimized for Modern Intel Architectures

Researchers at Google and Intel recently collaborated to extract the maximum performance from Intel® Xeon and Intel® Xeon Phi processors running TensorFlow, a leading deep learning and machine learning framework. This effort resulted in significant performance gains and paves the way for similar gains on the next generation of Intel products. Optimizing Deep Neural Network (DNN) frameworks such as TensorFlow presents challenges not unlike those encountered with more traditional High Performance Computing applications for science and industry.

Globus for Google Drive Provides Unified Interface for Collaborative Research

Today Globus.org announced general availability of Globus for Google Drive, a new capability that lets users seamlessly connect Google Drive with their existing storage ecosystem, enabling a single interface for data transfer, sharing and publication across all storage systems. “Our researchers wanted to use Google Drive for data storage, but found that they had to babysit the data transfers,” said Krishna Muriki, Computer Systems Engineer, HPC Services, at Lawrence Berkeley National Laboratory. “They were already familiar with using Globus so we thought it would make a good interface for Google Drive; that’s why we partnered with Globus to develop this connector. Now our researchers have a familiar, web-based interface across all their storage resources, including Google Drive, so it is painless to move data where they need it and share results with collaborators. Globus manages all the data movement and authorization, improving security and reliability as well.”

Podcast: Could an AI Win the Nobel Prize?

In this AI Podcast, Paul Wigley from the Australian National University describes how his team of scientists applied AI to an experiment to create a Bose-Einstein condensate. In doing so, they raised a question: if we can use AI as a tool in this experiment, can we use AI as a novel scientist in its own right, to explore different parts of physics and different parts of science?

Video: MVAPICH at Petascale on the Stampede System

“This talk will focus on the role MVAPICH has played at the Texas Advanced Computing Center over multiple generations of multiple technologies of interconnect, and why it has been critical not only in maximizing interconnect performance, but overall system performance as well. The talk will include examples of how poorly tuned interconnects can mean the difference between success and failure for large systems, and how the MVAPICH software level continues to provide significant performance advantages across a range of applications and interconnects.”

Heroes of Deep Learning: Andrew Ng interviews Ian Goodfellow from Google Brain

“There were several other ways of doing generative models that had been popular for several years before I had the idea for GANs. And after I’d been working on all those other methods throughout most of my Ph.D., I knew a lot about the advantages and disadvantages of all the other frameworks like Boltzmann machines and sparse coding and all the other approaches that have been really popular for years. I was looking for something that avoided all these disadvantages at the same time.”

Embry-Riddle University Deploys Cray CS Supercomputer for Aerospace

Today Embry-Riddle Aeronautical University announced it has deployed a Cray CS400 supercomputer. The four-cabinet system will power collaborative applied research with industry partners at the University’s new research facility – the John Mica Engineering and Aerospace Innovation Complex (“MicaPlex”) at Embry-Riddle Research Park.

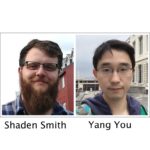

Researchers in Machine Learning Awarded George Michael Memorial HPC Fellowships

Today ACM and IEEE announced that Shaden Smith of the University of Minnesota and Yang You of the University of California, Berkeley are the recipients of the 2017 ACM/IEEE-CS George Michael Memorial HPC Fellowships. Smith is being recognized for his work on efficient and parallel large-scale sparse tensor factorization for machine learning applications. You is being recognized for his work on designing accurate, fast, and scalable machine learning algorithms on distributed systems.