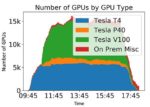

Researchers at SDSC and the Wisconsin IceCube Particle Astrophysics Center have successfully completed a second computational experiment using thousands of GPUs across Amazon Web Services, Microsoft Azure, and the Google Cloud Platform. “We drew several key conclusions from this second demonstration,” said SDSC’s Sfiligoi. “We showed that the cloudburst run can actually be sustained during an entire workday instead of just one or two hours, and have moreover measured the cost of using only the two most cost-effective cloud instances for each cloud provider.”

Supercomputing Planet Formation at SDSC

Researchers are using a novel approach to solving the mysteries of planet formation with the help of the Comet supercomputer at the San Diego Supercomputer Center on the UC San Diego campus. The modeling enabled scientists at the Southwest Research Institute (SwRI) to implement a new software package, which in turn allowed them to create a simulation of planet formation that provides a new baseline for future studies of this mysterious field. “The problem of planet formation is to start with a huge amount of very small dust that interacts on super-short timescales (seconds or less), and the Comet-enabled simulations finish with the final big collisions between planets that continue for 100 million years or more.”

SC19 Invited Talk: Next Generation Disaster Intelligence Using the Continuum of Computing and Data Technologies

Dr. Ilkay Altintas from SDSC gave this Invited Talk at SC19. “This talk will review some of our recent work on building this dynamic data driven cyberinfrastructure and impactful application solution architectures that showcase integration of a variety of existing technologies and collaborative expertise. The lessons learned on use of edge and cloud computing on top of high-speed networks, open data integrity, reproducibility through containerization, and the role of automated workflows and provenance will also be summarized.”

Qumulo Unified File Storage Reduces Administrative Burden While Consolidating HPC Workloads

Qumulo showcased its scalable file storage for high-performance computing workloads at SC19. The company helps innovative organizations gain real-time visibility, scale and control of their data across on-prem and the public cloud. “More and more HPC institutes are looking to modern solutions that help them gain insights from their data, faster,” said Molly Presley, global product marketing director for Qumulo. “Qumulo helps the research community consolidate diverse workloads into a unified, simple-to-manage file storage solution. Workgroups focused on image data, analytics, and user home directories can share a single solution that delivers real-time visibility into billions of files while scaling performance on-prem or in the cloud to meet the demands of the most intensive research environments.”

Video: Characterizing Network Paths in and out of the Clouds

Igor Sfiligoi from SDSC gave this talk at CHEP 2019. “Cloud computing is becoming mainstream, with funding agencies moving beyond prototyping and starting to fund production campaigns, too. An important aspect of any production computing campaign is data movement, both incoming and outgoing. And while the performance and cost of VMs is relatively well understood, the network performance and cost is not.”

SDSC Conducts 50,000+ GPU Cloudburst Experiment with Wisconsin IceCube Particle Astrophysics Center

In all, some 51,500 GPU processors were used during the approximately two-hour experiment conducted on November 16 and funded under a National Science Foundation EAGER grant. The experiment used simulations from the IceCube Neutrino Observatory, an array of some 5,160 optical sensors deep within a cubic kilometer of ice at the South Pole. In 2017, researchers at the NSF-funded observatory found the first evidence of a source of high-energy cosmic neutrinos – subatomic particles that can emerge from their sources and pass through the universe unscathed, traveling for billions of light years to Earth from some of the most extreme environments in the universe.

PSI Researchers Using Comet Supercomputer for Flu Forecasting Models

Researchers at Predictive Science, Inc. are using the Comet supercomputer at SDSC for flu forecasting models. “These calculations fall under the category of ‘embarrassingly parallel’, which means we can run each model, for each week and each season on a single core,” explained Ben-Nun. “We received a lot of support and help when we installed our codes on Comet and in writing the scripts that launched hundreds of calculations at once – our retrospective studies of past influenza seasons would not have been possible without access to a supercomputer like Comet.”
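
The "embarrassingly parallel" pattern Ben-Nun describes can be sketched roughly as follows. This is only an illustration, not PSI's code: the model names, the `run_model` placeholder computation, and the task layout are all assumptions made for the example. The point is that each (model, season, week) run is independent, so the runs can be handed to separate cores without any coordination between them.

```python
from concurrent.futures import ProcessPoolExecutor
from itertools import product


def run_model(task):
    """Stand-in for one independent forecast run (hypothetical placeholder)."""
    model, season, week = task
    # Placeholder arithmetic; a real run would fit the model to that week's data.
    score = (len(model) * 31 + season * 7 + week) % 100
    return model, season, week, score


def build_tasks(models, seasons, weeks):
    """One task per (model, season, week); no task depends on another."""
    return list(product(models, seasons, weeks))


def run_all(tasks, workers=4):
    """Distribute the tasks over worker processes, one task per process at a time."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(run_model, tasks))


if __name__ == "__main__":
    # Hypothetical model names and seasons, chosen only for the example.
    tasks = build_tasks(["model_a", "model_b"], [2016, 2017], range(1, 4))
    for result in run_all(tasks):
        print(result)
```

On a cluster, the same idea is usually expressed as a batch script that launches many single-core jobs rather than as one Python process pool, but the independence of the tasks is what makes either approach work.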

Supercomputing the Future Path of Wildfires

In this KPBS video, firefighters in the field tap an SDSC supercomputer to battle wildfires. Fire officials started using the supercomputer’s WIFIRE tool in September. WIFIRE is sophisticated fire modeling software that uses real-time data to run rapid simulations of a fire’s progress. “It helps to see where the fire is likely going to go,” said Raymond De Callafon, a UCSD engineer who worked on the project. “So, a fire department can use this for planning purposes with their limited resources. It can also be used to plan, maybe their aircraft that will go over. Where to put the fire out.”

SDSC Awarded NSF Grant for Triton Stratus

The National Science Foundation has awarded SDSC a two-year grant worth almost $400,000 to deploy a new system called Triton Stratus. “Triton Stratus will provide researchers with improved facilities for utilizing emerging computing paradigms and tools, namely interactive and portal-based computing, and scaling them to commercial cloud computing resources. Researchers, especially data scientists, are increasingly using tools such as Jupyter notebooks and RStudio to implement computational and data analysis functions and workflows.”

NSF Funds $10 Million for ‘Expanse’ Supercomputer at SDSC

SDSC has been awarded a five-year grant from the NSF valued at $10 million to deploy Expanse, a new supercomputer designed to advance research that is increasingly dependent upon heterogeneous and distributed resources. “As a standalone system, Expanse represents a substantial increase in the performance and throughput compared to our highly successful, NSF-funded Comet supercomputer. But with innovations in cloud integration and composable systems, as well as continued support for science gateways and distributed computing via the Open Science Grid, Expanse will allow researchers to push the boundaries of computing and answer questions previously not possible.”