Modeling on SDSC’s Comet Supercomputer Reveals Findings on Pregnancy-related Hypertension

According to the Centers for Disease Control and Prevention (CDC), preeclampsia, or pregnancy-related hypertension, occurs in roughly one in 25 pregnancies in the United States. Its causes are unknown, and the only remedy is childbirth, which can sometimes mean adverse perinatal outcomes such as preterm delivery. To better understand this serious pregnancy complication, which reduces blood supply to the fetus, researchers used Comet at the San Diego Supercomputer Center (SDSC) at UC San Diego to conduct cellular modeling that details the differences between normal and preeclamptic placental tissue.

XSEDE-Allocated Supercomputers, Comet and Stampede2, Accelerate Alzheimer’s Research

By Kimberly Mann Bruch, San Diego Supercomputer Center Communications

Since 2009, Daniel Tward and his collaborators have analyzed more than 47,000 images of human brains via MRI Cloud, a gateway created to collect and share quantitative information from human brain images, including subtle changes in shape and cortical thickness. The latter was the topic of […]

MIT Researchers Develop Neural Networks for Computational Chemistry Using SDSC, PSC Supercomputers

Even though computational chemistry represents a challenging arena for machine learning, a team of researchers from the Massachusetts Institute of Technology (MIT) may have made it easier. Using Comet at the San Diego Supercomputer Center at UC San Diego and Bridges at the Pittsburgh Supercomputing Center, they succeeded in developing an artificial intelligence (AI) approach to detect electron correlation – the interaction between a system’s electrons – which is vital but expensive to calculate in quantum chemistry.

SDSC Makes Comet Supercomputer Available for COVID-19 Research

With the U.S. and many other countries working ‘round the clock to mitigate the devastating effects of the COVID-19 disease, SDSC is providing priority access to its high-performance computer systems and other resources to researchers working to develop an effective vaccine in as short a time as possible. “For us, it absolutely crystalizes SDSC’s mission, which is to deliver lasting impact across the greater scientific community by creating innovative end-to-end computational and data solutions to meet the biggest research challenges of our time. That time is here.”

Supercomputing Ocean Wave Energy

Researchers are using XSEDE supercomputers to help develop ocean waves into a sustainable energy source. “We primarily used our simulation techniques to investigate inertial sea wave energy converters, which are renewable energy devices developed by our collaborators at the Polytechnic University of Turin that convert wave energy from large bodies of water into electrical energy,” explained study co-author Amneet Pal Bhalla from SDSU.

XSEDE Supercomputers Complete Simulations Pertinent to Coronavirus, DNA Replication

Fundamental research supported by XSEDE supercomputers could help lead to new strategies and better technology that combats infectious and genetic diseases. “Chemical reactions, life, doesn’t happen that quickly,” Roston said. “It happens on a timescale of people talking to each other. Bridging this gap in timescale of many, many orders of magnitude requires many steps in your simulations. It very quickly becomes computationally intractable.”

SDSC Announces Comprehensive Data Sharing Resource

SDSC has announced the launch of HPC Share, a data sharing resource that will enable users of the Center’s high-performance computing resources to easily transfer, share, and discuss their data within their research teams and beyond. “HPC Share bridges a major gap in our current cyberinfrastructure by offering a turnkey system that eliminates barriers among collaborating researchers so that they can quickly access and review scientific results with context,” said SDSC Director Michael Norman. “Users will benefit from reduced complexity, ubiquitous accessibility, and more importantly rapid knowledge exchange.”

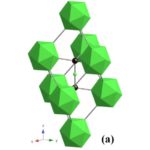

Supercomputing the Mysteries of Boron Carbide

Researchers at the University of Florida are using XSEDE supercomputers to unlock the mysteries of boron carbide, one of the hardest materials on earth. The material is also very lightweight, which explains why it has been used in making vehicle armor and body protection for soldiers. The research, which primarily used the Comet supercomputer at the San Diego Supercomputer Center along with the Stampede and Stampede2 systems at the Texas Advanced Computing Center, may provide insight into better protective mechanisms for vehicle and soldier armor after further testing and development.

PSI Researchers Using Comet Supercomputer for Flu Forecasting Models

Researchers at Predictive Science, Inc. are using the Comet supercomputer at SDSC to run flu forecasting models. “These calculations fall under the category of ‘embarrassingly parallel’, which means we can run each model, for each week and each season on a single core,” explained Ben-Nun. “We received a lot of support and help when we installed our codes on Comet and in writing the scripts that launched hundreds of calculations at once – our retrospective studies of past influenza seasons would not have been possible without access to a supercomputer like Comet.”
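For readers unfamiliar with the term, the "embarrassingly parallel" pattern in the quote can be illustrated with a minimal Python sketch. This is not the PSI team's code: the model names, seasons, week range, and the `fit_one`, `build_tasks`, and `run_sweep` helpers are all hypothetical placeholders, and the "fit" is a dummy calculation. The point is only that each (model, season, week) combination is an independent task that can be handed to its own worker.

```python
from concurrent.futures import ProcessPoolExecutor
from itertools import product

# Hypothetical placeholders: the article does not name the models,
# seasons, or week ranges that were actually used.
MODELS = ["model_a", "model_b"]
SEASONS = ["2015-16", "2016-17"]
WEEKS = range(1, 5)


def fit_one(task):
    """Stand-in for one independent forecast fit; returns a dummy score."""
    model, season, week = task
    # A real fit would load surveillance data and calibrate the model here.
    return (model, season, week, len(model) + week)


def build_tasks(models=MODELS, seasons=SEASONS, weeks=WEEKS):
    """One task per (model, season, week); no task depends on another."""
    return list(product(models, seasons, weeks))


def run_sweep(tasks, executor_cls=ProcessPoolExecutor, max_workers=None):
    """Spread independent tasks over workers; results keep task order."""
    with executor_cls(max_workers=max_workers) as pool:
        return list(pool.map(fit_one, tasks))
```

Calling `run_sweep(build_tasks())` would spread the 16 placeholder tasks across the available cores. On a cluster, the same idea is usually expressed through the batch scheduler rather than a single Python process, but the structure is the same: many small jobs with no communication between them.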

NSF Funds $10 Million for ‘Expanse’ Supercomputer at SDSC

SDSC has been awarded a five-year grant from the NSF valued at $10 million to deploy Expanse, a new supercomputer designed to advance research that is increasingly dependent upon heterogeneous and distributed resources. “As a standalone system, Expanse represents a substantial increase in the performance and throughput compared to our highly successful, NSF-funded Comet supercomputer. But with innovations in cloud integration and composable systems, as well as continued support for science gateways and distributed computing via the Open Science Grid, Expanse will allow researchers to push the boundaries of computing and answer questions previously not possible.”