Let’s Talk Exascale Podcast Looks at Co-Design Center for Particle-Based Applications

In this Let’s Talk Exascale podcast, Tim Germann from Los Alamos National Laboratory discusses the ECP’s Co-Design Center for Particle Applications (COPA). “COPA serves as centralized clearinghouse for particle-based methods, and as first users on immature simulators, emulators, and prototype hardware. Deliverables include ‘Numerical Recipes for Particles’ best practices, libraries, and a scalable open exascale software platform.”

Video: D-Wave Systems Seminar on Quantum Computing from SC17

In this video from SC17 in Denver, Bo Ewald from D-Wave Systems hosts a Quantum Computing Seminar. “We will discuss quantum computing, the D-Wave 2000Q system and software, the growing software ecosystem, an overview of some user projects, and how quantum computing can be applied to problems in optimization, machine learning, cyber security, and sampling.”

Podcast: The Exascale Data and Visualization project at LANL

In this episode of Let’s Talk Exascale, Scott Gibson discusses the ECP Data and Visualization project with Jim Ahrens from Los Alamos National Lab. Ahrens is principal investigator for the Data and Visualization project, which is responsible for the storage and visualization aspects of the ECP and for helping its researchers understand, store, and curate scientific data.

Trinity Supercomputer lands at #7 on TOP500

The Trinity Supercomputer at Los Alamos National Laboratory was recently named a top-10 supercomputer on two lists: it ranked third on the High Performance Conjugate Gradients (HPCG) benchmark and seventh on the TOP500 list. “Trinity has already made unique contributions to important national security challenges, and we look forward to Trinity having a long tenure as one of the most powerful supercomputers in the world,” said John Sarrao, associate director for Theory, Simulation and Computation at Los Alamos.

UCX and UCF Projects for Exascale Move Forward at SC17

In this video, Jeff Kuehn from LANL, Pavel Shamis from ARM, and Gilad Shainer from Mellanox describe progress on the new UCF consortium, a collaboration between industry, laboratories, and academia to create an open-source, production-grade communication framework for data-centric and high-performance applications.

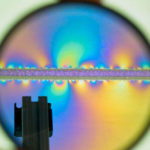

Video: An Affordable Supercomputing Testbed based on Raspberry Pi

In this video from SC17, Bruce Tulloch from BitScope describes a low-cost Raspberry Pi cluster that LANL can use to simulate large-scale supercomputers. “The BitScope Pi Cluster Modules system creates an affordable, scalable, highly parallel testbed for high-performance-computing system-software developers. The system comprises five rack-mounted BitScope Pi Cluster Modules consisting of 3,000 cores using Raspberry Pi ARM processor boards, fully integrated with network switching infrastructure.”

Video: The Legion Programming Model

“Developed by Stanford University, Legion is a data-centric programming model for writing high-performance applications for distributed heterogeneous architectures. Legion provides a common framework for implementing applications which can achieve portable performance across a range of architectures. The target class of users dictates that productivity in Legion will always be a second-class design constraint behind performance. Instead Legion is designed to be extensible and to support higher-level productivity languages and libraries.”

LANL Steps Up to HPC for Materials Program

“Understanding and predicting material performance under extreme environments is a foundational capability at Los Alamos,” said David Teter, Materials Science and Technology division leader at Los Alamos. “We are well suited to apply our extensive materials capabilities and our high-performance computing resources to industrial challenges in extreme environment materials, as this program will better help U.S. industry compete in a global market.”

Predicting Earthquakes with Machine Learning

Researchers at LANL are using machine learning to predict earthquakes. “The novelty of our work is the use of machine learning to discover and understand new physics of failure, through examination of the recorded auditory signal from the experimental setup. I think the future of earthquake physics will rely heavily on machine learning to process massive amounts of raw seismic data. Our work represents an important step in this direction.”

LANL’s Herbert Van de Sompel to Receive Paul Evan Peters Award

“For the last two decades Herbert, working with a range of collaborators, has made a sustained series of key contributions that have helped shape the current networked infrastructure to support scholarship,” noted CNI executive director Clifford Lynch. “While many people accomplish one really important thing in their careers, I am struck by the breadth and scope of his contributions.” Lynch added, “I’ve had the privilege of working with Herbert on several of these initiatives over the years, and I was honored in 2000 to be invited to serve as a special external member of the PhD committee at the University of Ghent, where he received his doctorate.”